Cornell And NTT Researchers Introduce Deep Physical Neural Networks To Train Physical Systems To Perform Machine Learning Computations Using Backpropagation

Deep-learning models have become commonplace across research and engineering, but their energy requirements limit how far they can scale. Specialized hardware has contributed to the widespread use of deep neural networks (DNNs), and the growing DNN energy problem has inspired DNN ‘accelerators,’ which are generally based on a direct mathematical isomorphism between the hardware physics and the mathematical operations in DNNs.

One way to achieve efficient machine learning is to design hardware with a strict, operation-by-operation mathematical isomorphism, so that trained mathematical transformations can be applied directly. A potentially more efficient route is to train the hardware's physical transformations directly to perform the desired computations.

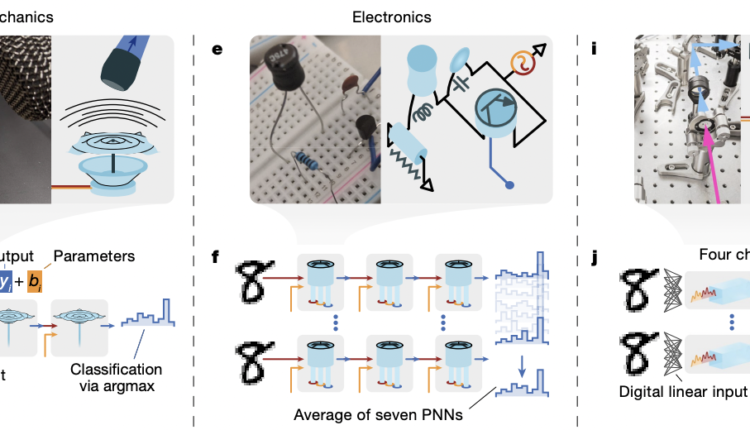

Cornell and NTT researchers introduce a new technique to train physical systems, such as computer speakers and lasers, to do machine-learning computations like recognizing handwritten digits and spoken vowel sounds. They demonstrate a viable alternative to conventional electronic processors by turning three physical systems (mechanical, optical, and electrical) into the same kind of neural networks that power services like Google Translate and online search. This alternative has the potential to be orders of magnitude faster and more energy-efficient than the power-hungry chips behind many artificial intelligence applications.

Artificial neural networks work by mathematically applying a sequence of parameterized functions to input data. In their study, the researchers investigate whether physical systems can execute such computations more efficiently or quickly than traditional computers. They aimed to find a generic way of applying machine learning that would work for any physical system.
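To make the idea of "a sequence of parameterized functions" concrete, here is a minimal Python sketch. The layer shape, the `tanh` nonlinearity, and all numeric values are illustrative assumptions and are not taken from the paper.

```python
import math

def layer(x, weights, bias):
    """One parameterized function: a weighted sum of the inputs, then tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]

def network(x, params):
    """Apply each parameterized layer in sequence to the input data."""
    for weights, bias in params:
        x = layer(x, weights, bias)
    return x

# Hypothetical parameters; training would adjust these numbers.
params = [
    ([[0.5, -0.2], [0.1, 0.8]], [0.0, 0.1]),  # layer 1: 2 inputs -> 2 outputs
    ([[1.0, -1.0]], [0.0]),                   # layer 2: 2 inputs -> 1 output
]
output = network([1.0, 2.0], params)
```

In a physical neural network, each call to `layer` would be replaced by a real physical transformation whose controllable settings play the role of `weights` and `bias`.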

The mechanical system was created by placing a titanium plate over a commercially available speaker, forming what physicists call a driven multimode mechanical oscillator. The optical system consisted of a laser shone through a nonlinear crystal that combined pairs of photons, converting the incoming light into new colors. The third experiment employed a simple electronic circuit consisting of only four components: a resistor, a capacitor, an inductor, and a transistor.

In each trial, the pixels of a handwritten-digit image were encoded in a pulse of light or an electrical voltage sent into the apparatus. The system processed the data and produced an optical pulse or voltage as its output. The systems first have to be trained to do the proper processing: the researchers adjusted particular input parameters and ran many samples, such as different digits in different handwriting, through the physical system, then used a laptop computer to work out how the parameters should be adjusted to maximize accuracy on the task.

The researchers call these systems physical neural networks (PNNs), a term that emphasizes that physical processes, rather than mathematical operations, are being trained. This distinction isn’t just semantic: by breaking down the traditional software-hardware divide, PNNs make it possible to build neural network hardware from nearly any controllable physical system.

They employ a universal, backpropagation-based framework to train arbitrary physical systems. Their method, physics-aware training (PAT), relies on a hybrid in situ–in silico approach and allows the backpropagation algorithm to be executed efficiently and accurately on any sequence of physical input-output transformations.

The PAT method trains the system in the following steps:

- Training input data (for example, an image) and trainable parameters are sent into the physical system.

- In the forward pass, the physical system applies its transformation to produce an output.

- To calculate the error, the physical output is compared to the intended output.

- The loss gradient is evaluated with respect to the controllable parameters using a differentiable digital model.

- Finally, the parameters are updated based on the estimated gradient. Repeating these steps iteratively over the training samples lowers the error.

The main benefit of PAT is that the forward pass is performed by genuine physical hardware rather than a simulation.
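The steps above can be sketched with a toy, single-parameter example in Python. This is not the authors' released code: `physical_system` is a hypothetical stand-in for real hardware (a simulated response with gain error, distortion, and noise), `model_grad_theta` is the gradient of an assumed digital model `tanh(theta * x)`, and the dataset, learning rate, and epoch count are arbitrary choices.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the simulated noise is reproducible

def physical_system(x, theta):
    """Hypothetical stand-in for hardware: the true response has a gain error,
    a small distortion, and noise that the digital model does not capture."""
    return 1.1 * math.tanh(theta * x) + 0.03 * math.sin(3 * x) + rng.gauss(0.0, 0.01)

def model_grad_theta(x, theta):
    """Gradient w.r.t. theta of the assumed digital model f(x, theta) = tanh(theta * x)."""
    return x * (1.0 - math.tanh(theta * x) ** 2)

# Toy regression task (assumed): make the system's output follow tanh(2x).
inputs = [i / 10 for i in range(-10, 11)]
targets = [math.tanh(2.0 * x) for x in inputs]

def mean_squared_error(theta):
    """Error measured on the (simulated) physical outputs."""
    return sum((physical_system(x, theta) - t) ** 2
               for x, t in zip(inputs, targets)) / len(inputs)

theta = 0.5          # trainable parameter, arbitrary starting value
learning_rate = 0.5  # arbitrary choice for this sketch
initial_error = mean_squared_error(theta)

for epoch in range(200):
    grad = 0.0
    for x, t in zip(inputs, targets):
        y = physical_system(x, theta)   # forward pass on the "hardware"
        dloss_dy = 2.0 * (y - t)        # error uses the physical output
        grad += dloss_dy * model_grad_theta(x, theta)  # backward pass via digital model
    theta -= learning_rate * grad / len(inputs)        # parameter update

final_error = mean_squared_error(theta)
```

Even though the digital model in this sketch omits the gain error and distortion of the simulated hardware, its gradient still points in a useful direction, so the error measured on the physical outputs goes down.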

The researchers have publicly released their physics-aware training algorithm so that others can use it to turn their own physical systems into neural networks. The training algorithm is general enough to be used with practically any such system, including fluids and exotic materials. In addition, several systems can be linked together to exploit the most useful processing capabilities of each.

This study suggests that nearly any controllable physical system can be turned into a neural network. However, it remains to be determined which physical systems are best suited to important machine-learning tasks. The team states that they are now working on a strategy for answering that question.

Paper: https://www.nature.com/articles/s41586-021-04223-6.pdf
