NVIDIA AI Research Team Presents a Deep Reinforcement Learning (RL)-Based Approach to Create Smaller and Faster Circuits

Moore’s law observes that the number of transistors on a microchip doubles roughly every two years. As Moore’s law slows, it becomes increasingly important to find other ways to improve chip performance at the same technology process node.

NVIDIA has unveiled a method that uses artificial intelligence to design smaller, faster, and more efficient circuits, delivering more performance with each new generation of chips. The work shows that AI can learn to design these circuits from scratch using deep reinforcement learning.

According to the company, the most recent NVIDIA Hopper GPU architecture contains nearly 13,000 instances of AI-designed circuits. The underlying problem is a trade-off between circuit area and delay: NVIDIA’s goal is to produce the circuit with the smallest possible area that still meets the desired delay. The study, titled PrefixRL, focuses on a class of arithmetic circuits called parallel prefix circuits. The AI agent is trained to design prefix graphs while optimizing for the properties of the final circuit generated from each graph.
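For readers new to prefix circuits, here is a minimal illustration using an adder. Each bit position i produces a generate bit (a_i AND b_i) and a propagate bit (a_i XOR b_i), and the carry into each position is a prefix combination of these pairs under an associative operator. Because the operator is associative, the combinations can be grouped in many ways, and each grouping is a different prefix graph. The sketch below is our own illustration, not code from PrefixRL: it compares a serial (ripple) grouping with the textbook Sklansky grouping, and it uses operator-node count and number of levels only as rough stand-ins for area and delay.

```python
from typing import Callable, List, Tuple

GP = Tuple[int, int]  # (generate, propagate) for a contiguous span of bit positions


def combine(hi: GP, lo: GP) -> GP:
    """Associative prefix operator: merge a span with the adjacent lower span."""
    g_hi, p_hi = hi
    g_lo, p_lo = lo
    return (g_hi | (p_hi & g_lo), p_hi & p_lo)


def serial_prefix(gp: List[GP]) -> Tuple[List[GP], int, int]:
    """Ripple-style grouping. Returns (prefixes, operator_nodes, levels)."""
    out, nodes = [gp[0]], 0
    for i in range(1, len(gp)):
        out.append(combine(gp[i], out[-1]))  # each bit waits on the previous one
        nodes += 1
    return out, nodes, len(gp) - 1


def sklansky_prefix(gp: List[GP]) -> Tuple[List[GP], int, int]:
    """Sklansky (divide-and-conquer) grouping, written for power-of-two widths."""
    n = len(gp)
    out, level, nodes, span = list(gp), [0] * n, 0, 1
    while span < n:
        for i in range(n):
            if (i // span) % 2 == 1:            # upper half of a 2*span block
                j = (i // span) * span - 1      # top bit of the lower half
                out[i] = combine(out[i], out[j])
                level[i] = max(level[i], level[j]) + 1
                nodes += 1
        span *= 2
    return out, nodes, max(level)


def add(a: int, b: int, n: int,
        prefix: Callable[[List[GP]], Tuple[List[GP], int, int]] = sklansky_prefix) -> int:
    """n-bit addition whose carries come from the chosen prefix structure."""
    gp = [((a >> i) & (b >> i) & 1, ((a >> i) ^ (b >> i)) & 1) for i in range(n)]
    prefixes, _, _ = prefix(gp)
    carries = [0] + [g for g, _ in prefixes]    # carries[i] = carry into bit i
    total = 0
    for i in range(n):
        total |= (gp[i][1] ^ carries[i]) << i   # sum bit = propagate XOR carry-in
    return total | (carries[n] << n)            # append the carry-out bit
```

For an 8-bit adder, `serial_prefix` uses 7 operator nodes across 7 levels, while `sklansky_prefix` uses 12 nodes across only 3 levels. Saving nodes costs depth and vice versa, which is the kind of area/delay trade-off the agent has to navigate.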

The NVIDIA team built an environment for prefix circuits in which the reinforcement learning agent can add a node to or delete a node from the prefix graph. They trained the agent to optimize arithmetic circuits for both area and delay: at every step, the agent is rewarded with the resulting improvement in circuit area and delay.
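An add/delete environment of this kind can be sketched in a few dozen lines. This is a toy under stated assumptions, not NVIDIA’s code: we store the prefix graph as a set of (msb, lsb) grid nodes, take the upper parent of node (i, j) to be the nearest node (i, k) in the same row with k > j and its lower parent to be (k - 1, j), and after each action re-add any missing lower parents so the graph stays valid. Node count and logic depth stand in for the synthesized area and delay that PrefixRL actually measures, and the reward is a hypothetical weighted sum with weight `w`.

```python
from typing import AbstractSet, Dict, FrozenSet, Iterable, Tuple

Node = Tuple[int, int]  # (msb, lsb): a node spanning bit positions lsb..msb

# Toy sketch (assumed representation): node count and logic depth are proxies,
# not the synthesized area and delay that PrefixRL measures.


def split_point(g: AbstractSet[Node], i: int, j: int) -> int:
    """Smallest k > j with (i, k) in g: upper parent (i, k), lower parent (k - 1, j)."""
    return min(k for k in range(j + 1, i + 1) if (i, k) in g)


def legalize(nodes: Iterable[Node], n: int) -> FrozenSet[Node]:
    """Force inputs (i, i) and outputs (i, 0), then add missing lower parents until stable."""
    g = set(nodes) | {(i, i) for i in range(n)} | {(i, 0) for i in range(n)}
    changed = True
    while changed:
        changed = False
        for i, j in sorted(g):  # iterate over a snapshot while g may grow
            if i != j:
                k = split_point(g, i, j)
                if (k - 1, j) not in g:
                    g.add((k - 1, j))
                    changed = True
    return frozenset(g)


def area_delay(g: FrozenSet[Node], n: int) -> Tuple[int, int]:
    """Area proxy = operator-node count; delay proxy = deepest output level."""
    level: Dict[Node, int] = {}
    for i, j in sorted(g, key=lambda node: node[0] - node[1]):  # parents are narrower
        if i == j:
            level[(i, j)] = 0
        else:
            k = split_point(g, i, j)
            level[(i, j)] = 1 + max(level[(i, k)], level[(k - 1, j)])
    area = sum(1 for i, j in g if i != j)
    return area, max(level[(i, 0)] for i in range(n))


def step(g: FrozenSet[Node], action: str, node: Node, n: int,
         w: float = 0.5) -> Tuple[FrozenSet[Node], float]:
    """Apply "add" or "delete", re-legalize, and return (new_graph, reward)."""
    i, j = node
    if not 0 < j < i < n:
        raise ValueError("only non-input, non-output nodes can be edited")
    if action == "add":
        new = legalize(g | {node}, n)
    elif action == "delete":
        new = legalize(g - {node}, n)  # re-added if another node still needs it
    else:
        raise ValueError(f"unknown action {action!r}")
    (a0, d0), (a1, d1) = area_delay(g, n), area_delay(new, n)
    # Hypothetical reward: weighted improvement (positive when area/delay shrink).
    return new, w * (a0 - a1) + (1 - w) * (d0 - d1)
```

Starting from the ripple graph `legalize(set(), 4)` (3 operator nodes, 3 levels), adding node (3, 2) costs one extra node but saves one level, so with `w = 0.5` the two changes cancel and the reward is 0. Adding (3, 1) instead makes legalization insert the missing lower parent (2, 1).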

PrefixRL is computationally demanding: the physical simulation requires 256 CPUs for each GPU, and training the 64-bit case takes more than 32,000 GPU hours. To handle this, NVIDIA developed Raptor, a distributed reinforcement learning platform that takes advantage of NVIDIA hardware for industrial-scale reinforcement learning and improves both scalability and training speed.
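The summary does not describe Raptor’s internals, so the following is not Raptor’s design or API. It is a minimal, generic sketch of two standard ways to cope with slow reward computation: skip states whose metrics are already known (a cache), and dispatch the remaining evaluations concurrently. `evaluate_batch` and `fake_synthesis` are hypothetical names; `fake_synthesis` stands in for a call to an external synthesis tool, which is why a thread pool is enough to overlap the waiting.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, FrozenSet, Hashable, List, Tuple

Metrics = Tuple[int, int]  # (area, delay)


def evaluate_batch(states: List[Hashable],
                   evaluate: Callable[[Hashable], Metrics],
                   cache: Dict[Hashable, Metrics],
                   max_workers: int = 8) -> List[Metrics]:
    """Evaluate a batch of states, skipping duplicates and previously seen states."""
    todo = [s for s in dict.fromkeys(states) if s not in cache]  # unique, uncached, in order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for state, result in zip(todo, pool.map(evaluate, todo)):
            cache[state] = result
    return [cache[s] for s in states]


calls: List[FrozenSet[int]] = []  # records every real (uncached) evaluation


def fake_synthesis(state: FrozenSet[int]) -> Metrics:
    """Hypothetical stand-in for a slow external synthesis run."""
    calls.append(state)
    time.sleep(0.01)  # pretend this takes a long time
    return len(state), sum(state)
```

In a batch such as `[frozenset({1, 2}), frozenset({3}), frozenset({1, 2})]`, only two evaluations actually run, and calling `evaluate_batch` again with the same cache runs none.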

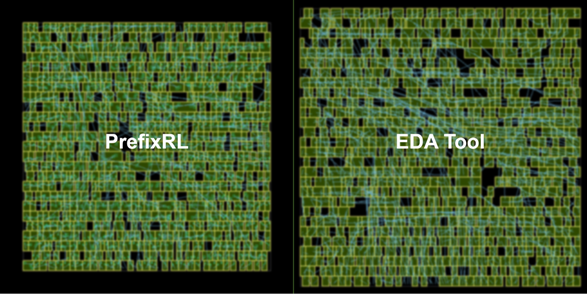

According to the findings, the best PrefixRL adder achieved 25 percent less area than the adder produced by an electronic design automation (EDA) tool while operating at the same delay. NVIDIA hopes the method can serve as a blueprint for applying AI to real-world circuit design problems, which involves constructing action spaces, state representations, and RL agent models, optimizing for multiple competing objectives, and overcoming slow reward computation processes such as physical synthesis.
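Optimizing for competing objectives means there is no single best adder, only a set of area/delay trade-offs. A common way to summarize such results is a Pareto front: the designs that no other design beats on both area and delay. The sketch below computes one from (area, delay) pairs and shows how a figure like “25 percent less area at the same delay” is derived. The function names are ours, and the numbers in the test are invented for illustration, not data from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (area, delay); lower is better for both


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated (area, delay) points, ordered by increasing delay."""
    front: List[Point] = []
    best_area = float("inf")
    for area, delay in sorted(set(points), key=lambda p: (p[1], p[0])):
        # Every earlier point has delay <= this one, so keep it only if it beats their area.
        if area < best_area:
            front.append((area, delay))
            best_area = area
    return front


def area_reduction(baseline_area: float, new_area: float) -> float:
    """Fractional area saving relative to a baseline design at the same delay."""
    return (baseline_area - new_area) / baseline_area
```

For example, a design with area 75 against a same-delay baseline with area 100 gives `area_reduction(100, 75) == 0.25`, i.e. 25 percent less area.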

This Article is written as a summary article by Marktechpost Staff based on the research paper 'PrefixRL: Optimization of Parallel Prefix Circuits using Deep Reinforcement Learning'. All Credit For This Research Goes To Researchers on This Project.

