Researchers At Seoul National University Developed A Deep Learning Framework To Improve A Robotic Sketching Agent’s Skills

The researchers’ primary objective was to build something cool with non-rule-based techniques such as deep learning; they felt that drawing would be a cool thing to show off if the one doing the drawing was a trained robot rather than a human. Recent advances in deep learning have produced astounding artistic results, but most of these techniques focus on generative models that produce all of an image’s pixels at once.

Deep learning algorithms have recently produced impressive results in many fields, including the arts. In fact, many computer scientists around the world have built models that can produce artistic works such as poems, paintings, and sketches.

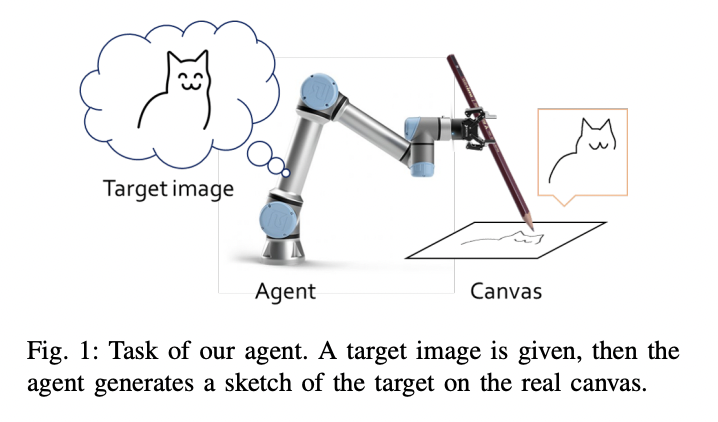

Researchers at Seoul National University have recently unveiled a new artistic deep learning framework intended to improve a sketching robot’s capabilities. Their method, described in a paper presented at ICRA 2022 and pre-published on arXiv, enables a sketching robot to learn motor control and stroke-based rendering simultaneously.

The researchers developed a framework that treats drawing as a sequential decision process, rather than building a generative model that creates artworks by generating particular pixel patterns. This step-by-step procedure is similar to how people gradually build up a sketch from individual lines drawn with a pen or pencil.
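To make the idea of drawing as a sequential decision process concrete, here is a minimal sketch in Python. It is not the authors' implementation: the grid canvas, the straight-line `Stroke` action, and the pixel-difference reward are all assumptions chosen for illustration. Each step takes the current canvas and one stroke, and returns the new canvas plus a reward that is positive when the stroke brings the canvas closer to the target image.

```python
from typing import List, Tuple

Canvas = List[List[int]]            # hypothetical binary canvas: 1 = ink, 0 = blank
Stroke = Tuple[int, int, int, int]  # hypothetical action: (x0, y0, x1, y1) in pixels


def blank_canvas(size: int) -> Canvas:
    """Create an empty square canvas."""
    return [[0] * size for _ in range(size)]


def draw_stroke(canvas: Canvas, stroke: Stroke) -> Canvas:
    """Return a copy of the canvas with one straight stroke drawn on it.

    Assumes both endpoints lie inside the canvas.
    """
    x0, y0, x1, y1 = stroke
    out = [row[:] for row in canvas]
    steps = max(abs(x1 - x0), abs(y1 - y0), 1)
    for i in range(steps + 1):
        x = round(x0 + (x1 - x0) * i / steps)
        y = round(y0 + (y1 - y0) * i / steps)
        out[y][x] = 1
    return out


def pixel_error(canvas: Canvas, target: Canvas) -> int:
    """Count the pixels where the canvas and the target disagree."""
    return sum(c != t for c_row, t_row in zip(canvas, target)
               for c, t in zip(c_row, t_row))


def step(canvas: Canvas, target: Canvas, stroke: Stroke) -> Tuple[Canvas, int]:
    """One decision step: apply a stroke and reward the reduction in error."""
    new_canvas = draw_stroke(canvas, stroke)
    reward = pixel_error(canvas, target) - pixel_error(new_canvas, target)
    return new_canvas, reward
```

An agent trained in a setting like this never outputs a finished image in one shot; it picks one stroke at a time and is rewarded only to the extent that each stroke improves the picture.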

The researchers planned to apply this framework to a robotic sketching agent so that it could create sketches in real time with an actual pen or pencil. While other teams have developed deep learning algorithms for “robot artists,” those models typically required large training datasets of sketches and drawings, as well as inverse kinematics techniques, to teach the robot to operate a pen and sketch with it.

In contrast, no examples of actual drawings were used to train the new framework. Instead, it develops its own sketching strategies over time through trial and error.

Furthermore, the researchers say that their framework does not use inverse kinematics, which tends to make robot movements somewhat rigid; instead, it lets the system develop its own movement tricks so that its movement style is as organic as possible. In other words, in contrast to how most robotic systems typically operate, it moves its joints directly, without the need for primitives.
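As a rough illustration of what controlling joints directly can look like, the Python sketch below uses a hypothetical two-link planar arm (the robot in the paper has six degrees of freedom). The action is a small change in each joint angle, and forward kinematics is used only to observe where the pen tip ended up; no inverse kinematics solver is ever asked for the joint angles of a desired position. The link lengths, the clipping limit, and the distance-based reward are assumptions for illustration, not details from the paper.

```python
import math
from typing import Tuple

Joints = Tuple[float, float]  # joint angles in radians (hypothetical 2-joint arm)
Point = Tuple[float, float]

LINK_LENGTHS = (1.0, 1.0)  # assumed link lengths, arbitrary units


def pen_tip(joints: Joints) -> Point:
    """Forward kinematics: where the pen tip is for the given joint angles."""
    a1, a2 = joints
    l1, l2 = LINK_LENGTHS
    x = l1 * math.cos(a1) + l2 * math.cos(a1 + a2)
    y = l1 * math.sin(a1) + l2 * math.sin(a1 + a2)
    return x, y


def apply_action(joints: Joints, deltas: Joints, max_delta: float = 0.1) -> Joints:
    """Joint-space action: clip each requested angle change, then apply it."""
    a1, a2 = joints
    d1, d2 = (max(-max_delta, min(max_delta, d)) for d in deltas)
    return a1 + d1, a2 + d2


def progress_reward(before: Joints, after: Joints, target: Point) -> float:
    """Positive when the action moved the pen tip closer to the target point."""
    return math.dist(pen_tip(before), target) - math.dist(pen_tip(after), target)
```

A reinforcement learning policy in this kind of setup outputs the `deltas` itself and learns from the reward which joint motions are useful, which is what leaves room for it to find its own movement style.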

The research team’s framework includes two “virtual agents”: an upper-class agent and a lower-class agent. The upper-class agent learns new drawing techniques, while the lower-class agent learns efficient movement strategies.

The two virtual agents were trained independently with reinforcement learning and were coupled only after each had finished its own training. The researchers then tested their combined performance in a series of real-world experiments using a 6-DoF robotic arm with a 2D gripper. The results of these initial tests were highly positive: the robotic agent was able to sketch the target images it was given.
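The coupling of two independently trained agents can be pictured with the Python sketch below. Both policies here are hand-written stand-ins, not trained networks, and every name in it is hypothetical: `upper_policy` simply returns the next waypoint of the drawing, and `lower_policy` greedily picks, from a small set of joint nudges, the one that brings the pen tip of an assumed two-link arm closest to that waypoint. The point is the interface: the upper agent decides where to draw, the lower agent decides how to move, and the two meet only at run time.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Joints = Tuple[float, float]


def pen_tip(joints: Joints) -> Point:
    """Forward kinematics of an assumed two-link arm with unit-length links."""
    a1, a2 = joints
    return (math.cos(a1) + math.cos(a1 + a2),
            math.sin(a1) + math.sin(a1 + a2))


def upper_policy(remaining: List[Point]) -> Point:
    """Stand-in for the drawing agent: choose the next waypoint to reach."""
    return remaining[0]


def lower_policy(joints: Joints, waypoint: Point, nudge: float = 0.01) -> Joints:
    """Stand-in for the motor agent: choose joint deltas, not a pen position."""
    candidates = [(d1, d2) for d1 in (-nudge, 0.0, nudge)
                  for d2 in (-nudge, 0.0, nudge)]
    return min(candidates, key=lambda d: math.dist(
        pen_tip((joints[0] + d[0], joints[1] + d[1])), waypoint))


def run_hierarchy(waypoints: List[Point], joints: Joints,
                  tolerance: float = 0.05, max_steps: int = 500):
    """Couple the two policies: the upper one sets goals, the lower one moves."""
    remaining = list(waypoints)
    trace = []  # pen-tip positions visited, i.e. the line actually drawn
    for _ in range(max_steps):
        if not remaining:
            break
        goal = upper_policy(remaining)
        d1, d2 = lower_policy(joints, goal)
        joints = (joints[0] + d1, joints[1] + d2)
        trace.append(pen_tip(joints))
        if math.dist(pen_tip(joints), goal) <= tolerance:
            remaining.pop(0)  # waypoint reached; move on to the next one
    return joints, trace, remaining
```

Because neither function depends on how the other works internally, each could in principle be replaced by a separately trained policy without changing the loop, which is the appeal of a decoupled hierarchy.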

This article is a research summary written by Marktechpost Staff based on the research paper 'From Scratch to Sketch: Deep Decoupled Hierarchical Reinforcement Learning for Robotic Sketching Agent'. All credit for this research goes to the researchers on this project.


