Deep learning is being widely used to solve data-analysis-related problems. These models require intensive training in physical data centers before they can be deployed in software and devices like cell phones. This is a time-consuming and energy-intensive process. New analog technology, like memristor arrays, may be more energy efficient. However, due to discrepancies between the analytically computed training information and the imprecision of actual analog devices, the popular backpropagation training methods are typically incompatible with such hardware.

New research by Texas A&M University, Rain Neuromorphics, and Sandia National Laboratories has developed a novel system for training deep learning models at scale and more efficiently. Using novel training algorithms and multitasking memristor crossbar hardware, the team has attempted to develop a method that would make training AI models cheaper and less taxing on the environment.

Their study addresses two major shortcomings of conventional approaches to AI training:

- The utilization of graphics processing units (GPUs) that aren’t optimized for running and training deep learning models is the first problem.

- Using inefficient and mathematically intensive software programs, particularly the backpropagation technique.

The backpropagation process involves extensive data movement and solving mathematical equations and is currently used in virtually all training. However, due to hardware noise, mistakes, and low precision, memristor crossbars are incompatible with standard backpropagation algorithms designed for high-precision digital electronics.

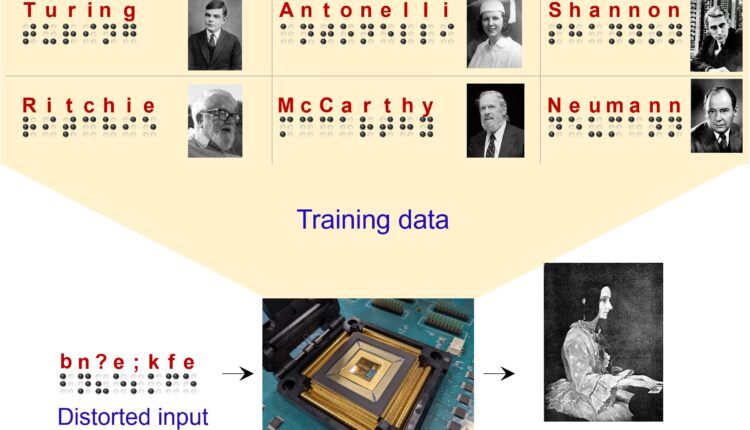

The proposed algorithm-hardware system investigates the variance in behavior of a neural network’s synthetic neurons across two regimes: one in which the network is free to generate any output and another in which the output is compelled to be the target pattern to be discovered.

The required weights to coax the system to the right conclusion can be predicted by analyzing the disparity in answers from the system. This allows for local training, which is how the brain acquires new skills and eliminates the need for the complex mathematical equations involved in backpropagation. Thanks to this, the energy-efficient implementation of AI in edge devices would otherwise necessitate large cloud servers, which consume enormous amounts of electrical power. This can potentially make large-scale deep-learning algorithm training more economically viable and sustainable.

Their findings show that compared to even the most powerful GPUs available today, this method can drastically cut the energy needed for AI training by as much as 100,000 times.

The team plans to investigate several learning algorithms for training deep neural networks that take inspiration from the brain and identify those that perform well over a range of networks and resource limitations. They believe that their work can pave the way for the consolidation of formerly disparate data centers onto individual users’ devices, cutting down on the energy consumption often involved with AI training and encouraging the creation of additional neural networks designed to aid or simplify common human tasks.

Check out the Paper and Reference Article. All Credit For This Research Goes To Researchers on This Project. Also, don’t forget to join our Reddit page and discord channel, where we share the latest AI research news, cool AI projects, and more.

![]()

Tanushree Shenwai is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology(IIT), Bhubaneswar. She is a Data Science enthusiast and has a keen interest in the scope of application of artificial intelligence in various fields. She is passionate about exploring the new advancements in technologies and their real-life application.

Credit: Source link

Comments are closed.