With millions of images and videos posted daily, visual filters have become an essential feature of social media platforms, allowing users to enhance and customize their content with various effects and adjustments. These filters have changed the way we communicate and share experiences, making it easy to create visually appealing content that captures an audience’s attention.

Moreover, with the rise of AI, these filters have become even more sophisticated, allowing us to manipulate video content in previously impossible ways with just a few clicks. AI-powered video filters can automatically adjust lighting, color balance, and other elements of a video, allowing creators to achieve a professional-quality look without extensive technical knowledge.

Although very powerful, these filters are designed with pre-defined parameters, so they cannot generate consistent color styles for images with diverse appearances. Therefore, careful adjustments by the users are still necessary. To address this problem, color style transfer techniques have been introduced to automatically map the color style from a well-retouched image (i.e., the style image) to another (i.e., the input image).

Existing techniques, however, produce results affected by artifacts such as color and texture inconsistencies, and they require a significant amount of time and resources to run. For this reason, a novel framework for color style transfer, called Neural Preset, has been developed.

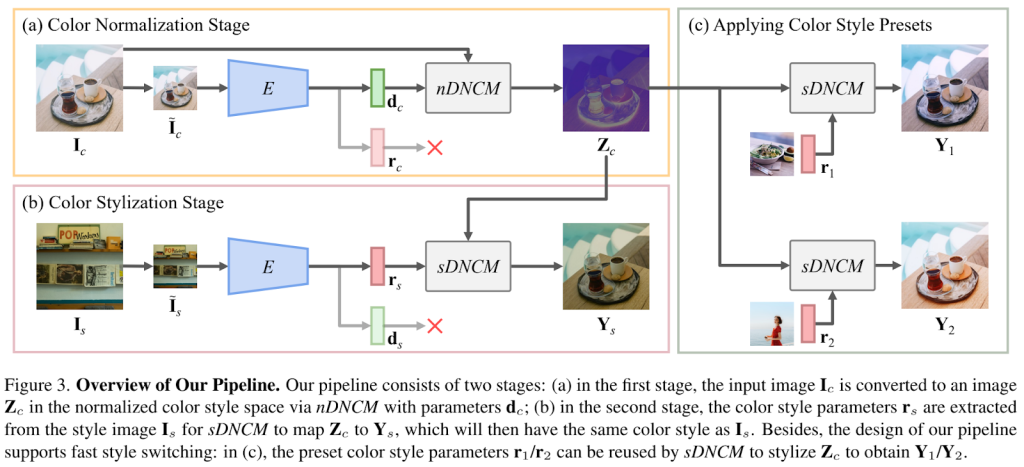

An overview of the workflow is depicted in the figure above.

The proposed method differs from current state-of-the-art techniques by employing Deterministic Neural Color Mapping (DNCM) instead of convolutional models for color mapping. DNCM uses an image-adaptive color mapping matrix, so pixels of the same color in the input are always mapped to the same output color, which effectively eliminates unrealistic artifacts. Additionally, DNCM operates on each pixel independently, which keeps the memory footprint small and supports high-resolution inputs. Unlike conventional 3D filters that rely on regressing tens of thousands of parameters, DNCM can model arbitrary color mappings using only a few hundred learnable parameters.
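To make the per-pixel idea concrete, here is a minimal NumPy sketch of a matrix-based color mapping. It is an illustration under stated assumptions, not the authors' implementation: the projection matrices `P` and `Q`, the hidden size `k = 16`, and the random values standing in for learned or predicted weights are all assumptions made for this example.

```python
import numpy as np

def dncm(image, T, P, Q):
    """Map every RGB pixel x to x @ P @ T @ Q, independently of its neighbors."""
    h, w, _ = image.shape
    flat = image.reshape(-1, 3)        # (H*W, 3): one row per pixel
    mapped = flat @ P @ T @ Q          # project up, apply mapping matrix, project back
    return mapped.reshape(h, w, 3)

k = 16                                 # assumed hidden size; T then has k*k = 256 entries
rng = np.random.default_rng(0)
P = rng.normal(size=(3, k))            # assumed projection RGB -> k dims (random stand-in)
Q = rng.normal(size=(k, 3))            # assumed projection k dims -> RGB (random stand-in)
T = rng.normal(size=(k, k))            # stand-in for the image-adaptive mapping matrix

image = rng.random((4, 6, 3))          # tiny placeholder image with values in [0, 1)
image[3, 5] = image[0, 0]              # give two pixels the same input color
out = dncm(image, T, P, Q)
```

Because the mapping is a fixed chain of matrix products applied to each pixel on its own, two pixels with the same input color always receive the same output color, and memory use grows only linearly with the number of pixels.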

Neural Preset works in two distinct stages, allowing for quick switching between different styles. The underlying structure relies on the encoder E, which predicts parameters employed in the normalization and stylization stages.

The first stage creates an nDNCM from the input image, normalizing the colors and mapping the image to a color-style space representing the content. The second stage builds an sDNCM from the style image, which stylizes the normalized image to the desired target color style. This design guarantees that the parameters of sDNCM can be saved as color style presets and utilized by different input images. Furthermore, the input image can be styled using a variety of color-style presets after being normalized with nDNCM.
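The two-stage flow and the reuse of presets can be sketched as follows. This is a hedged illustration only: `toy_encoder` is a hypothetical, untrained stand-in for the encoder E, and the projection matrices, the hidden size `K`, and the helper names `make_preset` and `apply_presets` are assumptions introduced for this example. The output colors are therefore not meaningful; the sketch only shows how data moves through the stages.

```python
import numpy as np

K = 16                                            # assumed hidden size
rng = np.random.default_rng(0)
P = rng.normal(scale=0.1, size=(3, K))            # assumed projections (random stand-ins)
Q = rng.normal(scale=0.1, size=(K, 3))
W_NORM = rng.normal(scale=0.1, size=(3, K * K))   # toy weights for normalization params
W_STYLE = rng.normal(scale=0.1, size=(3, K * K))  # toy weights for stylization params

def dncm(image, T):
    """Per-pixel color mapping: x -> x @ P @ T @ Q."""
    h, w, _ = image.shape
    return (image.reshape(-1, 3) @ P @ T @ Q).reshape(h, w, 3)

def toy_encoder(image):
    """Hypothetical stand-in for encoder E: returns (normalization, stylization) params."""
    thumb = image[::2, ::2]                       # crude downsample
    stats = thumb.reshape(-1, 3).mean(axis=0)     # mean color as a toy image descriptor
    d = (stats @ W_NORM).reshape(K, K)            # parameters for nDNCM
    r = (stats @ W_STYLE).reshape(K, K)           # parameters for sDNCM
    return d, r

def make_preset(style_image):
    """Keep only the stylization parameters; these can be stored and reused."""
    _, r = toy_encoder(style_image)
    return r

def apply_presets(input_image, presets):
    """Normalize once (stage 1), then stylize with each stored preset (stage 2)."""
    d, _ = toy_encoder(input_image)
    normalized = dncm(input_image, d)
    return {name: dncm(normalized, r) for name, r in presets.items()}

input_image = rng.random((8, 8, 3))               # placeholder images
presets = {
    "warm": make_preset(rng.random((8, 8, 3))),
    "cool": make_preset(rng.random((8, 8, 3))),
}
results = apply_presets(input_image, presets)
```

Note that the style images are only needed when a preset is created. Switching styles afterwards reuses the normalized image and costs one extra per-pixel mapping per preset.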

A comparison of the proposed approach with the state-of-the-art techniques is presented below.

According to the authors, Neural Preset significantly outperforms state-of-the-art methods in several respects: accurate results for 8K images, consistent color style transfer across video frames, and a ∼28× speedup on an Nvidia RTX3090 GPU, supporting real-time performance at 4K resolution.

This was the summary of Neural Preset, an AI framework for real-time and color-consistent high-quality style transfer.

If you are interested or want to learn more about this work, you can find a link to the paper and the project page.

All credit for this research goes to the researchers on this project.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He is currently working in the Christian Doppler Laboratory ATHENA and his research interests include adaptive video streaming, immersive media, machine learning, and QoS/QoE evaluation.
