
Maturi Jagadish (Jag) is the Director of Product Management for Brain Corp. We sat down recently for a conversation about the current state of product development at Brain Corp, and a look at the future roadmap.

Editor’s note: This interview with Jag was edited for brevity and clarity.

Mike Oitzman: Tell us about the Brain Corp modular robot concept. The big question is: is Brain Corp moving into applications beyond floor cleaning?

Jag: Just to give a little historical context, our go-to-market approach from an autonomy point of view has always been through OEM partnerships. That’s been our primary strategy for how we have built robots and gone to market. A few years ago, we partnered with companies like Tennant and Nilfisk for floor cleaning, and those are the types of companies that are experts in that specific application.

We partner with OEMs using what we call an autonomy kit. The autonomy kit is essentially all the pieces that help an OEM turn its manual machine into a robotic one.

That’s how we started the journey. But as markets mature and robotic adoption grows, customer expectations are rising as well. Previously, a dual-purpose machine was acceptable, letting users do some tasks manually and some tasks robotically.

As adoption grows, expectations for how much these machines should help people get their jobs done keep rising. Users want less manual operation and more autonomous operation.

It’s a paradigm shift that we have noticed over the last few years in customer expectations for robotics and autonomy. Looking at the technology we have, I think we made significant progress with sensor technologies, including 2D LiDAR, and with purpose-built robots that get a specific job done in a specific area. So when we think about purposeful robotics alongside growing autonomy expectations, we see a need to evolve our current generation of technology into the next generation, with the goal of zero-touch operation, or zero-assist autonomy, for the end user.

With that higher-level vision for autonomy in mind, we saw that we needed to evolve parts of our core navigation and mapping technology into next-generation building blocks. When we say modular robotics, the core building blocks stay the same: your navigation, your controller, and your cameras. What varies is how we apply them to a given form factor.

For example, if I step back and take a higher-level view, most robots are either rectangular or round. Thinking about it from the form factor point of view, how do we adapt? Where do I put my sensors? Where do I put my cameras for that specific application? If we build those building blocks and provide the tools needed, going from one application to the next should not be as challenging.

That’s the idea behind modular robotics: enabling our customers to get to market quickly with their specific application. We will be the platform guys who give them all the building blocks they need.

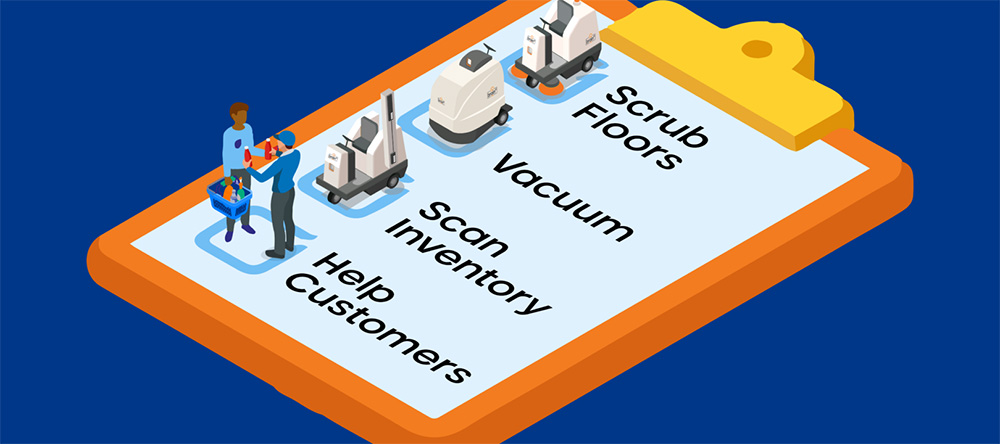

Brain Corp’s strategy is to build a modular perception and control package to fit onto any OEM vehicle. | Credit: Brain Corp

I see that Brain Corp wants to build more modularity into the fit and function so that it can retrofit a broader range of OEM systems. Are you now thinking about building a kit of parts that can be the basis for a system built from the ground up, rather than a fully engineered system designed with your OEM partners?

Yes, definitely. To help OEMs get to market faster, we are building the autonomy components, and we are also building what we call reference designs. A reference design is essentially a piece of commercial-looking equipment that isn’t fully commercial. It conveys what the product should be and gives you a starting point for what it would look like. Instead of taking our core specifications and assembling them yourself, you already have a mechanical form factor and the concept.

That can take you to the application pretty quickly. One of the reference designs we recently announced at NRF is a purpose-built robot for shelf scanning. It’s built on our Gen Three autonomy system, which is essentially the core navigation and mapping technology. Navigation is built around a hemispherical 3D LiDAR rather than the 2D LiDAR we have been using on our generation two systems.

That’s the type of upgrade: you’re going from a two-dimensional LiDAR to a three-dimensional LiDAR, and the data coming out of the sensor is far richer. That enables much more precise navigation, more precise mapping of the environment, and better maneuvering within the space, such as rerouting around objects. People tend to take this core functionality for granted when they talk about applications, because a lot of the autonomy pieces are becoming commonplace. We are trying to make sure we move that core functionality and autonomy system to the next level.

With the next generation of Brain Corp software, can you configure a system with vision only and no LiDAR in the vehicle’s sensor package?

Most of our generation three is banking on LiDAR being present in the autonomous system. We are working toward a vision-based system, but we still feel that a combination of LiDAR and cameras will dominate the market for the next few years. We shouldn’t dismiss either one; both have their own pros and cons. We combine the rich data from the 3D LiDAR with the 2D RGB cameras in our autonomy system through sensor fusion, and with that fusion I think we can deliver far superior navigation and mapping technology built for specific applications.

So from retail or commercial spaces, going to an industrial space is not a big leap. When we take this technology into big open spaces, such as industrial facilities, the accuracy will be there so robots don’t get lost, and so will localization, because the LiDAR can see the ceiling. Now that we have a 3D LiDAR that can see the whole space, I think using all of that data will make us much more accurate and better localized within that space, so robots won’t get lost as often as they did with some of the 2D LiDAR challenges. It’s a technology challenge for everyone.

Do you think of this as a kit of parts that can be reconfigured as necessary for whatever use case comes?

I think providing a reference design built up from those parts takes the OEM much further along the journey toward market fit. For example, the purpose-built shelf-scanning robot we launched at NRF is a reference design. It’s essentially a two-piece design. We are partnering with Google for vision processing of the shelf imagery.

So why start with a reference design instead of releasing a full product?

We took all the components and the autonomy kit, and we quickly built a reference design. As the next step, we are working with OEM partners to take it to market. Because this reference design is fully or nearly fully built up, the time and effort an OEM needs to turn it into a commercial product become much smaller.

That’s the idea. But think about how we build these gen three systems. Imagine that you don’t have the tower, just the base with the LiDAR. We could essentially configure that base with shelves or some sort of bins, and very quickly it could become a warehouse logistics application. With this base-plus-application architecture and a reference design, the adjacent markets we can address become that much more reachable, because the system is configurable, or modular, from an application point of view. We want to make it easier to go from “I don’t know anything about robotics, but I know everything about the application” to a functional system. Take all the pieces you need, then build something on top of them to get to market quickly. That’s the idea behind our generation three architecture.

Brain Corp built up one of the largest fleets of autonomous floor scrubbers through its partnership with OEM providers like Tennant. | Credit: Brain Corp

So is your short- to medium-term roadmap focused on indoor, retail-related applications, and then expanding into warehouse and logistics, while still staying with indoor mobile robotics applications?

Yeah, indoor is the core, and retail and commercial spaces are our focus right now. The retail analytics market itself is huge. We are taking a very methodical approach to which verticals we go after. We started with floor care, and now we are moving into retail and the analytics space.

Think of it this way: “Can we take this architecture into an eCommerce or tech application, or a small-parts movement application in a factory?” I think that’s not a big deal. We would need some software development for custom applications in that space, but it’s not a big leap going from one market to the other. Still, we are very focused on the retail and commercial space for now. We think methodically as opportunities arise because, like any organization, we have finite engineering resources to tap into, so we need to create a focus for the organization.

Brain Corp has one of the largest deployed fleets of floor scrubbers in the world. I know you’ve learned a lot over the last five years about that market. You’ve evolved the solution to support this large fleet, and hopefully, you can deliver it more cheaply than competitors in this market.

Yes. As in any technology industry, the sensors, controllers, and other components needed will come down the price curve over time. We see competition coming into this space as well. We think the opportunity is there for us to lead and solve this critical use case for retailers: labor is becoming harder to find, and the tasks and time needed to capture this data and stay on top of it are becoming more and more challenging. Humans also make errors, and with a workforce that’s constantly changing, it is hard to keep people trained on new processes and to get repeatability on the same task. Robotics will help document the store information retailers need on hand to solve those problems.

For us, inventory management is the first application that we are going after in generation three. Other adjacent applications we can think of include security robots.

The next sort of application that I think is important to retailers is delivery robots. You’ve got a variety of robots, like those from Ottonomy, focused on indoor-outdoor curbside delivery: taking items from pickers and delivering them to the curb, or taking an order a short distance away and then returning to the store. I think this is one potential application for your technology as well.

In the future, it’s a possibility. We just need to be really careful crossing into purely outdoor applications. Curbside is a borderline case, right? You’re still kind of indoors, not fully outdoors. When you go into the outdoor space, regulation starts to creep in, and the safety standards are that much more demanding. That’s why it’s crucial that we build up the technology and the expertise first. The opportunity in the indoor world is so large that we need to tackle it before we jump into any outdoor application.

There’s certainly a lot to be said about Brain Corp’s dominance in the autonomous floor-scrubbing market. There’s an argument that having a single technology, regardless of the application, gives you the economy of scale, as opposed to having an autonomous floor scrubber from company A, a shelf scanning robot from company B and a security robot from company C.

Yeah, that’s why we offer a multipurpose option on our current floor scrubbers. We have a sensor tower that can be mounted on top of existing floor care solutions, so the robot does double duty: floor care and shelf scanning at the same time. We are trying to solve this problem by asking, “Okay, what are the customer’s needs?” If a retail customer needs both shelf scanning and floor care and wants to use one robot, we have a solution for that. We are trying to be mindful of building our portfolio so that we address customer needs at different scales.

Brain Corp recently released the generation three software. Are you upgrading all of your existing fleets to this new generation software, or will they stay on gen two in the field, with only new systems deployed with gen three? When I think about the RaaS business model, you can push software features down to all the endpoints, but you can’t necessarily upgrade all of the units to a new sensor configuration.

Very good question. We feel our generation two units will coexist in the field for a long time, and we are not planning to upgrade existing deployed units to gen three. But when an existing OEM customer such as Tennant wants to build a new robot system to replace an existing one, it will start using generation three in those new products.

Was the new shelf scanning technology that you showed at NRF just a reference design, or are you bringing that directly to the market?

We are working with a couple of hardware OEM partners to commercialize that reference design.

Let’s say a customer comes to you because they want to use Brain Corp software, but they haven’t put an autonomous system together yet. They don’t have an OEM platform yet and they want to start from the ground up and want to build on your technology. Is that an option that you would pursue?

Yes, these are the core building blocks we will provide. We prefer to collaborate closely with the customer or the OEM partner on the market they’re going after, working with them to make sure we have all the pieces of technology needed to capture that market. We are open to new customers. The automated inventory management space is a good example of adjacent applications to floor scrubbing that can be covered with a single platform.

But that does not stop us from entertaining new customers who have ideas for new applications. This is the platform we are trying to promote, and here we are working with our manufacturing partner and with Google Cloud for all of the analytics. That allows us to create a more end-to-end solution for the retailer: capturing the data, then sharing the insights. It delivers a better solution for retailers than has previously been available to them.
