Every year on the third Thursday of May, the world marks Global Accessibility Awareness Day (GAAD). As has become customary in recent years, major tech companies are using this week to share their latest accessibility-minded products. From Apple and Google to Webex and Adobe, the industry’s biggest players have launched new features to make their products easier to use. Here’s a quick roundup of this week’s GAAD news.

Apple’s launches and updates

First up: Apple. The company had a huge set of updates to share, which makes sense since it typically releases most of its accessibility-centric news at this time each year. For 2023, Apple is introducing Assistive Access, an accessibility setting that, when turned on, changes the home screen on iPhone and iPad to a layout with fewer distractions and icons. You can choose a row-based or grid-based layout; the latter results in a 2×3 arrangement of large icons. You decide which apps appear there, and most of Apple’s first-party apps are supported.

The icons themselves are larger than usual and feature high-contrast labels that make them more readable. When you tap into an app, a back button appears at the bottom for easier navigation. Assistive Access also includes a new Calls app that combines Phone and FaceTime features into one customized experience. Messages, Camera, Photos and Music have also been tweaked for the simpler interface, and they all feature high-contrast buttons, large text labels and tools that, according to Apple, “help trusted supporters tailor the experience for the individual they’re supporting.” The goal is to offer a less distracting, less confusing system to those who may find the typical iOS interface overwhelming.

Apple also announced Live Speech this week, which works on iPhone, iPad and Mac. It will let users type what they want to say and have the device read it aloud. It works not only for in-person conversations but also for Phone and FaceTime calls. You’ll also be able to create shortcuts for phrases you use frequently, like “Hi, can I get a tall vanilla latte?” or “Excuse me, where is the bathroom?” The company also introduced Personal Voice, which lets you create a digital voice that sounds like your own. This could help people at risk of losing their ability to speak because of conditions that affect their voice. The setup process involves “reading alongside randomized text prompts for about 15 minutes on iPhone or iPad.”

For people with visual impairments, Apple is adding a new Point and Speak feature to the detection mode in Magnifier. It uses an iPhone or iPad’s camera, LiDAR scanner and on-device machine learning to work out where a person has positioned their finger, scan the target area for words and then read them aloud. For instance, if you hold up your phone and point at different parts of a microwave’s or washing machine’s controls, the system will say what the labels are, such as “Add 30 seconds,” “Defrost” or “Start.”

The company made a slew of other, smaller announcements this week, including updates that let Macs pair directly with Made for iPhone hearing devices, as well as phonetic suggestions for text editing when typing by voice.

Google’s new accessibility tools

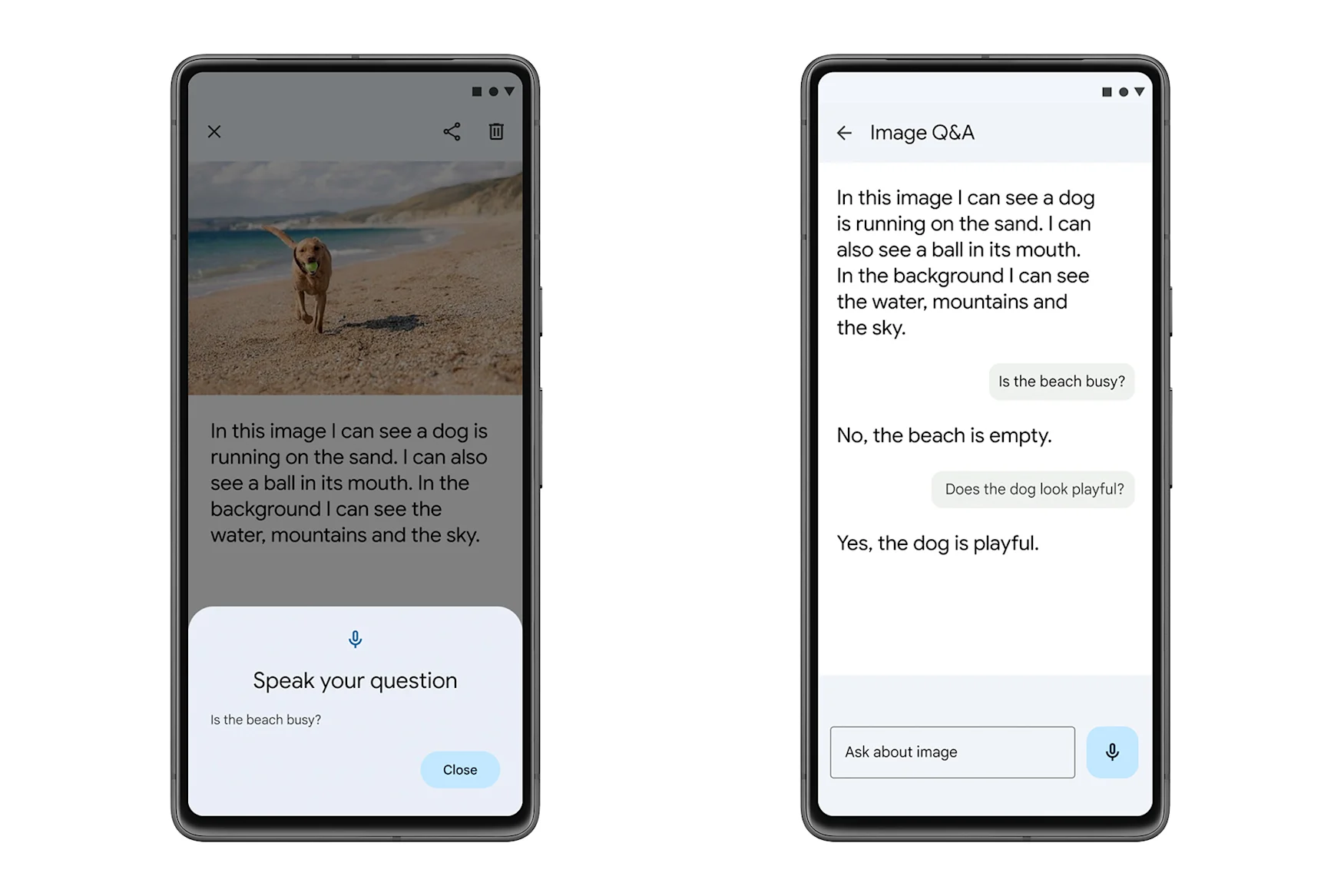

Meanwhile, Google is introducing a new Visual Question and Answer (VQA) tool in the Lookout app, which uses AI to answer follow-up questions about images. Eve Andersson, the company’s accessibility lead and senior director of Products for All, told Engadget in an interview that VQA is the result of a collaboration between the inclusion and DeepMind teams.

To use VQA, you’ll open Lookout and start the Images mode to scan a picture. After the app tells you what’s in the scene, you can ask follow-ups to glean more detail. For example, if Lookout said the image depicts a family having a picnic, you can ask what time of day it is or whether there are trees around them. This lets the user determine how much information they want from a picture, instead of being constrained to an initial description.

It is often tricky to figure out how much detail to include in an image description: you want to provide enough to be helpful but not so much that you overwhelm the user. “What’s the right amount of detail to give to our users in Lookout?” Andersson said. “You never actually know what they want.” Andersson added that AI can help determine the context behind why someone is asking for a description or more information, and then deliver the appropriate details.

When it launches in the fall, VQA could give users a way to decide when to ask for more and when they’ve learned enough. Of course, because it’s powered by AI, the answers it generates might not always be accurate, so there’s no guarantee the tool will work perfectly. Still, it’s an interesting approach that puts power in users’ hands.

Google is also expanding Live Captions to work in French, Italian and German later this year, as well as bringing the wheelchair-friendly labels for places in Maps to more people around the world.

Microsoft, Samsung, Adobe and more

Plenty more companies had news to share this week, including Adobe, which is rolling out a feature that uses AI to automate tagging PDFs so they are friendlier to screen readers. The feature uses Adobe’s Sensei AI and will also indicate the correct reading order. Since this could significantly speed up the tagging process, people and organizations could use the tool to work through stockpiles of old documents and make them more accessible. Adobe is also launching a PDF Accessibility Checker to “enable large organizations to quickly and efficiently evaluate the accessibility of existing PDFs at scale.”

Microsoft also had some small updates to share, specifically around Xbox. It has added new accessibility settings to the Xbox app on PC, including options to disable background images and animations, so users can reduce potentially disruptive, confusing or triggering elements. The company also expanded its support pages and added accessibility filters to its web store to make it easier to find optimized games.

Meanwhile, Samsung announced this week that it’s adding two new levels of ambient sound settings to the Galaxy Buds 2 Pro, bringing the total number of options to five. This gives people who use the earbuds to hear their surroundings finer control over how loud those sounds are. They’ll also be able to select different settings for each ear, adjust clarity levels and create customized profiles for their hearing.

We also learned that Cisco, the company behind the Webex video conferencing software, is teaming up with speech recognition company VoiceITT to add transcriptions that better support people with non-standard speech. This builds on Webex’s existing live translation feature and uses VoiceITT’s AI to learn a person’s speech patterns so it can better understand what they want to communicate. It then transcribes what is said, and the captions appear in a chat bar during calls.

Finally, Mozilla announced that Firefox 113 would be more accessible thanks to an improved screen reader experience, while Netflix released a sizzle reel showcasing some of its latest assistive features and developments over the past year. In its announcement, Netflix said that while it has “made strides in accessibility, [it knows] there is always more work to be done.”

That sentiment holds true not just for Netflix or the tech industry but for the entire world. While it’s nice to see so many companies use this week to release and highlight accessibility-minded features, it’s important to remember that inclusive design should not and cannot be a once-a-year effort. I was also glad to see that, despite the current fervor around generative AI, most companies did not stuff the buzzword into every assistive feature or announcement this week for no good reason. Andersson, for example, said “we’re typically thinking about user needs,” describing a problem-first approach rather than one that starts with a technology and looks for places to apply it.

While it’s probably at least partly true that GAAD announcements are a bit of a PR and marketing game, some of the tools launched this week can genuinely improve the lives of people with disabilities or different needs. I call that a net win.
