Chinese AI startup DeepSeek AI has debuted the DeepSeek LLM family of large language models (LLMs). The family comprises DeepSeek LLM 7B/67B Base and DeepSeek LLM 7B/67B Chat, and these open-source models mark a notable step forward in language comprehension and general-purpose application.

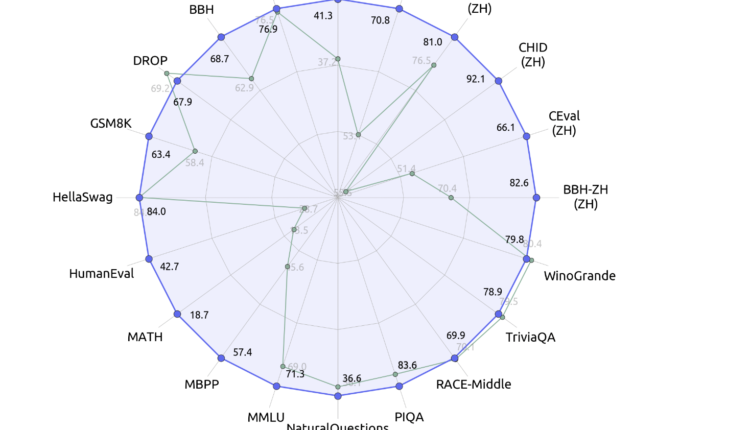

One of the standout features of DeepSeek’s LLMs is that the 67B Base model outperforms Llama 2 70B Base in reasoning, coding, mathematics, and Chinese comprehension.

These results suggest that DeepSeek LLMs are proficient across a wide range of applications. Particularly noteworthy is DeepSeek LLM 67B Chat, which achieved a 73.78% pass rate on the HumanEval coding benchmark, surpassing models of similar size, and scored 84.1% on the GSM8K mathematics dataset in a zero-shot setting, that is, without worked examples in the prompt.
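HumanEval results are conventionally reported as pass@k: the probability that at least one of k generated programs passes the problem's unit tests. The article does not say how many completions DeepSeek sampled per problem, so the sketch below is the generic unbiased estimator from the HumanEval/Codex literature, not DeepSeek's evaluation code; the function names are illustrative.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: completions sampled for the problem
    c: how many of those completions passed the unit tests
    k: number of attempts being scored
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must contain at least one passing completion.
        return 1.0
    # 1 minus the chance that a random size-k subset contains only failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates; results holds one (n, c) pair per problem."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    if not scores:
        raise ValueError("no problems to score")
    return sum(scores) / len(scores)
```

With a single greedy completion per problem (n = 1, k = 1), pass@1 reduces to the fraction of problems solved, which is how a single percentage such as 73.78% is usually read.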

DeepSeek AI’s decision to open-source both the 7-billion- and 67-billion-parameter versions of its models, including the base and chat variants, aims to foster AI research and commercial applications.

To reduce the risk of benchmark contamination and get a fairer assessment, DeepSeek AI designed fresh evaluation sets, including the Hungarian National High-School Exam and Google’s instruction-following evaluation dataset. These evaluations were intended to show how well the models handle exams and tasks they had not seen during training.
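Google's instruction-following dataset (IFEval) scores responses against instructions that a program can verify, such as word limits or required keywords, and reports both prompt-level accuracy (every instruction in a prompt followed) and instruction-level accuracy. The sketch below imitates that idea under simplifying assumptions: the three instruction kinds and all function names are hypothetical stand-ins, not the benchmark's actual checkers.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Checker = Callable[[str, Dict[str, Any]], bool]

# Hypothetical instruction kinds; the real benchmark defines its own, larger set.
CHECKERS: Dict[str, Checker] = {
    # At most params["limit"] whitespace-separated words.
    "max_words": lambda resp, p: len(resp.split()) <= p["limit"],
    # Every keyword must appear somewhere (case-insensitive).
    "include_keywords": lambda resp, p: all(kw.lower() in resp.lower() for kw in p["keywords"]),
    # No uppercase letters anywhere in the response.
    "all_lowercase": lambda resp, p: resp == resp.lower(),
}


@dataclass
class Instruction:
    kind: str
    params: Dict[str, Any] = field(default_factory=dict)


def check(response: str, instructions: List[Instruction]) -> List[bool]:
    """One pass/fail flag per instruction attached to a prompt."""
    return [CHECKERS[ins.kind](response, ins.params) for ins in instructions]


def score(responses: List[str], prompts: List[List[Instruction]]) -> Dict[str, float]:
    """Prompt-level: all instructions followed. Instruction-level: share of single instructions followed."""
    if not responses or len(responses) != len(prompts):
        raise ValueError("need one non-empty list of responses matching the prompts")
    flags = [check(r, ins) for r, ins in zip(responses, prompts)]
    prompt_level = sum(all(f) for f in flags) / len(flags)
    total = sum(len(f) for f in flags)
    instruction_level = sum(sum(f) for f in flags) / total if total else 0.0
    return {"prompt_level": prompt_level, "instruction_level": instruction_level}
```

Because each check is deterministic code rather than a judge model, the score is reproducible, which suits the goal of testing models on tasks they have not been tuned for.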

The startup also described its data collection and training process, which aimed to increase data diversity and originality while respecting intellectual property rights. Its multi-step pipeline curated high-quality text, mathematical content, code, literary works, and other data types, and applied filters to remove toxic and duplicate content.
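The article does not say how DeepSeek detected duplicates. MinHash is one widely used way to find near-duplicate documents: it compares compact signatures instead of full texts, and the fraction of matching signature slots estimates the Jaccard similarity of the documents' word n-gram sets. The sketch below assumes 5-word shingles, 64 seeded hashes, and a 0.8 similarity threshold; all of these values and the function names are illustrative choices, not DeepSeek's settings.

```python
import hashlib
from typing import List, Set


def shingles(text: str, n: int = 5) -> Set[str]:
    """Lower-cased word n-grams; texts shorter than n words become one shingle."""
    words = text.lower().split()
    if len(words) < n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def _hash(seed: int, shingle: str) -> int:
    # Seeding the hash simulates one random permutation per seed.
    digest = hashlib.blake2b(f"{seed}:{shingle}".encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def minhash_signature(text: str, num_perm: int = 64, n: int = 5) -> List[int]:
    """Minimum hash of the shingle set under each of num_perm seeded hashes."""
    grams = shingles(text, n)
    return [min(_hash(seed, g) for g in grams) for seed in range(num_perm)]


def estimated_jaccard(sig_a: List[int], sig_b: List[int]) -> float:
    """Fraction of equal slots approximates the Jaccard similarity of the shingle sets."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs: List[str], threshold: float = 0.8, num_perm: int = 64, n: int = 5) -> List[str]:
    """Keep the first document of each near-duplicate group (pairwise comparison, O(N^2))."""
    kept: List[str] = []
    kept_sigs: List[List[int]] = []
    for doc in docs:
        sig = minhash_signature(doc, num_perm, n)
        if any(estimated_jaccard(sig, s) >= threshold for s in kept_sigs):
            continue  # near-duplicate of something already kept
        kept.append(doc)
        kept_sigs.append(sig)
    return kept
```

Pairwise comparison is only practical for small collections; pipelines working at web scale typically bucket signatures with locality-sensitive hashing (LSH) so that only likely duplicates are compared.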

DeepSeek’s language models use architectures similar to LLaMA and were pre-trained at scale. The 7B model uses Multi-Head Attention (MHA), while the 67B model uses Grouped-Query Attention (GQA). Training used large batch sizes and a multi-step learning rate schedule, in which the learning rate drops in discrete steps at set points in training rather than decaying continuously, with the aim of keeping training stable and efficient.
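The difference between the two attention variants comes down to how many key/value heads the query heads read from. In MHA every query head has its own keys and values; in GQA, groups of query heads share one key/value head, which shrinks the key/value cache kept during generation by the ratio of query heads to key/value heads. The pure-Python sketch below illustrates that mechanism with a causal mask; head counts, shapes, and function names are hypothetical, and it omits projections, positional encodings, and batching.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows are token positions, columns are feature dimensions


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q: Matrix, k: Matrix, v: Matrix, causal: bool = True) -> Matrix:
    """Scaled dot-product attention for one head; with causal=True, position i sees keys 0..i."""
    scale = 1.0 / math.sqrt(len(q[0]))
    dim_v = len(v[0])
    out: Matrix = []
    for i, qi in enumerate(q):
        visible = k[: i + 1] if causal else k
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in visible]
        weights = softmax(scores)
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(dim_v)])
    return out


def grouped_query_attention(qs: List[Matrix], ks: List[Matrix], vs: List[Matrix],
                            causal: bool = True) -> List[Matrix]:
    """len(qs) query heads share len(ks) key/value heads.

    len(ks) == len(qs) is ordinary multi-head attention (MHA);
    len(ks) == 1 is multi-query attention (MQA).
    """
    n_q, n_kv = len(qs), len(ks)
    if n_kv == 0 or n_q % n_kv != 0 or len(vs) != n_kv:
        raise ValueError("query heads must be a multiple of key/value heads")
    group_size = n_q // n_kv
    # Query head h reads key/value head h // group_size, so consecutive query heads share K/V.
    return [attend(qs[h], ks[h // group_size], vs[h // group_size], causal) for h in range(n_q)]


def kv_cache_ratio(n_q_heads: int, n_kv_heads: int) -> float:
    """How many times smaller the key/value cache is under GQA than under MHA."""
    return n_q_heads / n_kv_heads
```

Since MHA is just the case where the two head counts are equal, a single code path can describe both the 7B and the 67B attention layouts; only the number of key/value heads changes.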

With the release of these open-source LLMs, DeepSeek AI has reached a notable milestone in language understanding and AI accessibility, opening the door to further innovation and broader applications in the field.

Niharika is a technical consulting intern at Marktechpost. She is a third-year undergraduate pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is an enthusiastic individual with a keen interest in machine learning, data science, and AI, and an avid reader of the latest developments in these fields.

