Welcome to old man grumpus’ No Fun AI Is Junk Hour! Today I’ll be grumbling about the rise of OpenAI’s chatbot, a truly miraculous piece of software that, in the end, is a lot like the Wizard of Oz if Oscar Zoroaster Phadrig Isaac Norman Henkle Emmannuel Ambroise Diggs (the Wizard’s real name!) was actually a statistical sampling algorithm and instead of a curtain it hid behind an OAuth screen.

First, you need to try this thing. Head over to Chat.OpenAI.com and give it a go. I’ll wait.

These tools can create art, writing, poetry, and song. They can talk to you and summarize text. You could feasibly run an entire blog using these tools and I’ve been extremely tempted to create an entire news organization based on AI-generated content. My friend Natasha asked the AI to write some lovely odes to Elon Musk. The chatbot spat out:

But do I want my robot to write poetry? Do I want a news org that uses AI content? Do I trust this stuff?

The answer is “Maybe!”

The answer is “Maybe” because this seems like a great tool. But is it a good tool?

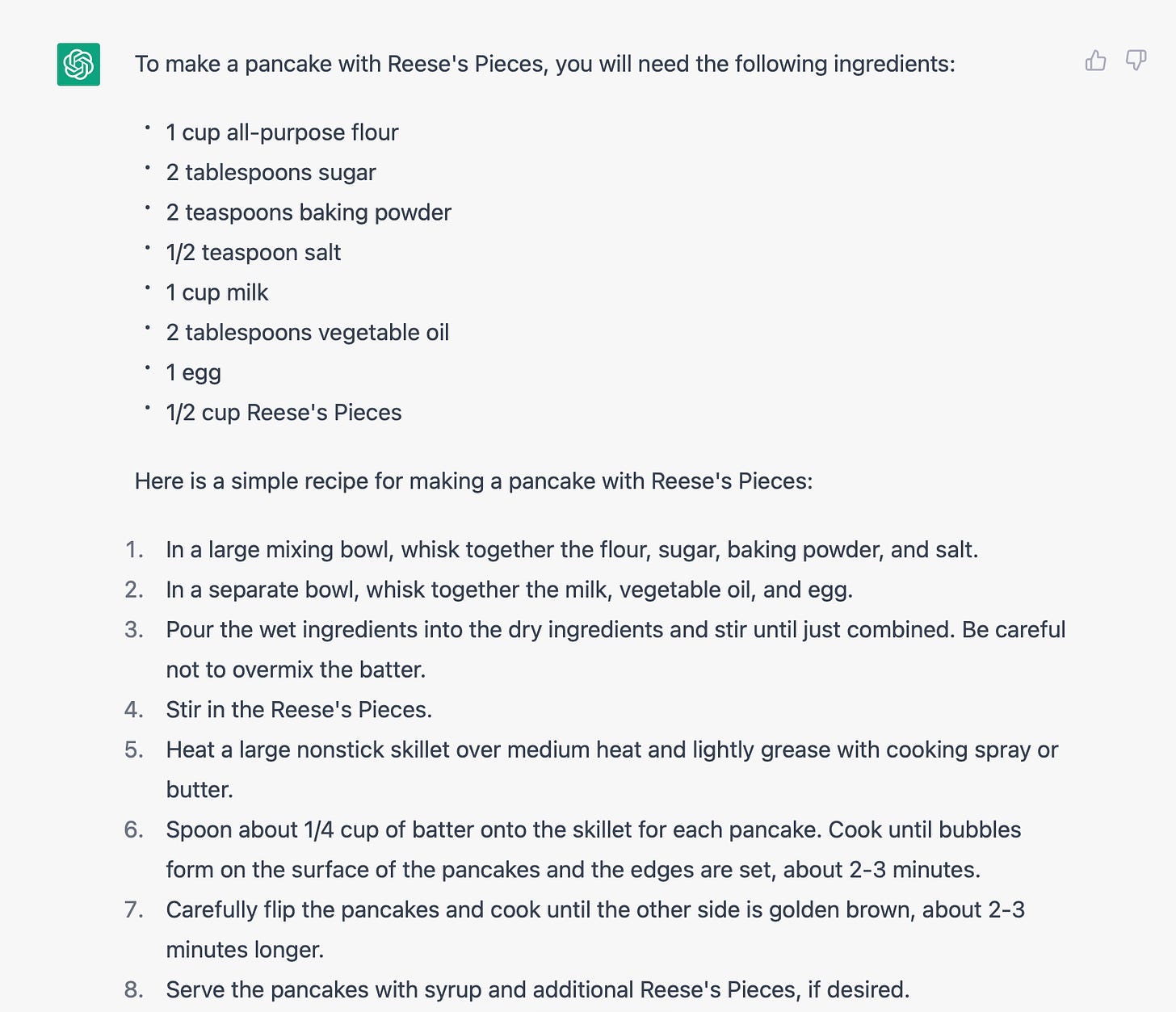

At base we see a few things happening. First, this is a really good search engine. We can ask the chatbot for information – how do you make a Reese’s Pieces pancake? – and it will provide a valid response. In fact, it does this surprisingly well:

This recipe is, in theory, new information. After a decidedly quick search, I found that no one has written a blog post or recipe about these particular pancakes. It’s pretty trivial to make these pancakes – you just add Reese’s Pieces to a regular pancake recipe – but that single change, namely the addition of step 4 and 1/2 cup of Pieces, is pretty interesting. In short, it looks like we’re experiencing actual intelligence.

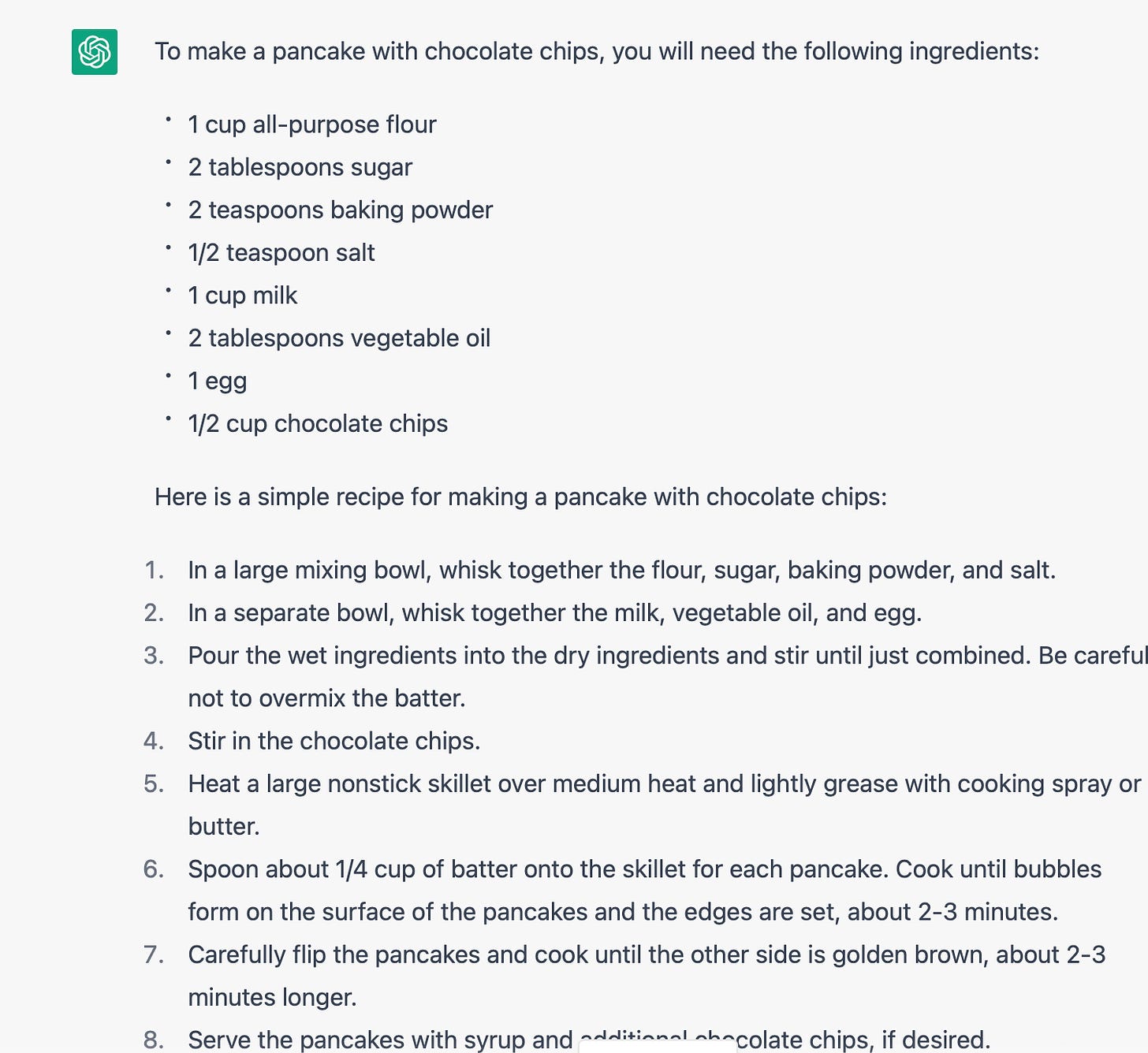

But, that is not the case. This is a standard recipe with the addition of one line or, in reality, the substitution of a common ingredient for an uncommon ingredient. You can almost smell the wires burning here: This boring human needs to know how to make pancakes with something in them. That something is Reese’s Pieces. I don’t know what those are but they seem to be semantically similar to chocolate chips. So let’s swap them out. To get a recipe for pancakes we’ll search the web and copy it. Then, when ready, we’ll edit the recipe and post it as new information. The dumb human won’t know the difference.

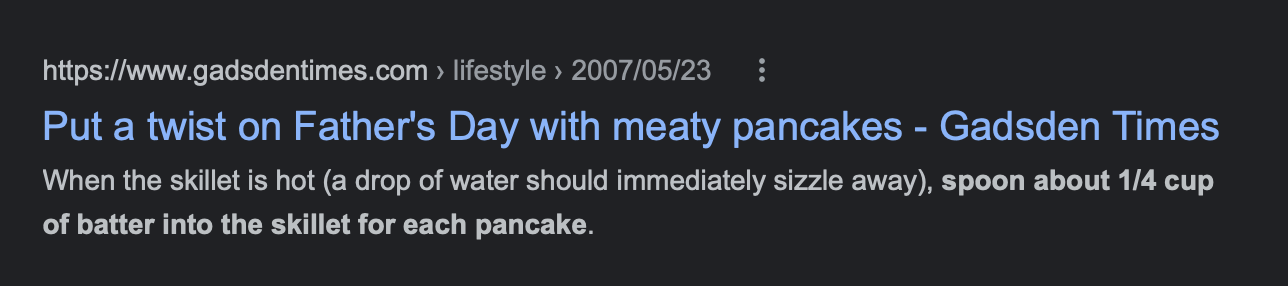

In fact, you can find some evidence that the AI is stealing lines from other recipes. It is smart enough to change one or two words to make sure it’s not plagiarizing but come on:

Further, this information is not new. It’s remixed. How do we know? Because this is what you get when you look for chocolate chip pancakes. The AI knows one thing: what a recipe for pancakes looks like. The rest is just statistical inference.
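To make "statistical inference" a little more concrete, here is a toy Python sketch. It is emphatically not how OpenAI's chatbot works under the hood; it is a tiny word-pair counter I'm using as a stand-in, and the three-line `CORPUS` and the function names `train_bigrams` and `continue_text` are made up for illustration. It learns which word tends to follow which, parrots back the most likely sentence, and then swaps one ingredient for another.

```python
from collections import Counter, defaultdict

# Hypothetical three-line "training set"; a real model sees vastly more text.
CORPUS = [
    "fold chocolate chips into pancake batter",
    "stir chocolate chips into pancake batter",
    "fold chocolate chunks into muffin batter",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def continue_text(counts, start, max_words=8):
    """Greedily append the most frequent next word until none is known."""
    words = [start]
    while len(words) < max_words and counts[words[-1]]:
        next_word = counts[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


counts = train_bigrams(CORPUS)
line = continue_text(counts, "fold")
print(line)

# The "new" recipe: same sentence, one ingredient swapped for another.
print(line.replace("chocolate chips", "Reese's Pieces"))
```

The first line printed is just the most common path through the counts, and the second is that same path with a find-and-replace. Nothing in there knows what a pancake is.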

So what is going on here and how does this affect us? First, content creators are in trouble. I’m serious. Content, at its core, is about pleasure. Sure, we treat content like some sacred product used to spread information and learning, but in reality, writing, drawing, and illustrating are about pleasing the reader. This stuff is designed to be good in a very general way. As Robert M. Pirsig writes in Zen and the Art of Motorcycle Maintenance, the idea of quality is simultaneously definable and indefinable:

“Quality…you know what it is, yet you don’t know what it is. But that’s self-contradictory. But some things are better than others, that is, they have more quality. But when you try to say what the quality is, apart from the things that have it, it all goes poof! There’s nothing to talk about. But if you can’t say what Quality is, how do you know what it is, or how do you know that it even exists? If no one knows what it is, then for all practical purposes it doesn’t exist at all. But for all practical purposes it really does exist.”

You know it when you see it. And this ain’t it. Further, we know these tools are based entirely on statistics. As writer and technologist Lionel Dricot notes:

What we are witnessing is thus not “artificial creativity” but a simple “statistical mean of everything uploaded by humans on the internet which fits certain criteria”. It looks nice. It looks fantastic.

While they are exciting because they are new, those creations are basically random statistical noise tailored to be liked. Facebook created algorithms to show us the content that will engage us the most. Algorithms are able to create out of nowhere this very engaging content. That’s exactly why you are finding the results fascinating. Those are pictures and text that have the maximal probability of fascinating us. They are designed that way.

In short, the value of these things is clear in that they are not valuable at all. They are a means to an end but not an end at all. If that doesn’t make sense that’s because I’m not a philosopher and I haven’t taken enough shrooms today to really get a handle on the ineffable problem with this content.

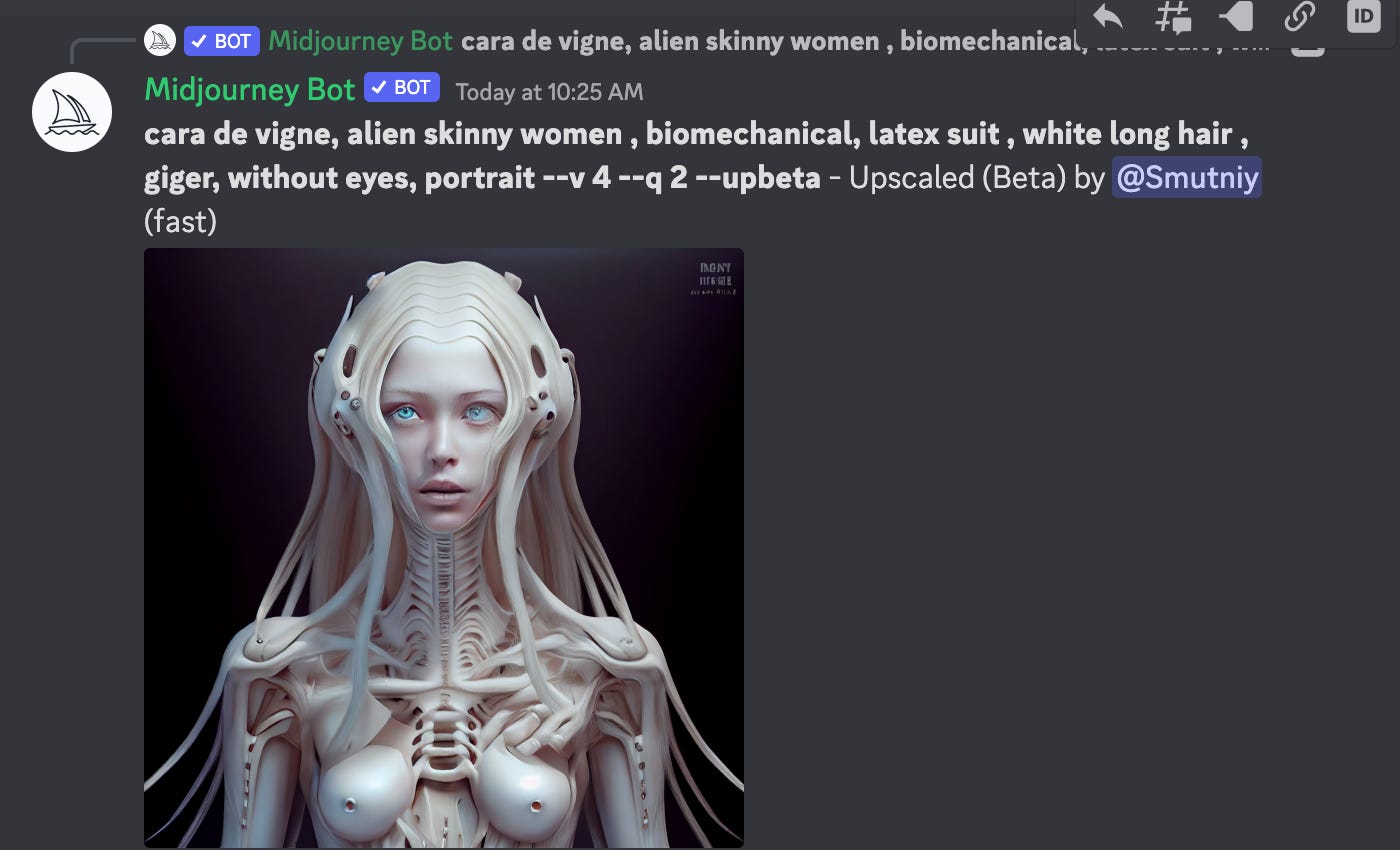

The replies our chatbot is giving us look amazing. They appear to be “correct” and they are useful. The same can be said of graphic generation AI like Midjourney. You can get some amazing stuff out of these bots. Check this out:

But is it art? The prompts we give the AI are very simplistic and the bot infers quite a bit about our desires. For example, it gave that robot lady nipples and ignored the request to cut out her eyes. The AI basically said: “You wanted a scary alien woman with weird Giger hair. Here.” The result is pretty but unusable in any real context.

In short, the robots are making stuff we’ll like, just like the food additive manufacturers perfected grape flavoring so we now all simultaneously crave and hate it. Is it art? Is it grape? Who cares? It’s tasty.

But here’s the rub: every step the AI takes towards creativity, the humans will counterreact. If sci-fi has taught us anything, neoprim Luddite crustpunks will always smash the engines of creation. We’ll get better at creating art by augmenting our toolsets with AI. We’ll get better at writing by creating skeletons in GPT-3 and writing to suit. You could feasibly use that image above as a book cover or the main image of a blog post. It works. But if you wanted something unique, something creative, you’d send an artist that prompt and ask them to create a whole new character using those traits. The Uncanny Valley effect is strong here. The art is so perfect that it is unreal. The result is something that we simultaneously love and abhor. Humans will always do a better job (for now).

How would I use this tool? If it were any good I’d use it to help me write. Unfortunately, it’s not very good and I’m pretty safe for now. That said, it doesn’t know how to plot a good, if derivative, vampire story.

I also asked the robot to write this post in my style. The results, below, weren’t great. But, again, who cares. We’re all just having fun, right?

Right?

The false promise of AI has been a hot topic in the tech world for quite some time now. Despite all the hype and buzz surrounding AI, it has yet to deliver on many of its promises.

One of the biggest misconceptions about AI is that it will be able to solve all of our problems. Many people believe that AI will be able to take on tasks that humans can’t, or don’t want to do. This is simply not the case.

AI is not capable of creating new ideas or solving complex problems on its own. It is only able to analyze and interpret data that has been fed to it. This means that AI is limited to the information that has been provided to it, and cannot think outside of the box.

Another false promise of AI is that it will replace human jobs. While it is true that AI can automate certain tasks, it is not capable of replacing entire job functions. In fact, many experts believe that AI will create new job opportunities, rather than taking them away.

Furthermore, AI is not without its flaws. It is often criticized for its lack of accountability and transparency. This can lead to biases and discrimination in AI systems, which can have negative consequences for society.

In conclusion, while AI has made some advancements in recent years, it is not a magic solution to all of our problems. It is important to approach AI with caution and realistic expectations, rather than buying into the false promises of its capabilities.

John Biggs is an Editor at Large at Grit Daily. His work has appeared in the New York Times, Gizmodo, Men’s Health, Popular Science, Sync, The Stir, and Grit Daily and he’s written multiple books including Black Hat and Bloggers Boot Camp.
