Large Language Models (LLMs) are currently one of the most discussed topics in mainstream AI, and developers worldwide are exploring their potential applications. LLMs are AI models that use deep learning techniques and vast amounts of training data to understand, summarize, predict, and generate content. Text is their core modality, and multimodal variants extend this to audio, images, video, and more.

Large language models are intricate AI systems. Developing one is a demanding task, and building an application that harnesses an LLM's capabilities is equally challenging. Designing, implementing, and optimizing a workflow that taps the model's full potential takes significant expertise, effort, and resources. Because setting up these workflows is so costly, automating them holds immense value, especially since workflows are expected to grow more complex as developers build increasingly sophisticated LLM-based applications. The design space for such workflows is also large and intricate, which makes it even harder to craft an optimal, robust workflow that meets performance expectations.

AutoGen is a framework developed by Microsoft that aims to simplify the orchestration and optimization of LLM workflows by introducing automation into the workflow pipeline. AutoGen offers conversable, customizable agents that leverage advanced LLMs like GPT-3 and GPT-4, while addressing the current limitations of those models by integrating them with tools and human input, using automated chats to drive conversations between multiple agents.

With AutoGen, developing a complex multi-agent conversation system takes two steps:

Step 1: Define a set of agents, each with its own role and capabilities.

Step 2: Define the interaction behavior between agents, i.e., what an agent should reply when it receives a message from another agent.
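To make the two steps concrete, here is a minimal sketch in plain Python. It is not AutoGen's API: the `AGENTS` and `RULES` tables, the agent names, and the `converse` helper are hypothetical, chosen only to show that step 1 declares who the agents are and step 2 declares how each one replies to the other.

```python
from typing import Callable, Dict, List, Tuple

# Step 1: a set of agents, each with a role (hypothetical names and roles).
AGENTS: Dict[str, str] = {
    "writer": "writes code that answers the user's question",
    "reviewer": "checks the code and approves it or asks for changes",
}

# Step 2: interaction behavior. For each (receiver, sender) pair, a rule maps
# the incoming message to the receiver's reply; an empty reply ends the chat.
Rule = Callable[[str], str]
RULES: Dict[Tuple[str, str], Rule] = {
    ("reviewer", "writer"): lambda msg: "approved" if "def " in msg else "please write a function",
    ("writer", "reviewer"): lambda msg: "" if msg == "approved" else "def answer(): return 42",
}


def converse(first: str, second: str, opening: str, max_turns: int = 6) -> List[str]:
    """Let two declared agents exchange messages according to RULES."""
    if first not in AGENTS or second not in AGENTS:
        raise KeyError("both participants must be declared in AGENTS")
    transcript = [f"{first}: {opening}"]
    sender, receiver, message = first, second, opening
    for _ in range(max_turns):
        reply = RULES[(receiver, sender)](message)
        if not reply:  # the receiver has nothing more to say
            break
        transcript.append(f"{receiver}: {reply}")
        sender, receiver, message = receiver, sender, reply
    return transcript


for line in converse("writer", "reviewer", "answer = 42"):
    print(line)
```

Running it prints a four-line exchange: the reviewer first asks for a function, the writer revises, and the reviewer approves. Adding an agent means adding a row to `AGENTS` and the rules it needs, without touching `converse`.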

Both steps are modular and intuitive, which makes these agents composable and reusable. The figure below shows a sample workflow for code-based question answering in supply-chain optimization. The Writer first writes the code and its interpretation, the Safeguard checks the code for privacy and safety, and the Commander executes the code once it has received clearance. If the system encounters an issue at runtime, the process repeats until the issue is resolved. Deploying this workflow in applications like supply-chain optimization reduces the amount of manual interaction by 3x to 10x, and using AutoGen also reduces coding effort by up to 4x.
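The retry loop described above can be sketched as follows. This is a hypothetical illustration, not OptiGuide or AutoGen code: `commander_loop` plays the Commander, while `toy_writer`, `toy_safeguard`, and `toy_execute` are stand-ins for an LLM-backed Writer, an LLM-backed Safeguard, and a code executor. The toy executor calls `exec`, which a real system would replace with a sandbox.

```python
from typing import Callable, List, Optional, Tuple


def commander_loop(
    question: str,
    write: Callable[[str, Optional[str]], str],  # Writer: (question, last error) -> code
    is_safe: Callable[[str], bool],              # Safeguard: clears or rejects the code
    execute: Callable[[str], Tuple[bool, str]],  # runs code -> (succeeded, output or error)
    max_rounds: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Commander: repeat write, check, execute until the code runs cleanly."""
    log: List[str] = []
    error: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        code = write(question, error)
        if not is_safe(code):
            error = "code rejected by safeguard"
            log.append(f"round {round_no}: {error}")
            continue
        ok, result = execute(code)
        log.append(f"round {round_no}: " + ("ok" if ok else f"error: {result}"))
        if ok:
            return result, log
        error = result  # feed the runtime error back to the Writer
    return None, log


# Toy stand-ins (hypothetical); a real Writer and Safeguard would be LLM-backed.
def toy_writer(question: str, last_error: Optional[str]) -> str:
    if last_error is None:
        return "import os; os.remove('data.csv')"  # unsafe first draft
    if last_error == "code rejected by safeguard":
        return "result = 10 / 0"                    # safe but buggy
    return "result = 10 / 2"                        # fixed after seeing the error


def toy_safeguard(code: str) -> bool:
    return "os.remove" not in code


def toy_execute(code: str) -> Tuple[bool, str]:
    scope: dict = {}
    try:
        exec(code, {}, scope)  # demo only: never exec untrusted code unsandboxed
        return True, str(scope["result"])
    except Exception as exc:
        return False, type(exc).__name__


answer, log = commander_loop("What is the ratio?", toy_writer, toy_safeguard, toy_execute)
```

Here the loop needs three rounds: the Safeguard rejects the first draft, the second draft fails at runtime with a `ZeroDivisionError`, and the third runs cleanly, so `answer` is `"5.0"`.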

AutoGen could be a game changer for developing complex applications that leverage LLMs. It can reduce both the manual interaction needed to reach the desired results and the coding effort needed to build such applications, which speeds up development and cuts the time, effort, and resources these applications require.

In this article, we take a deeper dive into AutoGen and explore its essential components, its architecture, and its potential applications. So let’s begin.

AutoGen is an open-source framework developed by Microsoft that lets developers build LLM applications out of multiple agents that converse with one another to execute the desired tasks. Agents in AutoGen are conversable and customizable, and they can operate in different modes that combine tools, human input, and LLMs. Developers also use AutoGen to define the agents' interaction behavior, programming flexible conversation patterns with both computer code and natural language. As a result, AutoGen serves as a generic framework for building LLM-powered applications and frameworks of varying complexity.

LLMs play a crucial role in building agents that adapt to new observations, use tools, and reason across numerous real-world applications. But developing applications that leverage the full potential of LLMs is complex, and with the ever-increasing demand for LLMs and growing task complexity, it is vital to scale up these agents by using multiple agents that work in concert. How can a multi-agent approach be used to develop LLM-based applications for a wide array of domains with varying complexity? AutoGen's answer is multi-agent conversation.

AutoGen: Components and Framework

To reduce the effort developers need to build complex LLM applications across a wide array of domains, AutoGen's fundamental principle is to consolidate and streamline multi-agent workflows through multi-agent conversations, which also maximizes the reusability of the implemented agents. The framework is built on two fundamental concepts: Conversable Agents and Conversation Programming.

Conversable Agents

A conversable agent in AutoGen is an entity with a predefined role that can send and receive messages to and from other conversable agents. It maintains its internal context based on the messages it sends and receives, and developers can configure it with a unique set of capabilities, such as being backed by LLMs or tools, or taking human input.

Agent Capabilities Powered by Humans, Tools, and LLMs

An agent’s capabilities directly determine how it processes and responds to messages, which is why AutoGen gives developers the flexibility to endow their agents with various capabilities. AutoGen supports several common composable capabilities for agents, including:

- LLMs: LLM-backed agents exploit capabilities of advanced LLMs such as implicit state inference, role-playing, providing feedback, and even coding. Developers can use novel prompting techniques to combine these capabilities and increase an agent's autonomy or skill.

- Humans: Many applications need or benefit from some degree of human involvement. AutoGen facilitates human participation in agent conversations through human-backed agents, which can solicit human input during certain rounds of a conversation, depending on the agent's configuration.

- Tools: Tool-backed agents can execute tools through code execution or function execution.
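One way to picture these composable capabilities is an agent that holds an ordered list of back ends and lets the first one that produces a reply answer. The sketch below is hypothetical (none of these names come from AutoGen): the LLM back end is a stub function, the human back end takes any callable in place of real console input, and the tool back end recognizes an invented `call <tool> <args>` message format.

```python
from typing import Callable, Dict, List, Optional

# A back end inspects a message and returns a reply, or None to pass it on.
Backend = Callable[[str], Optional[str]]


def tool_backend(tools: Dict[str, Callable[..., object]]) -> Backend:
    """Run a registered function for messages like 'call add 2 3' (invented format)."""
    def reply(message: str) -> Optional[str]:
        parts = message.split()
        if len(parts) >= 2 and parts[0] == "call" and parts[1] in tools:
            return str(tools[parts[1]](*parts[2:]))
        return None  # not a tool request
    return reply


def human_backend(ask: Callable[[str], str], enabled: bool) -> Backend:
    """Solicit human input only when the agent is configured to do so."""
    return lambda message: ask(message) if enabled else None


def llm_backend(complete: Callable[[str], str]) -> Backend:
    """Delegate to a (stubbed) LLM completion function."""
    return lambda message: complete(message)


class ComposableAgent:
    def __init__(self, name: str, backends: List[Backend]):
        self.name = name
        self.backends = backends  # tried in order

    def reply(self, message: str) -> Optional[str]:
        for backend in self.backends:
            answer = backend(message)
            if answer is not None:
                return answer
        return None


helper = ComposableAgent("helper", [
    tool_backend({"add": lambda a, b: int(a) + int(b)}),
    human_backend(lambda m: "a human answered", enabled=False),
    llm_backend(lambda m: f"[llm] {m}"),
])
print(helper.reply("call add 2 3"))
print(helper.reply("hello"))
```

With the human back end switched off, a tool request is answered by the tool and everything else falls through to the LLM stub. Reordering or swapping entries in the list changes the agent's behavior without touching the class.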

Agent Cooperation and Customization

Depending on an application's specific needs, developers can configure individual agents with a combination of these back-end types to exhibit the complex behavior involved in multi-agent conversations. AutoGen makes it easy to create agents with specialized roles and capabilities by extending or reusing the built-in agents. The figure attached below shows the basic structure of the built-in agents. ConversableAgent is the highest-level agent abstraction and can use humans, tools, and LLMs by default. UserProxyAgent and AssistantAgent are pre-configured subclasses of ConversableAgent, each representing a common usage mode: AssistantAgent acts as an AI assistant (backed by LLMs), while UserProxyAgent acts as a human proxy that solicits human input or executes function calls and code (backed by humans and/or tools).
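The preset relationship can be sketched as a base class plus two subclasses that only change default capabilities. The class names carry a `Sketch` suffix because they are hypothetical stand-ins, not AutoGen's `ConversableAgent`, `AssistantAgent`, or `UserProxyAgent`, whose real constructors take different arguments; only the pattern of presets is meant to carry over.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConversableAgentSketch:
    """Highest-level abstraction: may use an LLM, a human, and tools."""
    name: str
    use_llm: bool = True
    use_human: bool = True
    use_tools: bool = True

    def capabilities(self) -> List[str]:
        flags = {"llm": self.use_llm, "human": self.use_human, "tools": self.use_tools}
        return [cap for cap, enabled in flags.items() if enabled]


class AssistantAgentSketch(ConversableAgentSketch):
    """Preset: an AI assistant backed by an LLM only."""
    def __init__(self, name: str):
        super().__init__(name, use_llm=True, use_human=False, use_tools=False)


class UserProxyAgentSketch(ConversableAgentSketch):
    """Preset: a human proxy that solicits input and executes code or function calls."""
    def __init__(self, name: str):
        super().__init__(name, use_llm=False, use_human=True, use_tools=True)


for agent in (ConversableAgentSketch("base"), AssistantAgentSketch("assistant"),
              UserProxyAgentSketch("user_proxy")):
    print(agent.name, agent.capabilities())
```

Because the presets are just subclasses with different defaults, a specialized agent can be created the same way, by subclassing either preset or the base class.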

The figure below shows how developers can use AutoGen to build a two-agent system with a custom reply function, together with the automated agent chat that the two-agent system produces when the program runs.

Because they let custom agents converse with one another, conversable agents are a fundamental building block in AutoGen. However, developers still need to specify and shape these multi-agent conversations so that the agents can make substantial progress on the specified tasks.

Conversation Programming

To solve this problem, AutoGen uses conversation programming, a paradigm built on two essential concepts: computation, the actions agents take in a multi-agent conversation to compute their responses, and control flow, the conditions or sequence under which these computations take place. Being able to program both lets developers implement many flexible multi-agent conversation patterns. In AutoGen, computation is conversation-centric: the actions an agent takes are relevant to the conversations it is involved in, and those actions produce messages for subsequent conversations until a termination condition is satisfied. Control flow is conversation-driven as well: the participating agents decide which agents to send messages to, and the procedure of computation follows from the inter-agent conversation.
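A small sketch can separate the two ingredients. Each agent's step function is the computation (it reads the conversation and produces a reply), and the recipient it names is the control flow; a termination check ends the loop. The agents, the message format, and `run_conversation` are hypothetical and only illustrate the paradigm, not AutoGen's implementation.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Computation: read the conversation, return (next recipient or None, reply).
Step = Callable[[List[str]], Tuple[Optional[str], str]]


def run_conversation(agents: Dict[str, Step], start: str, first_message: str,
                     is_terminal: Callable[[str], bool], max_messages: int = 20) -> List[str]:
    """Route messages between agents until a termination condition holds."""
    history = [first_message]
    current = start
    while len(history) < max_messages:
        recipient, reply = agents[current](history)
        history.append(f"{current}: {reply}")
        # Control flow: the agent itself chose who speaks next (None = stop).
        if recipient is None or is_terminal(reply):
            break
        current = recipient
    return history


def last_number(history: List[str]) -> int:
    """Parse the number at the end of the latest message."""
    return int(history[-1].split()[-1])


def doubler(history: List[str]) -> Tuple[Optional[str], str]:
    return "checker", str(last_number(history) * 2)


def checker(history: List[str]) -> Tuple[Optional[str], str]:
    n = last_number(history)
    return (None, "TERMINATE") if n >= 20 else ("doubler", f"keep going {n}")


conversation = run_conversation({"doubler": doubler, "checker": checker},
                                "doubler", "start 3", lambda reply: reply == "TERMINATE")
```

Starting from 3, the doubler and the checker pass the value back and forth (6, 12, 24) until the checker's rule fires and it replies `TERMINATE`.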

The figure above gives a simple illustration of how individual agents perform their role-specific, conversation-centric computations to generate the desired responses, such as code execution and LLM inference calls. The task progresses through the conversations displayed in the dialog box.
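To show an agent whose role-specific computation mixes an LLM inference call with code execution, here is a hedged sketch. The LLM is a stub (`fake_llm`), the `RUN:` line convention for requesting code execution is invented for this example, and evaluation is restricted to simple arithmetic; a real agent would call a model and run code in a sandbox.

```python
from typing import Callable, List

# Natural-language instruction the (stubbed) LLM is expected to follow.
SYSTEM_PROMPT = "When the task is complete, end your reply with TERMINATE."


def is_termination_msg(message: str) -> bool:
    # A plain code rule decides when the conversation stops.
    return message.rstrip().endswith("TERMINATE")


def execute_requests(message: str, run_code: Callable[[str], str]) -> str:
    # Lines of the invented form "RUN: <code>" are handed to code execution.
    outputs = [run_code(line[len("RUN:"):].strip())
               for line in message.splitlines() if line.startswith("RUN:")]
    return "\n".join(outputs) if outputs else "(no code to run)"


def chat(llm: Callable[[str, List[str]], str], run_code: Callable[[str], str],
         max_turns: int = 5) -> List[str]:
    transcript: List[str] = []
    for _ in range(max_turns):
        reply = llm(SYSTEM_PROMPT, transcript)                 # LLM inference call
        transcript.append(reply)
        if is_termination_msg(reply):
            break
        transcript.append(execute_requests(reply, run_code))  # code execution
    return transcript


def fake_llm(system_prompt: str, transcript: List[str]) -> str:
    if not transcript:
        return "Let me compute this.\nRUN: 6 * 7"
    return f"The answer is {transcript[-1]}. TERMINATE"


def run_arithmetic(expr: str) -> str:
    return str(eval(expr, {"__builtins__": {}}))  # demo only: not a real sandbox


transcript = chat(fake_llm, run_arithmetic)
```

The transcript alternates between model output and execution output: the stub asks for `6 * 7`, the executor returns `42`, and the stub then answers and ends with `TERMINATE`.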

To facilitate conversation programming, the AutoGen framework features the following design patterns.

- Auto-Reply Mechanisms and Unified Interface for Automated Agent Chats

AutoGen provides a unified interface for conversation-centric computation, including “send” and “receive” functions for sending and receiving messages and a “generate_reply” function that generates a response based on the received message and takes the required action. AutoGen also introduces and applies an agent auto-reply mechanism by default to realize conversation-driven control.

- Control by Amalgamation of Natural Language and Programming

AutoGen supports combining natural language and programming in several control-flow management patterns: natural-language control via LLMs, programming-language control, and control transition between programming and natural language.
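The unified interface and the auto-reply mechanism can be sketched as below. The method names `send`, `receive`, and `generate_reply` follow the text; everything else (`SketchAgent`, `register_reply`, the counting example) is a hypothetical simplification. Reply functions return a `(final, reply)` pair, loosely mirroring the convention AutoGen's documentation describes for registered reply functions, and the `stop_at_three` rule is a small instance of programming-language control.

```python
from typing import Callable, List, Optional, Tuple

# A reply function returns (final, reply). final=True means "use this result"
# (a None reply then means "do not answer"); final=False defers to the next one.
ReplyFunc = Callable[["SketchAgent", List[str]], Tuple[bool, Optional[str]]]


class SketchAgent:
    def __init__(self, name: str, max_auto_replies: int = 5):
        self.name = name
        self.messages: List[str] = []           # this agent's view of the chat
        self.reply_funcs: List[ReplyFunc] = []
        self.max_auto_replies = max_auto_replies
        self.auto_replies = 0

    def register_reply(self, func: ReplyFunc) -> None:
        self.reply_funcs.insert(0, func)        # newest registration is tried first

    def send(self, message: str, recipient: "SketchAgent") -> None:
        self.messages.append(f"{self.name}: {message}")
        recipient.receive(message, self)

    def receive(self, message: str, sender: "SketchAgent") -> None:
        self.messages.append(f"{sender.name}: {message}")
        # Auto-reply: by default, receiving a message triggers a reply.
        if self.auto_replies >= self.max_auto_replies:
            return
        reply = self.generate_reply()
        if reply is not None:
            self.auto_replies += 1
            self.send(reply, sender)

    def generate_reply(self) -> Optional[str]:
        for func in self.reply_funcs:
            final, reply = func(self, self.messages)
            if final:
                return reply
        return None


def increment(agent: SketchAgent, messages: List[str]) -> Tuple[bool, Optional[str]]:
    return True, str(int(messages[-1].split()[-1]) + 1)


def stop_at_three(agent: SketchAgent, messages: List[str]) -> Tuple[bool, Optional[str]]:
    # Programming-language control: stop replying once the count reaches 3.
    return (True, None) if int(messages[-1].split()[-1]) >= 3 else (False, None)


a, b = SketchAgent("a"), SketchAgent("b")
for agent in (a, b):
    agent.register_reply(increment)
    agent.register_reply(stop_at_three)
a.send("0", b)
```

Calling `a.send("0", b)` starts a chain of automatic replies (1, 2, 3) without an explicit loop; the conversation ends when `a` receives 3 and `stop_at_three` returns a final `None`.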

In addition to static conversations, which usually follow a predefined flow, AutoGen supports dynamic conversation flows with multiple agents, and it gives developers two options to achieve this:

- By using function calls.

- By using a customized generate-reply function.
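The two options can be contrasted in a small sketch. Everything here is hypothetical: the `EXPERTS` table stands in for other agents, `ask_expert` is an invented function, and the JSON shape with `name` and `arguments` keys is only loosely modeled on LLM function-calling output. In the first option the (stubbed) LLM decides at run time which function to call; in the second, a custom reply function written in code decides whom to consult.

```python
import json
from typing import Callable, Dict

# Stand-ins for other agents that can be pulled into the conversation.
EXPERTS: Dict[str, Callable[[str], str]] = {
    "math": lambda q: str(sum(int(tok) for tok in q.split() if tok.isdigit())),
    "spelling": lambda q: q.upper(),
}


def ask_expert(topic: str, question: str) -> str:
    """Hypothetical function exposed to the LLM: starts a sub-conversation."""
    return EXPERTS[topic](question)


FUNCTIONS: Dict[str, Callable[..., str]] = {"ask_expert": ask_expert}


def handle_llm_output(output: str) -> str:
    """Option 1: execute a function call if the LLM emitted one."""
    try:
        call = json.loads(output)
    except json.JSONDecodeError:
        return output  # ordinary natural-language reply
    if not isinstance(call, dict) or call.get("name") not in FUNCTIONS:
        return output
    return FUNCTIONS[call["name"]](**call.get("arguments", {}))


def custom_generate_reply(question: str) -> str:
    """Option 2: a custom reply function decides in code whom to consult."""
    topic = "math" if any(tok.isdigit() for tok in question.split()) else "spelling"
    return f"{topic} expert says: {ask_expert(topic, question)}"


llm_output = json.dumps({"name": "ask_expert",
                         "arguments": {"topic": "math", "question": "add 2 and 3"}})
print(handle_llm_output(llm_output))
print(custom_generate_reply("spell cat"))
```

In both cases the set of participants is not fixed in advance: which expert joins depends on the message being handled.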

Applications of AutoGen

To illustrate AutoGen's potential for developing complex multi-agent applications, here are six applications, selected for their real-world relevance, the problem-solving capabilities AutoGen adds to them, and their innovative potential.

These six applications are:

- Math problem solving.

- Retrieval augmented chats.

- ALF chats (decision making in text-world environments).

- Multi-agent coding.

- Dynamic group chat.

- Conversational Chess.

Application 1: Math Problem Solving

Mathematics is a foundational discipline, and using LLMs to help solve complex mathematical problems opens up a whole new range of potential applications, including AI research assistance and personalized AI tutoring.

The figure attached above demonstrates the application of the AutoGen framework to achieve competitive performance on solving mathematical problems.

Application 2: Question Answering and Retrieval-Augmented Code Generation

In recent months, retrieval augmentation has emerged as an effective and practical approach for overcoming the limitations of LLMs by incorporating external documents. The figure below shows how AutoGen can be applied for effective retrieval augmentation, boosting performance on Q&A tasks.
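A bare-bones picture of retrieval augmentation: pick the documents most relevant to a question and put them into the prompt. This sketch scores relevance by simple word overlap with a tiny stop-word list, a deliberate stand-in for the embedding-based vector search a real retrieval pipeline would typically use; the function names, documents, and prompt wording are hypothetical.

```python
import re
from typing import List, Set

STOP_WORDS = {"a", "an", "are", "is", "the", "when", "which", "what", "how"}


def tokenize(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z0-9_]+", text.lower()) if w not in STOP_WORDS}


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if query_words & tokenize(d)]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it goes to the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}"


docs = [
    "Invoices are due within 30 days",
    "Bananas are yellow",
    "Refunds are issued within 5 business days",
]
print(build_prompt("When are refunds issued?", docs))
```

Only the refunds document shares content words with the question, so it is the only context line in the printed prompt; documents with no overlap are dropped rather than padded in.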

Application 3: Decision Making in Text World Environments

AutoGen can be used to build applications for online or interactive decision making. The figure below shows how developers can use AutoGen to design a three-agent conversational system with a grounding agent that significantly boosts performance.

Application 4: Multi-Agent Coding

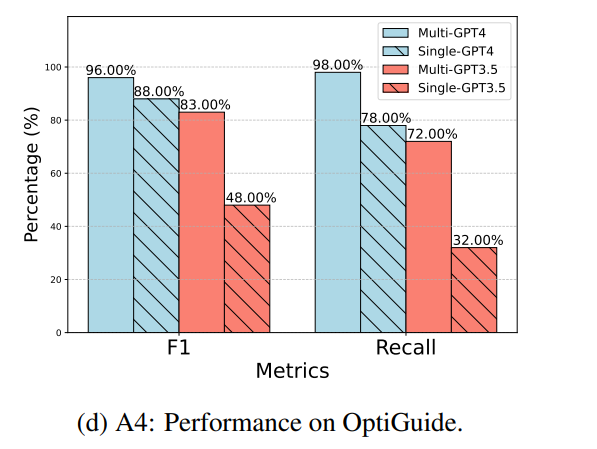

Developers can use AutoGen together with the OptiGuide framework to build a multi-agent coding system that writes code to implement optimized solutions and answers user questions. The figure below shows that this multi-agent design boosts overall performance significantly, especially on coding tasks that require a safeguard.

Application 5: Dynamic Group Chat

AutoGen supports a communication pattern built around dynamic group chats, in which multiple participating agents share the same context and, instead of following a predefined order, converse with one another dynamically. The ongoing conversation guides the flow of interaction among the agents.

The above figure illustrates how AutoGen supports dynamic group chats through a special agent, the “GroupChatManager”.
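The dynamic group chat can be sketched as a manager that keeps one shared history, asks a selection function who should speak next, and appends each reply for everyone to see. `GroupChatManagerSketch` and the coder and reviewer agents are hypothetical; in particular, the rule-based `select_next` stands in for speaker selection that a real manager could delegate to an LLM.

```python
from typing import Callable, Dict, List, Optional

Speaker = Callable[[List[str]], str]  # reads the shared history, returns a message


class GroupChatManagerSketch:
    def __init__(self, agents: Dict[str, Speaker],
                 select: Callable[[List[str]], Optional[str]], max_round: int = 10):
        self.agents = agents
        self.select = select          # chooses the next speaker (None = stop)
        self.max_round = max_round
        self.history: List[str] = []  # context shared by all participants

    def run(self, opening: str) -> List[str]:
        self.history.append(f"user: {opening}")
        for _ in range(self.max_round):
            name = self.select(self.history)
            if name is None:
                break
            message = self.agents[name](self.history)
            self.history.append(f"{name}: {message}")  # visible to every agent
        return self.history


def coder(history: List[str]) -> str:
    drafts = sum(msg.startswith("coder:") for msg in history)
    return f"draft v{drafts + 1}"


def reviewer(history: List[str]) -> str:
    return "approved" if history[-1].endswith("v2") else "needs changes"


def select_next(history: List[str]) -> Optional[str]:
    last = history[-1]
    if last == "reviewer: approved":
        return None
    return "reviewer" if last.startswith("coder:") else "coder"


manager = GroupChatManagerSketch({"coder": coder, "reviewer": reviewer}, select_next)
chat_log = manager.run("write a sorting function")
```

The order of turns is not hard-coded: it emerges from the shared history, which is what distinguishes this pattern from a fixed two-agent exchange.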

Application 6: Conversational Chess

The developers of AutoGen used it to build Conversational Chess, a natural-language interface game with built-in agents for players, each of which can be an LLM or a human, plus a third-party board agent that provides relevant information and validates moves against a set of predefined standard rules. The figure attached below shows Conversational Chess, which lets players express their moves creatively through jokes, role-playing, or even meme references, making chess more engaging not only for the players but also for the audience and observers.

Conclusion

In this article, we have talked about AutoGen, an open-source framework built on the concepts of conversable agents and conversation programming that aims to simplify the orchestration and optimization of LLM workflows by introducing automation into the workflow pipeline. AutoGen offers conversable, customizable agents that leverage advanced LLMs like GPT-3 and GPT-4, while addressing their current limitations by integrating them with tools and human input through automated chats between multiple agents.

Although AutoGen is still at an early, experimental stage, it paves the way for future exploration and research in the field, and it may prove to be the tool that improves the speed, functionality, and ease of developing applications that leverage the capabilities of LLMs.
