Facebook has teamed up with a consortium of 13 universities in 9 countries to create Ego4D, a dataset of first-person (egocentric) video. More than 700 participants wore cameras to record more than 2,200 hours of first-person footage, over 20 times more footage than any previous dataset of its kind. The dataset should be a valuable resource for AI researchers who want to train models to understand human behavior from the wearer's point of view.

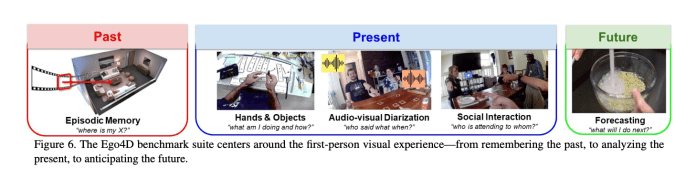

In collaboration with the consortium and Facebook Reality Labs Research (FRL), Facebook AI has also developed five benchmark challenges. They are designed to spur progress toward real-world applications for future AI assistants:

- Episodic memory: What happened when? (e.g., “Where did I leave my keys?”)

- Forecasting: What am I likely to do next? (e.g., “Wait, you’ve already added salt to this recipe.”)

- Hand and object manipulation: What am I doing? (e.g., “Teach me how to play the drums.”)

- Audio-visual diarization: Who said what when? (e.g., “What was the main topic during class?”)

- Social interaction: Who is interacting with whom? (e.g., “Help me better hear the person talking to me at this noisy restaurant.”)

The benchmarks are intended to accelerate research on the building blocks needed for smarter AI assistants that can understand and interact not only with the physical world but also in the metaverse, including through AR and VR.

The research team illustrates the problem with the example of riding a roller coaster: footage filmed from the rider's own point of view looks very different from footage filmed by an onlooker on the ground (a third-person perspective). Humans can relate these two viewpoints intuitively, but today's AI systems cannot, because they are trained mostly on third-person imagery and do not understand the world from a person's own point of view. A computer vision system strapped to a roller coaster could record the ride, yet it would likely not recognize what it was looking at, even if it had been trained on many videos of roller coasters filmed from the sidelines.

Ego4D gives researchers the tools and benchmarks needed to advance perception research centered on the first-person perspective. The researchers say this will be crucial for moving AI research forward, much as datasets such as MNIST, COCO, and ImageNet have underpinned progress in today's computer vision systems, from image recognition software to the navigation systems of autonomous vehicles.

The wearable cameras captured participants' unscripted daily lives, including activities such as shopping and cooking. They also recorded conversations as they happened, without translation, which could help researchers better understand how people around the world communicate with one another and express their own identities.

Although this field has only just begun to explore human behavior from the first-person perspective, Ego4D's unprecedented scale and diversity are expected to provide insights that earlier datasets could not.

The university consortium plans to release the data later this year. The paper is available at the link below.

Paper: https://arxiv.org/pdf/2110.07058.pdf

Project: https://ego4d-data.org/

References:

- https://ai.facebook.com/blog/teaching-ai-to-perceive-the-world-through-your-eyes/

- https://www.ithome.com.tw/news/147291
