In the novel 1984, Winston Smith knew better than to say out loud anything bad about Big Brother, but he appreciated that at least what he silently thought was beyond the surveillance capabilities of the Thought Police. That may not always be true in the future. A team of researchers from the University of Texas at Austin has developed a new artificial intelligence (AI) system that can translate a person’s brain activity into a stream of text while they listen to a story or silently imagine telling a story.

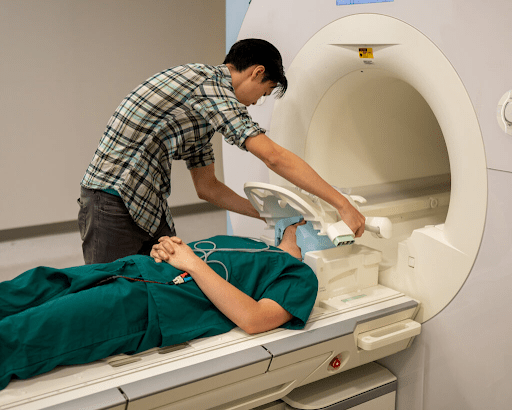

The system, called a semantic decoder, is far from being a surveillance tool but could be a boon for people who are mentally aware but can’t speak. At present the technology works only in the lab and only with a cooperating participant, but the researchers, doctoral student Jerry Tang and assistant professor Alex Huth, say it could help people who are mentally conscious yet unable to speak because of conditions such as stroke. By analyzing brain activity recorded with an fMRI scanner, the semantic decoder generates corresponding text without requiring surgical implants or a list of prescribed words.

Their study was published in the journal Nature Neuroscience.

Tang and Huth used a transformer model, similar to the ones that power Google’s Bard and OpenAI’s ChatGPT. Unlike other language decoding systems, the semantic decoder can decode continuous language with complicated ideas, as opposed to just single words or short sentences.

The semantic decoder captures the essence of what is being said or thought rather than providing a word-for-word transcript. The researchers designed it to capture the gist of a participant’s thoughts and words, albeit imperfectly. Even so, about 50% of the time the machine produces text that closely matches the intended meanings of the original words. For example, when a participant listened to a speaker say, “I don’t have my driver’s license yet,” the decoder translated the thought to “She has not even started to learn to drive yet.” When another participant heard, “I didn’t know whether to scream, cry, or run away. Instead, I said, ‘Leave me alone!’”, the decoder produced “Started to scream and cry, and then she just said, ‘I told you to leave me alone.’”

The researchers were mindful of the technology’s potential for misuse and addressed ethical concerns in the study. They stated that the decoding system worked only with cooperative participants who had willingly taken part in training the decoder. They also reported that the results were unusable when the decoder was applied to individuals it had not been trained on, or when trained participants resisted, for example by thinking other thoughts. The team emphasized that they want the technology to be used only when a person wants it and when it helps them.

While the semantic decoder is not currently practical for use outside of a laboratory, the researchers believe the work could transfer to other, more portable brain-imaging systems, such as functional near-infrared spectroscopy (fNIRS). “fNIRS measures where there’s more or less blood flow in the brain at different points in time, which, it turns out, is exactly the same kind of signal that fMRI is measuring,” said Huth. “So, our exact kind of approach should translate to fNIRS,” although the resolution with fNIRS would be lower.

The researchers also asked participants to watch four short, silent videos while in the scanner. From the participants’ brain activity, the semantic decoder was able to accurately describe certain events in the videos. The development of this AI system was supported by the Whitehall Foundation, the Alfred P. Sloan Foundation, and the Burroughs Wellcome Fund.

Co-authors of the study include Amanda LeBel, a former research assistant in the Huth lab, and Shailee Jain, a computer science graduate student at UT Austin. Huth and Tang have filed a PCT patent application related to this work.
