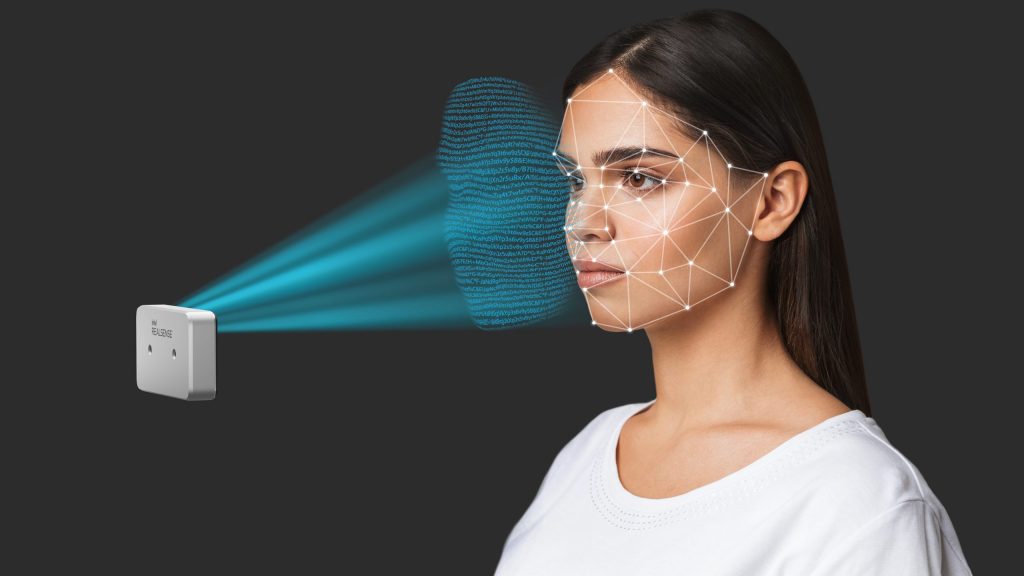

Intel RealSense is known in the robotics community for its plug-and-play stereo cameras. These cameras make gathering 3D depth data a seamless process, with easy integrations into ROS to simplify the software development for your robots. From the RealSense team, Joel Hagberg talks about how they built this product, which allows roboticists to perform computer vision and machine learning at the edge.

Joel Hagberg

Joel Hagberg leads the Intel® RealSense™ Marketing, Product Management and Customer Support teams. He joined Intel in 2018 after a few years as an Executive Advisor working with startups in the IoT, AI, Flash Array, and SaaS markets. Before his Executive Advisor role, Joel spent two years as Vice President of Product Line Management at Seagate Technology with responsibility for their $13B product portfolio. He joined Seagate from Toshiba, where Joel spent 4 years as Vice President of Marketing and Product Management for Toshiba’s HDD and SSD product lines. Joel joined Toshiba with Fujitsu’s Storage Business acquisition, where Joel spent 12 years as Vice President of Marketing, Product Management, and Business Development. Joel’s Business Development efforts at Fujitsu focused on building emerging market business units in Security, Biometric Sensors, H.264 HD Video Encoders, 10GbE chips, and Digital Signage. Joel earned his bachelor’s degree in Electrical Engineering and Math from the University of Maryland. Joel also graduated from Fujitsu’s Global Knowledge Institute Executive MBA leadership program.

Transcript

hello and welcome to the robohub podcast

today we will be hearing about intel

realsense from joel hagberg

head of product management and marketing

for their computer vision product lines

at intel realsense they’ve developed a

series of camera products

which allow robots to leverage

off-the-shelf computer vision technology

with intel’s realsense technology robots

can generate

three-dimensional videos extract skeletal

movement as people walk

detect gestures and objects and more

joel talks to our interviewer

about the value of leveraging tightly

integrated

hardware and software products for

startups and established companies alike

particularly to enable them to hit the

ground running

he also discusses the integration of

machine learning

into intel realsense products

hello welcome to robohub can you tell me

a little bit about yourself

yeah my name is joel hagberg i lead the

marketing product management and

customer support teams at intel

realsense

i’ve been here about three years uh

prior to that did a lot of consulting in

uh the mobile payment space and some

memory acceleration and also spent

20 plus years in high-tech product

management marketing for

storage devices flash memory

hard disk drives a lot of other things

and many building blocks are used in

robotics uh today and

what brought you to uh the real sense

team at intel

well i was uh looking i actually i was

doing some consulting getting some

uh flash advanced um

apache pass dimms from from intel for an

ai accelerator project

and was talking to their vp of sales and

he said you know we’re really looking

for

somebody come help with product

management marketing for

uh some emerging computer vision uh

products would you be interested in

talking to us

and as a consultant at the time i said

sure i’ll come in and talk and

as i uh met with real sense i realized

you know this technology

uh in real sense has been in this

business you know at the time was like

eight years

uh doing some really fantastic things

with

uh computer vision both uh kind of in a

bunch of different areas

at the time coded light and stereo

products

and was looking to get into lidar and i

just felt like it’s that

kind of a nascent industry that’s

getting ready to

you know kind of become ubiquitous and

felt it was really a good time to get in

and learn

you know and and if you look at the use

cases robotics is the

largest use case for this and i felt

like well hey robotics is an area that’s

going to

see explosive growth computer vision

is seeing explosive growth

auto you know assisted driving adas

you know autonomous vehicles and robots

and

and cars is that an area where we’re i

think it’s going to see tremendous

growth so

that’s really what got me excited to

join and it’s kind of kind of like a

startup within intel it’s part of the

emerging growth

and incubation group so it’s uh we have

an incubator we pull in a lot of other

you know technologies so you’ll see uh

intel does a lot of stuff with

um with uh computer vision whether it’s

lidar

radar and and uh the stereo vision

that we’re we’ll be talking about today

uh but uh we you know there’s a whole

team

at mobileye that’s doing stuff for

for vehicles so we’ve got a

uh a pretty broad you know kind of r&d

team doing research our cto has extensive

experience

in in computer vision so it was just

felt like a

very exciting team to join and coming in

it is like a startup within this you

know the big

umbrella of intel but doing a lot of uh

very kind of moving very fast and and

making

uh you know uh changes quickly so that’s

one of the things that really appealed

to me about

the intel realsense team yeah

can you walk us through a couple of

products that are offered by realsense

sure if you look at at real sense uh

as as we you know look at the kind of

the use case of products there was a

series of products that were used uh

in coded light that were

installed in pcs used in very short

range

indoor applications and uh yeah so if

you look at the last 11 years we’ve

shipped over 2 million products into the

market

and coded light was kind of that early

phase probably in 2018 the stereo

products

were launched and i joined in 18 and the

stereo products the d400 family that we

have today

uh was starting to you know kind of see

some uh

growth in the uh both in that we have a

an e-commerce site so we were seeing

direct touch with customers

you know really startups working on new

projects we’re able to source directly

from our website

and then we sell through distribution so

a lot of specialty distributors that are

in the supplying the robotics

manufacturing

uh digital signage markets were

promoting our products so

uh we have a d400 family we have a d415

and d435 at the time which were

our our volume products uh the you know

d415

has a kind of a little bit shorter range

with a rolling shutter

and then the d435 has a

they’re both in the three meter range

but just seems like a little bit you

know

a little bit further range with the d435

and it has a global shutter

and that that is the product that’s most

widely adopted

in the robotic space and if you look at

the way intel

you know goes to market we sell a

peripheral which is plug and play

you can start you know testing

immediately or you could take

uh if you want to look at kind of a

tighter integration you can buy a module

you can buy an asic card integrated

actually into your design

and enable you to reduce cost a bit or

you know change the look and feel of the

product

more to your to the specific oem’s uh

you know platform but those are the the

volume products that we launched

in 2018 which have been really widely

adopted into the

into the robotic space and this is a

series of stereo vision cameras that use

a light pattern that they emit onto the

scene and then

that that light pattern it’s an ir light

correct

yeah well if you look at it the stereo

cameras themselves

work in bright sunlight to

to dark so we do just take the you know

natural sunlight

images in through the stereo imagers

and those are able to you know take in

and create depth information

outside of you know uh needing an

illuminator and a projector

to uh to light up a scene so we do have

we do project a stereo a pattern which

the stereo

uh cameras use in low light conditions

or

or no light conditions so we are able to

use ir

so if you look at it we have kind of the

the data comes in through

the uh the the stereo sensors we have

both

rgb ir we have the projector

illuminators which

do light up the scene and project a

pattern so as you indicated with

is the ability to to see texture to see

things

using those uh that that pattern is

projected onto a scene

and i’ve actually used the intel

realsense d435i before

and one of the amazing things about it

you just buy a piece of hardware

connects by a usb very simple to set up

and um that like

that being able to connect something by

usb

and be super simple how has that

affected the design

of the products that you’ve built yeah i

think

that actually what you hit on is one of

the kind of the

the cornerstones of the intel approach

which is let’s make it very easy to get

up and started so you’re

whether you’re you know a researcher at

a university whether you’re a student in

high school

you can plug and play and and start

working immediately and we have

you know ctos of of startups to

to major corporations that like that

they can just buy a camera

plug it in and start testing immediately

and one of the things

in as i talked to the the design team

that they felt was really critical was

to have an open source

community and an open source sdk so we

built the the sdk 2.0 that we

launched that we support for our our

d400 family actually that

sdk applies to all of our cameras so if

you buy the

for example your d435 that you

referenced

a plug and play you can use code

examples from

that intel provides on our github site

or you can use code examples from the

community to get

immediately up and going you know you

might want to do you know

simultaneous slam and or visual slam

with

some algorithms that are available on

the site or you may want to

look at you know collision avoidance

with your robot

you know there’s things there or your

you have an articulated arm you want to

look at you know what code examples are

on there for object detection and so we

built this uh the sdk with the

thought hey let’s make it open to the

community to allow them

to learn from each other to

provide you know kind of support uh as a

broad sense to

to get up and going quicker and that’s

one of the thoughts in the design was

let’s make it

usb plug and play as a peripheral now we

do

see that once uh a company has

adopted it tested it and deployed it uh

depending upon the use case

there are people that like to integrate

it into their device

and for some uh you know

to reduce cost and to to uh to

make it look and feel like you know just

a single oem device with the camera

embedded in it

but in the case of some of the largest

robotics companies we

we have deploying it we’ll see them

still use the peripheral because it’s

calibrated

plugs in and it just works from from the

get-go and they can

they look at it and say hey intel you’re

the computer vision expert

we’re a robotics expert we’re going to

focus on the robotics operating system

the robotics

uh you know uh you know kind of the

the use case for this particular robot

and we’ll let you

worry about giving me the data so i can

make decisions

and so plug and play the peripheral

we’re finding even some

very large robotic manufacturers are

using the peripheral

in their design but we’ve got others

that are saying hey we’re going to a

high volume robot

for food delivery and you know we’re

going to be

you know going outdoors and we want to

embed

you know the module inside of an ip67

rated camera for out in the rain and

and so we have customers that are uh

integrating the product into their own

device in a robot

you know then in the use case of the of

a delivery robot for

you know outdoor food delivery or even

delivery inside of a restaurant or

delivery in a hospital

they’re integrating the module into

their design

in in multiple spots that way they’re

deploying uh they’re they’re able to see

multiple cameras inside of the design

and integrate it into their you know

their base

uh robot platform

yeah and i imagine that designing this

so that it can run off of usb and then

working within the power constraints of

uh what the usb cable can

output and also what the computer and

whatever is powering it

can actually give to over that usb cable

has forced you guys to make the design

very specialized to work off the usb

have there been any trade-offs that you

make and that maybe

as you see for some of these companies

that are trying to integrate this

into a single cohesive

product that doesn’t have these

limitations

yeah i think if you look at it from like

from a design

philosophy intel wanted to to build

a very high performance a computer depth

camera

that would give you very good quality

depth

with a an asic that does all the

calculations at the edge so basically

you’re able to do all that work inside

the camera

and then transfer the data and and we we

have a wide range of frame rates

and resolutions available so you can

really can

you kind of tune what you receive

at the system level uh based on your

requirements so so using the usb

bandwidth obviously

you know going to usb there’s other you

know bandwidth you do some trade-offs

like for ethernet you may have a

a trade-off in resolution so we do have

customers that that buy our

our cameras integrate them into an

ethernet enclosure for some

industrial applications uh we we do see

that but there are some trade-offs on

resolution so

it really is you know you look at the

end

user or the robot manufacturer will look

at it and say

well what do i need from the scene how

much information do i need for object

recognition how much

detail do i need for scanning a room

and building you know kind of a 3d map

of this environment

or to do visual slam what kind of amount

of data do i need what kind of frame

rate what kind of

resolution do i need to to build this 3d

model

so there so we’ve purposely built it

with a with that kind of flexibility to

you know dial up the frame rate or down

or

adjust the resolution up or down and by

keeping it

within that usb power mode we’re able to

to do all that at the edge and still

keep it a very low power you know 150 to

300 milliwatts i mean you’re looking at

a very low

power device and because we’re doing the

processing

within that asic you don’t really need a

a very high end graphics processor at

the system level

to do that work and i think you’ll look

at some other

solutions in the market where you’re

trying to pull data in

you’re you’re having you’re taxing your

system with a very you know a high-end

processor or graphics engine

to do that calculation that we’re

actually doing within the camera
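
The frame rate and resolution trade-off described above can be made concrete with a little arithmetic. The following is a minimal sketch, not taken from Intel's SDK: it estimates the raw, uncompressed bandwidth of a single stream. The function name `raw_stream_mbps` is hypothetical, the 2 bytes per pixel figure is an assumption (one 16-bit depth value per pixel), and the listed modes are illustrative rather than an official list of supported modes.

```python
def raw_stream_mbps(width, height, fps, bytes_per_pixel=2):
    """Estimate raw (uncompressed) stream bandwidth in megabits per second.

    bytes_per_pixel=2 is an assumption: one 16-bit depth value per pixel.
    """
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1_000_000


# Illustrative modes only (assumed for this sketch).
modes = [(1280, 720, 30), (848, 480, 60), (640, 480, 30)]
for width, height, fps in modes:
    mbps = raw_stream_mbps(width, height, fps)
    print(f"{width}x{height} @ {fps} fps: {mbps:.1f} Mbit/s")
```

Comparing the printed figures against the usable bandwidth of a given link (USB or an Ethernet enclosure) gives a rough sense of why a lower resolution or frame rate may be needed on a slower link.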

and so realsense has done a really

excellent job at

integrating hardware and software and

then outputting that

over a common format

now how does machine learning and ai fit

into this story at real sense

yeah i think one of the things that

obviously

as we talked to our partners uh there’s

a lot of work

in ai inference engines and machine

learning happening within

the industry happening within intel

and

uh with with our cameras the the

the ability to do all of this

very fast processing at the edge

really enables the the remote system

to make inference and decisions

on the fly because you’re getting this

data processing at the edge

so it does lend itself to a wide range

of ai applications and machine learning

where

you know the robot can course correct

whether it’s a drone

flying making judgments you know on on

at a high speed or it’s you know trying

to determine where to land

it can you know use its depth uh

information to make a decision on you

know safe places to land or

how to approach or how to avoid an

obstacle and

uh so those things are happening but we

also see a lot of

work with companies that are

doing this running algorithms for

object detection and in the robotic

space

you know if you look at e-commerce

there’s a significant because of the you

know significant growth especially with

covid

of you know e-commerce and ordering at

home and having stuff delivered to your

home office

there’s been a significant push at how

do we improve

the um the performance of

uh robotic arms for pick and place and i

think

part of that is enable the machine

to have uh the ability to make a

decision based on what’s in a bin

okay knowing machine learning and

training

and there’s training algorithms you know

have built over

you know repetitive pattern repetitive

uh use

and uh and and and

uh kind of uh applica or data sets that

that may have been purchased or trained

within

one of our our partners that can allow

a pick and place robot to make better

decisions and then

you know we have companies like uh you

know righthand robotics that are

are looking at how can we use a labor

multiplier and be able to use

you know one person to run multiple

robotic arms and

and as you improve your training

algorithms you then also improve the

efficiency of these robotic arms to make

decisions

which then can discern objects in a bin

recognize locations

and look at how best to pack you know

something for shipment and that would

allow an operator

to you know kind of supervise multiple

screens and

and if there’s a a problem it says okay

i see this robotic arm is having an

issue with this new object that’s coming

into

the test well we need to do some more

training on that particular object so

it’s

it allows the operator to then identify

things that are

troublesome for robotic arm to make a

decision

and then how to approach it how to how

to pick something up how to

you know discern one object from the

other

it’s something that machine learning i

think comes definitely into play and

then

i think the robotic arms and just the

you know kind of the

escalation of uh e-commerce demand has

really driven

how do we improve efficiency in this

machine learning algorithms to to really

train the arm to to be more efficient

and and

and deliver you know improved efficiency

throughout the supply chain and that’s

one of the things that i think when we

we started you know working on some of

these applications

we saw there’s a rippling effect okay if

you can improve efficiency at the

pick and place space well all of a sudden

then you need to improve efficiency on

moving those you know full boxes out

with robots and

now your amr robots become a

a a a much

more focused you know effort on how do

we okay how do we improve the amr

move away from you know fiducial tags or

guided lines and guided vehicles to an

autonomous vehicles that can actually

move faster and make decisions uh

and and you know more safely how to stop

quickly

if you know some some person or some

other

object comes into you know an area where

they

felt they could move safely so so i

think machine learning

probably a long-winded answer to your

question i think we see

it kind of bubbling up in certain use

cases

where hey these training algorithms are

really delivering

value to the not only to the robotics

manufacturer

but to the end customer who ultimately

is saying hey here’s the roi i’m getting

by investing in these robots so the real

sense product line

is it’s great for being able to tag

you’ve got your depth data

on top of the video data sure you can

use that to train and tag these ml

algorithms

are you guys also developing these

algorithms

in-house and then making them

publicly available

for somebody who’s prototyping and they

want their depth camera but they also

want to

run some standard algorithms on it

object detection facial recognition

without having to rebuild the wheel or

go through a lot of documentation sure

yeah i think one of the things one of

the challenging things in the industry

is that

data sets and and the reality is that we

buy data sets ourselves to help train

algorithms for

certain applications and one of the

challenges is these are not

something we can pass on publicly right

so we we acquire a data set we

use it in an algorithm and for example

we’ve launched uh beginning this year

a facial authentication camera well part

of the last

few years of work has been we we had

uh we saw an increased use of our

standard

actually the d415 which is the

um a little lower cost uh

camera than the d435 and that product

comes in 149 dollar list versus the 179

list of the d435

that camera in its its module form again

for further cost reduction

and with its rolling shutter it has a

very good performance at

that short range we found it to be

starting to see significant uptick in

use

in facial authentication applications

with third-party fa software

well intel realsense at the time had

already been working on

uh facial authentication software for a

customer

who is looking to use our you know

cameras

in uh residential door locks so we

started

partnering with them and and realized

okay for us to really

build this out we really need to to

kind of build uh data sets and you know

buying data sets

and you know testing you know a wide

range

of ethnicities and you know kind

of

you know and in capturing data in all

low light no light bright lights you

know backlight conditions

it is a pretty daunting task so over

a few years we’ve invested a lot of time

data collection ourselves you know with

tens of thousands of you know

individuals across the world of

different ethnicities

to build an algorithm into our

facial authentication camera so at the

beginning of you know

2021 we launched the intel realsense

id

which is a on-device facial

authentication

camera with an algorithm built into the

product so in that case we’ve invested a

lot of time and effort

to build a product which is a

very very high performance uh very low

cost low power

but also has significant anti-spoofing

and part of the anti-spoofing is

building that

data set and it is really a core ip

to our device that data set so so it’s

something that

you’ll find most companies that are are

doing

the the object recognition or facial

authentication

they’re not putting out those data sets

you know

for the public because there is ip and

and lots of uh time and money invested

to build that data set

so i think it’s it’s something i think

you’ll see there’s a lot of universities

out there that have

data sets available for object

recognition again you end up buying it

or

getting access it but one of the

stipulations is if you’re using it to

train your system

is you’re not going to monetize that set

or you’re not going to you know

you really need to go back to those

universities and and look for

you know what’s possible for you know a

startup or a

uh you know an individual researcher

what data sets are available

you know from the edu community that

might

enable you to do some some preliminary

work but i think

uh for us uh you know today

we have a lot of partners that are doing

object recognition

uh with our cameras and they’re one of

their core ips is the dataset they built

you know that allows them to improve and

tune that object recognition

so the algorithms um that are the

results of the ml can be

run and given to the consumer but access

to that data set that’s the

gold mine that’s kept separate yeah i

think if you if

we look at our partners that are doing

object recognition and some of the other

uh uh applications that

they are you know training the device

with their data set and and they don’t

you know share that publicly

so i think it’s it’s it’s really one of

those things where i think as we look

forward

we’ve done some work with robots uh in

warehouses for

inventory management um and uh

actually dimensional weight for

measurement for

logistics and billing and shipping and

in that

instance you know a robot can

go along scan a shelf and say

okay this sku number you know one two

three

is this size based on that pallet

there’s 23

you know boxes in that so so a robot can

come do inventory

with that but we’ve had to train it with

an algorithm so when we go to a

to a warehouse partner we’re actually

building a data set

unique to them so we’re in in the

warehouse we deployed these for

say incoming inspection any new sku

comes in

it’s scanned we have a you know a

mounted we can have a desk mounted

device which or

or a table mounted device which is

scanning you know

individual packages we can have a cage

scanning

uh and we actually use we have a lidar

camera

which has very good edge fidelity that’s

been used in this

space our l515 lidar product we have

released a

dimensional weight software and now this

dimensionalization software is available

on our website as a trial

so people can pick it up and look at it

and so how do i do you know

uh you know volumetric billing well you

can use this to a very very quick

snapshot put a a uh package on it on the

table

and use our camera to take a quick look

at it and you know give you the volume

for for that legal for trade billing and

so

in that case you know we’re able to

to recognize the size and shape of a

sku

and we can build a database of a

particular

customer for their warehouse of all the

skus they they maintain

then they in turn can use that you know

use take that algorithm

that’s been trained around their unique

skus and have a robot

now do inventory do you know reordering

do

uh a kind of volumetric billing as

things are going out to be loaded on a

truck

so there’s a lot of interesting use

cases but in a sense

one of the things that we’re enabling

with this dimensional weight software is

the customer to build their own uh kind

of

data set that of their device of their

own unique

you know products that allows them to to

move forward

with a wide range of applications and

and we actually see you know customers

coming and saying okay this is

this is great and now i want to do i’m

moving a lot of things over conveyor

belts can you look at that so we have a

lot of

you know creative um ideas coming

out of the the initial dimensional

weight billing system

now it’s inventory control system but it

is in in a sense

you know building a a data set around a

unique

you know customer uh you know kind of

um environment and so we do see our

cameras being used in that case

and and for us it’s we’re enabling the

customer to build that data set

over time by capturing information use

of our cameras
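
The dimensional weight billing idea discussed above can be sketched in a few lines. This is a minimal illustration and not Intel's dimensional weight software: it assumes the camera has already measured a package's length, width and height in centimetres. The function names are hypothetical, and the divisor of 5000 cubic centimetres per kilogram is an assumed example value, since each carrier sets its own.

```python
def volume_cm3(length_cm, width_cm, height_cm):
    """Volume of the package's bounding box in cubic centimetres."""
    return length_cm * width_cm * height_cm


def dimensional_weight_kg(length_cm, width_cm, height_cm, divisor=5000.0):
    """Dimensional (volumetric) weight: volume divided by a carrier divisor.

    divisor=5000.0 cm^3/kg is an assumed example; carriers define their own.
    """
    return volume_cm3(length_cm, width_cm, height_cm) / divisor


def billable_weight_kg(actual_kg, length_cm, width_cm, height_cm, divisor=5000.0):
    """Bill on whichever is larger: actual weight or dimensional weight."""
    dim_kg = dimensional_weight_kg(length_cm, width_cm, height_cm, divisor)
    return max(actual_kg, dim_kg)


# A light but bulky box is billed on its size rather than its mass.
print(billable_weight_kg(3.0, 40, 30, 20))
# A dense box of the same size is billed on its actual weight.
print(billable_weight_kg(10.0, 40, 30, 20))
```

Storing the measured dimensions per sku, as described in the interview, would let the same calculation be reused for inventory and outbound billing.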

yeah so realsense has been immensely

impactful for

the market of 3d reconstruction right

and

it still feels like a field that has a

lot of room to grow and become more

commonplace in product offerings

by various companies what would you how

would you describe the current state of

this market and where it will grow to

yeah i think we we see that there’s

there’s a number of you know partners

we have

like dotproduct that are building some

uh interesting handheld devices we’ve

got other companies that are

uh you know doing uh kind of 3d

reconstruction

of inspection so they might go into a

an environment after an earthquake and

do inspections

of structures like bridges and

and overpasses and look for structural

cracks in concrete

and they’re doing a very detailed 3d

scan

and today a lot of it is using our

stereo cameras which work

very well indoors and out we do see

others doing the the current lidar

offering the l515 is

like i said it’s very good for that edge

fidelity in the building

it’s also very good at scanning from

from distance

but today that is that l515 is an indoor

only product so if you’re doing

you know reconstruction indoors

of a

of a house or an an indoor environment

lidar works really well and and i guess

again we are looking at the future how

do we expand

you know the the use of that lidar

beyond because if you look at

again there’s a lot of very high

performance outdoor lidar which is

very expensive you know five ten

thousand dollars and we’re talking about

a 349

lidar device which is uh gives you

excellent depth data

you know in that you know you know

forward uh kind of nine meter range

and um so yeah the room

reconstruction you’re talking about we

see

new construction um uh kind of uh

home real estate kind of applications we

actually have

some police using it for crime scene

reconstruction

uh so we see see these things coming and

we have a

company using it for ships for

inspection

of uh you know kind of structural

inspection and you know just even just

maintenance as is this area been painted

you know is is there any cracking or

peeling of paint as they scan

as they walk through with a handheld

scanner

uh the structure of a ship or an oil

field

you’re looking for you know potential

maintenance hazards on

pipes and things so so we’ve got

companies looking at

doing uh work with drones for field

inspection

uh scanning with tablets or robots

for sites and i think it’s as you

indicated it’s kind of a

it’s an emerging space um and we see

a a number of customers building these

these handheld or

robot mounted uh devices for the

application but i do think it’s a

it’s a it’s a still an early stage in

that we

if we look at our use cases you know

robotics is

by far the dominant use case i think if

you step down facial authentication

is is another very large use case for us

we see

you know deployed in point of sale atm

systems around the world

people are starting to use face

authentication uh door

uh kind of corporate access control

instead of the

you know the badges that you badge in

you’re now people are scanning faces as

they walk through the doors

and we see it deployed in residential

home locks and we’ve already launched

some residential home locks with

partners in china

we do see them coming to you know the

rest of the world

over time uh for facial authentication

so that’s kind of the second

big bucket of use case and then um

next is scanning so that the scanning

you’re talking about you know we do see

you know body scanning room scanning uh

you know

inspection uh you know and new

construction

kind of scanning we’ve got customers

using it to

scan a body into avatars for

for gaming uh we see health clubs uh

scanning you know doing a complete you

know 360 scan of a person to look at you

know

how their inches are reducing over the

course of their workout or how their

muscles are expanding

so those are areas where scanning is

starting to be used

and we also see recognition interaction

as another

you know large use case for us and and

that i think that one when you look at

scanning i think it’s it’s it’s a it’s

it’s emerging i think recognition

interaction is is a bigger space for us

today

because we see it in um uh educational

displays

interactive displays where k through 12

you know education where especially in

the you know k

through uh eight uh you know schools

around the world

where they put a a digital sign up put

one of our cameras on top

there’s a number of companies doing

these educational programs where kids

really have interactive play to learn

language or math

with you know using gesture recognition

with that digital sign also

with the recent covid-19 kind of

concerns about

transfer of the virus uh touch screens have

gotten a lot of

concern over from a number of our

customers so using our cameras to kind

of put a

virtual screen in front of a touch

screen so you can then

get close but not touch it and still

have that that same

user interaction of a touch screen and

you get close maybe a green

dot pops up and it acknowledges you just

selected that

particular fast food item we see we’re

starting to see those

start to be deployed so i think

recognition interaction is an area where

it’s kind of a broad area because it’s

those

interactions with digital signs

interacting with kiosks or

displays but also retail analytics is a

big growth area where people are

mounting

cameras in the ceiling just to track uh

you know customer flow or

customer movement through a department

store to just

to understand you know how they should

lay things out or we also have the

kind of the pay-as-you-go you know the

cashier-less store

where a lot of our cameras are mounted

in the ceiling and customers are able to

you know walk through grab an item and

the depth camera can know hey

that was you know shelf number three

and other cameras can triangulate and

say yes that’s the item that was picked

and

charge you as you go out the door so we

see

you know that retail analytics uh the

touchless

you know kind of that the the the uh the

facial authentication

robotics of course is still the biggest

growth you know spot for us and the

biggest you know

use case for our products but we see

these other ones starting to to emerge

and i think the

the question you asked about machine

learning i think there’s definitely

a number of customers who are looking at

how can we get more intelligence

at the edge and how can we do more

decision making at the edge and that may

come with you know

surveillance cameras trying to discern

is that a human being or an animal

that’s walking across that

you know yard or is it in a warehouse is

this

you know robot that’s moving along as a

security guard make a decision

is there any you know other movement in the

area that’s not

typical is there a person you know a

human being you know entering the

environment where they

we don’t expect one so having a depth

camera

that can make a decision at the edge and

send an alert up rather than

24-hour video feed of everything well

here’s the one

moment that we see something out of the

ordinary make a decision and send that

up so

adding more intelligence to the edge i

think is something that you’ll see

coming

and i i think you know your machine

learning question i think is really

how does the how do we put more

intelligence at the edge is something

we’re still investigating as

as as an industry and i think you’ll see

you know advances coming along along the

way there

awesome and last question for you sure

what’s top of mind for you

at realsense i think at realsense

obviously

the way we’ve designed uh the cameras is

that

you know we’re gonna ensure that it’s

future proof so if you build

you know something around one of our

cameras and you decide yeah i really

need to

go longer range i really you know need

to move from stereo to lidar for this

application

the development work you’ve done is is

future proof that it’s going to

plug and play with the next camera and

that’s so for us as we look to the next

generation

uh you know this year we launched uh you

know

the um the facial authentication kind of

an extension

of use of depth to to provide

anti-spoofing in that face the kind of

facial authentication space we’ve also

launched a touchless control software so

we’re looking at how do we augment

and enhance the the offerings with

software and other

maybe algorithms that can enable our our

partners to build

you know unique solutions on top of but

we’ve also

extended the camera’s range

we introduced a d455 which gives you

kind of instead of a three meter range

you’re now at a six meter range and so

we’re continuing to look at

how do we improve the performance at

distance how can we make

you know let your robot move faster the

further you can see the

faster you can make a decision on when

you need to stop so

maybe you can move a little faster and

if you you’ve got that little bit

longer range so i think we’re continuing

you know to look

and to work with our customers on their

particular

new concerns whether they’re looking at

alternative interfaces

longer range or other

other things that they can think of you

know for example calibration

has been an issue with stereo cameras over

the years so intel worked on

a self calibration so we have health

check

that our cameras can do on their own so

the camera can calibrate itself

you know so

over time as we work with our customers

we see hey what challenges are you

having

and how can intel help you you know uh

you know ease your support requirements

uh on the use of depth cameras since

that’s really a goal for us is

not only to extend the portfolio but how

do we improve

the current you know cameras to ensure

it’s easier for the robotics customers

to be able to

adopt our solution and uh and improve

the performance of their end device

which we want to be the computer vision

experts

to enable that that customer to be the

robotics expert

awesome thank you very much for speaking

with us today yeah about it was

definitely uh appreciate the the chance

to get here

then and uh join the podcast and i look

forward to uh

to watching many more uh episodes of

your podcast in the future

thank you very much see ya okay thank

you take care

we hope you enjoyed listening to joel

hagberg discuss the latest updates from

intel realsense

there’s plenty more to discover at

robohub.org/podcast including information about how

you can become

a patron for robohub as a

community-sponsored podcast

we are run by a team of volunteers from

around the globe and we rely on small

donations from listeners like yourself

to help us keep going

so check out how you can get involved

and become a supporter patron

or volunteer at robohub.org/podcast

our next episode will air in two weeks

time until then

goodbye

All audio interviews are transcribed and edited for clarity with great care; however, we cannot assume responsibility for their accuracy.
