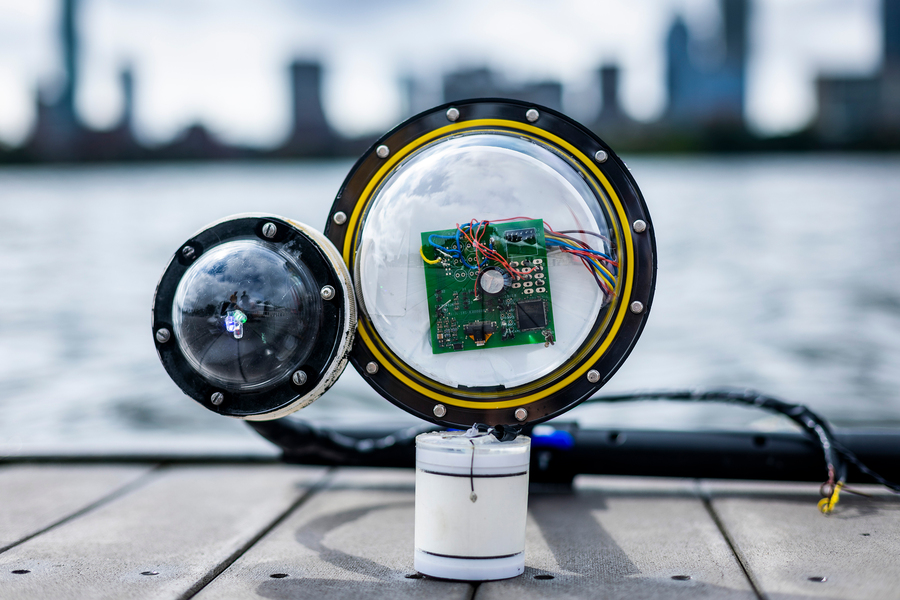

A battery-free, wireless underwater camera developed at MIT could have many uses, including climate modeling. “We are missing data from over 95 percent of the ocean. This technology could help us build more accurate climate models and better understand how climate change impacts the underwater world,” says Associate Professor Fadel Adib. Image: Adam Glanzman

By Adam Zewe | MIT News Office

Scientists estimate that more than 95 percent of Earth’s oceans have never been observed, which means we have seen less of our planet’s ocean than we have the far side of the moon or the surface of Mars.

The high cost of powering an underwater camera for a long time, by tethering it to a research vessel or sending a ship to recharge its batteries, is a steep challenge preventing widespread undersea exploration.

MIT researchers have taken a major step to overcome this problem by developing a battery-free, wireless underwater camera that is about 100,000 times more energy-efficient than other undersea cameras. The device takes color photos, even in dark underwater environments, and transmits image data wirelessly through the water.

The autonomous camera is powered by sound. It converts mechanical energy from sound waves traveling through water into electrical energy that powers its imaging and communications equipment. After capturing and encoding image data, the camera also uses sound waves to transmit data to a receiver that reconstructs the image.

Because it doesn’t need a power source, the camera could run for weeks on end before retrieval, enabling scientists to search remote parts of the ocean for new species. It could also be used to capture images of ocean pollution or monitor the health and growth of fish raised in aquaculture farms.

“One of the most exciting applications of this camera for me personally is in the context of climate monitoring. We are building climate models, but we are missing data from over 95 percent of the ocean. This technology could help us build more accurate climate models and better understand how climate change impacts the underwater world,” says Fadel Adib, associate professor in the Department of Electrical Engineering and Computer Science and director of the Signal Kinetics group in the MIT Media Lab, and senior author of a new paper on the system.

Joining Adib on the paper are co-lead authors and Signal Kinetics group research assistants Sayed Saad Afzal, Waleed Akbar, and Osvy Rodriguez, as well as research scientist Unsoo Ha, and former group researchers Mario Doumet and Reza Ghaffarivardavagh. The paper is published in Nature Communications.

Going battery-free

To build a camera that could operate autonomously for long periods, the researchers needed a device that could harvest energy underwater on its own while consuming very little power.

The camera acquires energy using transducers made from piezoelectric materials that are placed around its exterior. Piezoelectric materials produce an electric signal when a mechanical force is applied to them. When a sound wave traveling through the water hits the transducers, they vibrate and convert that mechanical energy into electrical energy.

Those sound waves could come from any source, like a passing ship or marine life. The camera stores harvested energy until it has built up enough to power the electronics that take photos and communicate data.
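The store-then-spend behavior described above can be pictured with a toy simulation. This is only a sketch under stated assumptions: the function name `simulate_harvest`, the energy units, and the numbers are hypothetical and do not come from the researchers' design.

```python
# Toy model of "harvest until there is enough energy, then take a photo".
# All names, units, and values are illustrative assumptions, not measured data.
def simulate_harvest(harvested_per_step, capture_cost):
    """Return the steps at which a capture fires and the leftover energy."""
    stored = 0.0
    capture_steps = []
    for step, energy in enumerate(harvested_per_step):
        stored += energy                 # energy converted from incoming sound
        if stored >= capture_cost:       # enough built up to run the electronics
            stored -= capture_cost       # spend it on one capture
            capture_steps.append(step)
    return capture_steps, stored


# Seven time steps of steady sound, one arbitrary unit harvested per step.
captures, leftover = simulate_harvest([1, 1, 1, 1, 1, 1, 1], capture_cost=3)
print(captures, leftover)
```

In this toy run the camera fires every third step, which illustrates why a weaker sound source would simply mean longer gaps between photos rather than a dead device.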

To keep power consumption as low as possible, the researchers used off-the-shelf, ultra-low-power imaging sensors. But these sensors only capture grayscale images. And since most underwater environments lack a light source, they needed to develop a low-power flash, too.

“We were trying to minimize the hardware as much as possible, and that creates new constraints on how to build the system, send information, and perform image reconstruction. It took a fair amount of creativity to figure out how to do this,” Adib says.

They solved both problems simultaneously using red, green, and blue LEDs. When the camera captures an image, it shines a red LED and then uses image sensors to take the photo. It repeats the same process with green and blue LEDs.

Even though the image looks black and white, the red, green, and blue colored light is reflected in the white part of each photo, Akbar explains. When the image data are combined in post-processing, the color image can be reconstructed.

“When we were kids in art class, we were taught that we could make all colors using three basic colors. The same rules follow for color images we see on our computers. We just need red, green, and blue — these three channels — to construct color images,” he says.
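The channel-combining step can be sketched in a few lines. This is a minimal illustration, assuming each exposure arrives as a grid of brightness values from 0 to 255; the helper name `combine_channels` and the sample pixel values are hypothetical, and the team's actual post-processing is likely more involved.

```python
# Merge three grayscale exposures (taken under red, green, and blue light)
# into one color image. Each frame is a list of rows of 0-255 brightness values.
# The function name and data layout are assumptions made for illustration.
def combine_channels(red_frame, green_frame, blue_frame):
    if not (len(red_frame) == len(green_frame) == len(blue_frame)):
        raise ValueError("frames must have the same number of rows")
    color_image = []
    for r_row, g_row, b_row in zip(red_frame, green_frame, blue_frame):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("rows must have the same width")
        # Each output pixel is an (R, G, B) triple built from the three exposures.
        color_image.append(list(zip(r_row, g_row, b_row)))
    return color_image


# Toy 1x2 scene: the left pixel reflects mostly red light, the right mostly blue.
red = [[200, 10]]
green = [[30, 10]]
blue = [[20, 220]]
image = combine_channels(red, green, blue)
print(image)
```

Each grayscale frame on its own carries no color, but the brightness recorded under each LED acts as that color's channel once the three are stacked.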

Fadel Adib (left), associate professor in the Department of Electrical Engineering and Computer Science and director of the Signal Kinetics group in the MIT Media Lab, and Research Assistant Waleed Akbar display the battery-free wireless underwater camera that their group developed. Image: Adam Glanzman

Sending data with sound

Once image data are captured, they are encoded as bits (1s and 0s) and sent to a receiver one bit at a time using a process called underwater backscatter. The receiver transmits sound waves through the water to the camera, which acts as a mirror to reflect those waves. The camera either reflects a wave back to the receiver or changes its mirror to an absorber so that it does not reflect back.

A hydrophone next to the transmitter senses if a signal is reflected back from the camera. If it receives a signal, that is a bit-1, and if there is no signal, that is a bit-0. The system uses this binary information to reconstruct and post-process the image.
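The reflect-or-absorb scheme described above amounts to a simple on-off signal, which can be sketched as follows. This is a rough illustration only: the function names, the echo strengths, the detection threshold, and the most-significant-bit-first ordering are all assumptions, not details of the actual system.

```python
# Sketch of sending one pixel value with reflect/absorb signaling.
# Echo strengths, the threshold, and the bit order are illustrative assumptions.
def byte_to_bits(value):
    """Split a 0-255 value into 8 bits, most significant bit first."""
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]


def backscatter_states(bits):
    """Camera side: reflect the incoming wave for a 1, absorb it for a 0."""
    return ["reflect" if bit else "absorb" for bit in bits]


def decode_bits(echo_levels, threshold):
    """Receiver side: a strong echo at the hydrophone is a 1, otherwise a 0."""
    return [1 if level > threshold else 0 for level in echo_levels]


def bits_to_byte(bits):
    """Reassemble 8 bits (most significant first) into a 0-255 value."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value


pixel = 165                                   # one grayscale pixel value
states = backscatter_states(byte_to_bits(pixel))
# Hypothetical echo strengths heard by the hydrophone for each state.
echoes = [1.0 if state == "reflect" else 0.1 for state in states]
recovered = bits_to_byte(decode_bits(echoes, threshold=0.5))
print(states, recovered)
```

The camera never generates its own sound in this scheme; it only toggles between two states, which is consistent with the low power figures the researchers report.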

“This whole process, since it just requires a single switch to convert the device from a nonreflective state to a reflective state, consumes five orders of magnitude less power than typical underwater communications systems,” Afzal says.

The researchers tested the camera in several underwater environments. In one, they captured color images of plastic bottles floating in a New Hampshire pond. They were also able to take such high-quality photos of an African starfish that tiny tubercles along its arms were clearly visible. The device was also effective at repeatedly imaging the underwater plant Aponogeton ulvaceus in a dark environment over the course of a week to monitor its growth.

Now that they have demonstrated a working prototype, the researchers plan to enhance the device so it is practical for deployment in real-world settings. They want to increase the camera’s memory so it could capture photos in real time, stream images, or even shoot underwater video.

They also want to extend the camera’s range. They successfully transmitted data 40 meters from the receiver, but pushing that range wider would enable the camera to be used in more underwater settings.

“This will open up great opportunities for research both in low-power IoT devices as well as underwater monitoring and research,” says Haitham Al-Hassanieh, an assistant professor of electrical and computer engineering at the University of Illinois Urbana-Champaign, who was not involved with this research.

This research is supported, in part, by the Office of Naval Research, the Sloan Research Fellowship, the National Science Foundation, the MIT Media Lab, and the Doherty Chair in Ocean Utilization.
