Prof Brendan Englot, from Stevens Institute of Technology, discusses the challenges in perception and decision-making for underwater robots – especially in the field. He discusses ongoing research using the BlueROV platform and autonomous driving simulators.

Brendan Englot

Brendan Englot received his S.B., S.M., and Ph.D. degrees in mechanical engineering from the Massachusetts Institute of Technology in 2007, 2009, and 2012, respectively. He is currently an Associate Professor with the Department of Mechanical Engineering at Stevens Institute of Technology in Hoboken, New Jersey. At Stevens, he also serves as interim director of the Stevens Institute for Artificial Intelligence. He is interested in perception, planning, optimization, and control that enable mobile robots to achieve robust autonomy in complex physical environments, and his recent work has considered sensing tasks motivated by underwater surveillance and inspection applications, and path planning with multiple objectives, unreliable sensors, and imprecise maps.


transcript

[00:00:00]

Lilly: Hi, welcome to the Robohub podcast. Would you mind introducing yourself?

Brendan Englot: Sure. My name’s Brendan Englot. I’m an associate professor of mechanical engineering at Stevens Institute of Technology.

Lilly: Cool. And can you tell us a little bit about your lab group and what sort of research you’re working on or what sort of classes you’re teaching, anything like that?

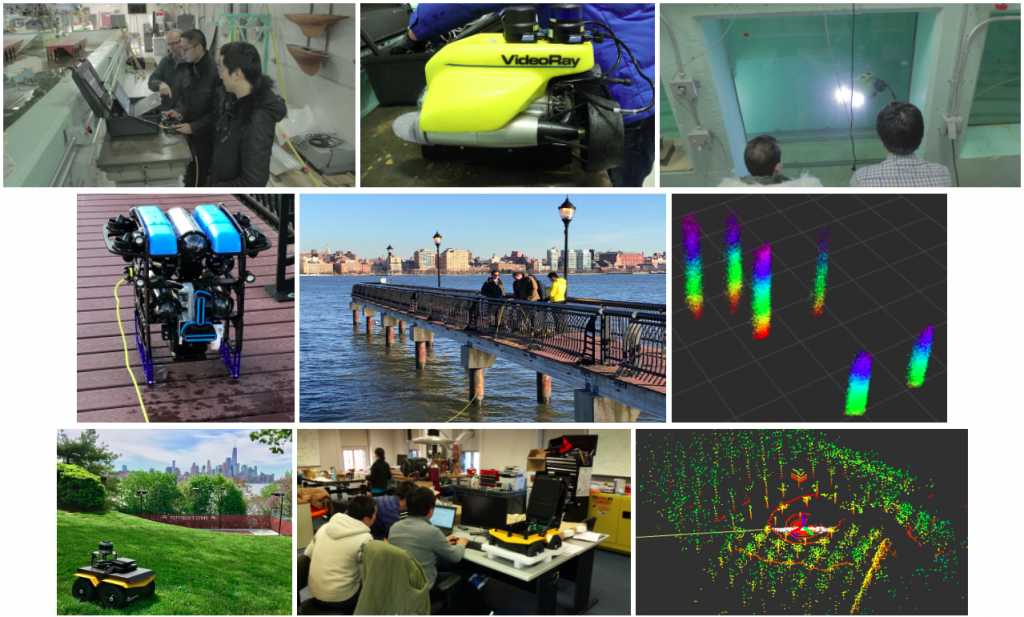

Brendan Englot: Yeah, certainly. My research lab, which has been in existence for almost eight years now, is called the Robust Field Autonomy Lab. That’s kind of an aspirational name, reflecting the fact that we would like mobile robotic systems to achieve robust levels of autonomy and self-reliance in challenging field environments.

And in particular, one of the toughest environments that we focus on is underwater. We would really like to be able to equip mobile underwater robots with the perceptual and decision-making capabilities needed to operate reliably in cluttered underwater environments, where they have to operate in close proximity to other structures or other robots.

Our work also encompasses other types of platforms. We also study ground robotics, and we think about many instances in which ground robots might be GPS-denied. They might have to go off-road, underground, indoors, and outdoors, so they may not have a reliable position fix. They may not have a very structured environment where it’s obvious which areas are traversable.

So across both of those domains, we’re really interested in perception and decision making, and we would like to improve the situational awareness of these robots and also improve the intelligence and the reliability of their decision making.

Lilly: So as a field robotics researcher, can you talk a little bit about the challenges, both technically in the actual research elements and sort of logistically of doing field robotics?

Brendan Englot: Yeah, absolutely. It’s a humbling experience to take your systems out into the field. You’ve tested them in simulation and they worked perfectly; you’ve tested them in the lab and they work perfectly; and you’ll always encounter some unique combination of circumstances in the field that shines a light on new failure modes.

So trying to imagine every possible failure mode and be prepared for it is, I think, one of the biggest challenges of field robotics, along with getting the most out of the time you spend in the field. With underwater robots it’s especially challenging, because it’s hard to practice what you’re doing and create the same conditions in the lab.

We have access to a water tank where we can try to do that. Even then, we work a lot with acoustic perceptual and navigation sensors, and the performance of those sensors is different in the tank. We really only get to observe the true conditions when we’re in the field, and that is very precious time: when all the conditions are cooperating, when you have the right tides and the right weather, and everything is able to run smoothly and you can learn from all of the data that you’re gathering.

So every hour of data that you can get under those conditions in the field, data that will really help support your further research, is precious. Being well prepared for that is, I guess, as much of a science as doing the research itself. Probably the most challenging thing is figuring out the perfect ground control station to give you everything you need at the field experiment site: laptops, computation, power. You may not be in a location that has plug-in power.

How much power are you going to need, and how do you bring the required resources with you? Even things as simple as being able to see your laptop screen, managing your exposure to the elements, working comfortably and productively, and managing all of those [00:05:00] conditions of the outdoor environment are really challenging. But it’s also really fun. I think it’s a very exciting space to be working in, because there are still so many unsolved problems.

Lilly: Yeah. And what are some of those? What are some of the unsolved problems that are the most exciting to you?

Brendan Englot: Well, right now I would say, in our region of the US in particular (I’ve spent most of my career working in the Northeastern United States), we do not have water that is clear enough to see well with a camera, even with very good illumination. In many situations you really can only see a few inches in front of the camera, and you need to rely on other forms of perceptual sensing to build the situational awareness you need to operate in clutter.

So we rely a lot on sonar. But even when you have the very best available sonars, trying to create the same situational awareness underwater that a LIDAR-equipped ground vehicle or a LIDAR- and camera-equipped drone would have is still kind of an open challenge when you’re in a marine environment that has very high turbidity and you can’t see clearly.

Lilly: I wanted to go back a little bit. You mentioned earlier that every hour of data you can get is very precious. How do you best capitalize on the limited data that you have, especially if you’re working on something like decision making, where once you’ve made a decision, you can’t take accurate measurements of any of the decisions you didn’t make?

Brendan Englot: Yeah, that’s a great question. Research involving robot decision making is especially hard in that respect, because you would like to explore different scenarios that will unfold differently based on the decisions that you make. So there is only a limited amount we can do there.

To give our robots some additional exposure to decision making, we also rely on simulators. The pandemic was actually a big motivating factor to really see what we could get out of a simulator. We have been working a lot with the suite of tools available in ROS and Gazebo, using tools like the UUV Simulator, which is a Gazebo-based underwater robot simulation.

The research community has developed some very nice high-fidelity simulation capabilities in there, including the ability to simulate sonar imagery and different water conditions. We can actually run our simultaneous localization and mapping (SLAM) algorithms in the simulator, and the same parameters and tuning will run in the field the same way they’ve been tuned in the simulator.

So that helps with the decision-making part. On the perceptual side of things, we can find ways to derive a lot of utility out of one limited dataset. One way we’ve done that lately relates to multi-robot navigation and multi-robot SLAM, which we’re also very interested in. We realize that for underwater robots to really be impactful, they’re probably going to have to work in groups or teams to tackle complex challenges in marine environments.

And so we’ve been pretty successful at taking limited single-robot datasets that we’ve gathered in the field in nice operating conditions and creating synthetic multi-robot datasets out of them. For example, we might have three different trajectories that a single robot traversed through a marine environment, with different starting and ending locations.

We can create a synthetic multi-robot dataset where we pretend that those all take place at the same time, even creating the potential for these robots to exchange information and share sensor observations. And we’ve even been able to explore some of the decision making related to that, given the very limited acoustic bandwidth.

If you’re an underwater system and you’re using an acoustic modem to transmit data wirelessly without having to come to the surface, that bandwidth is very limited, and you want to make sure you put it to the best use. So we’ve even been able to explore some aspects of decision making regarding when do I send a message, and who do I send it to, just by playing back, reinventing, and making additional use of those previous datasets.
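The idea of turning several single-robot runs into a synthetic team can be sketched as a simple replay transform: each recorded run is treated as a different robot, re-timed to start at t = 0, and merged into one time-ordered stream. This is a minimal sketch under stated assumptions, not the lab’s tooling; the `Frame` fields (`pose`, `scan_id`) and the choice to leave poses untouched are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Frame:
    t: float                          # timestamp from the original run, in seconds
    pose: Tuple[float, float, float]  # hypothetical (x, y, yaw) estimate for this frame
    scan_id: str                      # hypothetical handle to the stored sonar image


def make_synthetic_team(runs: List[List[Frame]]) -> List[Tuple[float, int, Frame]]:
    """Treat each single-robot run as a separate robot: shift every run so it
    starts at t = 0, tag it with a robot id, and merge all frames into one
    time-ordered stream that can be played back as if the robots ran together."""
    merged: List[Tuple[float, int, Frame]] = []
    for robot_id, run in enumerate(runs):
        if not run:
            continue
        t0 = run[0].t
        for f in run:
            shifted = Frame(t=f.t - t0, pose=f.pose, scan_id=f.scan_id)
            merged.append((shifted.t, robot_id, shifted))
    # Sort by time, breaking ties by robot id (Frame objects are never compared).
    merged.sort(key=lambda item: (item[0], item[1]))
    return merged


run_a = [Frame(100.0, (0.0, 0.0, 0.0), "a0"), Frame(101.0, (0.5, 0.0, 0.0), "a1")]
run_b = [Frame(500.0, (10.0, 0.0, 0.0), "b0"), Frame(500.5, (10.0, 0.2, 0.0), "b1")]
team = make_synthetic_team([run_a, run_b])
print([(t, rid, f.scan_id) for t, rid, f in team])
# -> [(0.0, 0, 'a0'), (0.0, 1, 'b0'), (0.5, 1, 'b1'), (1.0, 0, 'a1')]
```

Playing this merged stream back lets a multi-robot SLAM or communication policy see interleaved observations from "robots" that were really the same vehicle on different days.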

Lilly: And can you simulate that messaging in the simulators that you mentioned? Or how much of the sensor suites and everything did you have to add on to existing simulation capabilities?

Brendan Englot: Admittedly, we don’t have the full physics of that captured, and I’ll be the first to admit there are a lot of environmental phenomena that can affect the quality of wireless communication underwater. The physics of [00:10:00] acoustic communication will affect the performance of your comms based on how it’s interacting with the environment: how much water depth you have, where the surrounding structures are, how much reverberation is taking place.

Right now we’re just imposing some pretty simple bandwidth constraints. We’re just assuming we have the same average bandwidth as a wireless acoustic channel, so we can only send so much imagery from one robot to another. It’s a simple bandwidth constraint for now, but we hope we might be able to capture more realistic constraints going forward.
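An average-bandwidth constraint of this kind reduces to a byte budget per time window, and "what do I send" becomes a choice of which queued messages fit in it. The sketch below is illustrative only: the 1 kbit/s link rate, the message names, and the utility scores are hypothetical, and the greedy utility-per-byte rule is a simple heuristic for this knapsack-style choice, not the method described in the interview.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    name: str
    size_bytes: int
    utility: float  # hypothetical score of how useful this data is to the receiver


def byte_budget(avg_bandwidth_bps: float, window_s: float) -> int:
    """Bytes that fit through a channel with the given average bit rate over a window."""
    return int(avg_bandwidth_bps * window_s // 8)


def select_messages(queue: List[Message], budget_bytes: int) -> List[Message]:
    """Greedy heuristic: send the highest utility-per-byte messages first and skip
    anything that no longer fits in the remaining budget."""
    chosen: List[Message] = []
    remaining = budget_bytes
    for msg in sorted(queue, key=lambda m: m.utility / m.size_bytes, reverse=True):
        if msg.size_bytes <= remaining:
            chosen.append(msg)
            remaining -= msg.size_bytes
    return chosen


budget = byte_budget(avg_bandwidth_bps=1000.0, window_s=60.0)  # assumed 1 kbit/s link
queue = [
    Message("full_sonar_image", 50_000, 10.0),
    Message("keyframe_descriptor", 512, 2.0),
    Message("pose_update", 64, 1.0),
]
print(budget, [m.name for m in select_messages(queue, budget)])
# -> 7500 ['pose_update', 'keyframe_descriptor']
```

Under this assumed link, a full sonar image does not fit in a one-minute window at all, which is exactly why the choice of what to send matters.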

Lilly: Cool. And getting back to that decision making, what sort of problems or tasks are your robots seeking to do or solve? And what sort of applications?

Brendan Englot: Yeah, that’s a great question. There are so many potentially relevant applications where I think it would be useful to have one robot, or maybe a team of robots, that could inspect and monitor and then ideally intervene underwater. My original work in this space started when I was a PhD student, where I studied underwater ship hull inspection. That was an application that the US Navy cared very much about at the time, and still does: trying to have an underwater robot that could emulate what a Navy diver does when they search a ship’s hull, looking for any kind of anomalies that might be attached to the hull.

So that sort of complex, challenging inspection problem first motivated my work in this problem space. But beyond inspection, and beyond defense applications, there are other applications as well. There is so much subsea oil and gas production going on right now that requires underwater robots, which are mostly teleoperated at this point. If additional autonomy and intelligence could be added to those systems, so that they could operate without as much direct human intervention and supervision, that could improve the efficiency of those kinds of operations. There is also an increasing amount of offshore infrastructure related to sustainable, renewable energy, such as offshore wind farms.

In my region of the country, new ones are continuously under construction, along with wave energy generation infrastructure. Another area that we’re focused on right now is aquaculture. There’s an increasing amount of offshore infrastructure to support that, and we have a new project that was just funded by the USDA to explore resident robotic systems that could help maintain, clean, and inspect an offshore fish farm, since there is quite a scarcity of those within the United States. I think all of the ones that we have operating offshore are in Hawaii at the moment. So I think there’s definitely some incentive to try to grow the amount of domestic production that happens at offshore fish farms in the US.

Those are a few examples. As we get closer to having a reliable intervention capability, where underwater robots could reliably grasp and manipulate things with increased levels of autonomy, maybe you’d also start to see things like underwater construction and decommissioning of subsea infrastructure happening as well.

So there’s no shortage of interesting challenge problems in that domain.

Lilly: So this would be like underwater robots working together to build these aquaculture farms?

Brendan Englot: Perhaps. Or really, some of the toughest things that we build underwater are the sites associated with oil and gas production, the drilling sites, which can be at very great depths: near the seafloor in the Gulf of Mexico, for example, where you might be thousands of feet down.

It’s a very challenging environment for human divers to operate in and conduct their work safely. So there are a lot of interesting applications there where it could be useful.

Lilly: How different are robotic operations, teleoperated or autonomous, in shallow waters versus deeper waters?

Brendan Englot: That’s a good question. I’ll admit before I answer that most of the work we do is proof-of-concept work in shallow-water environments. We’re working with relatively low-cost platforms. Primarily these days we’re working with the BlueROV platform, which has been a very disruptive, low-cost, and very customizable platform. So we’ve been customizing BlueROVs in many different ways, and we are limited to operating at shallow depths because of that.

I would argue that operating in shallow waters poses a lot of challenges that are unique to that setting, because that’s where you’re always going to be in close proximity to the shore, to structures, to boats, to human activity, and to [00:15:00] surface disturbances. You’ll be affected by the winds and the weather conditions, and there will be problematic currents as well. All of those environmental disturbances are more prevalent near the shore and near the surface, and that’s primarily where I’ve been focused.

There might be different problems operating at greater depths. Certainly you need a much more robustly designed vehicle, and you need to think very carefully about the payloads that it’s carrying and the mission duration. Most likely, if you’re going deep, you’re running a much longer mission, and you really have to design your system carefully and make sure it can handle the mission.

Lilly: That makes sense. That’s super interesting. So what are some of the methodologies or approaches that you currently have that you think are going to be really promising for changing how robots operate, even in these shallow terrains?

Brendan Englot: I would say one of the areas we’ve been most interested in, that we really think could have an impact, is what you might call belief space planning, planning under uncertainty, or active SLAM. It has a lot of different names; maybe the best way to refer to it in this domain would be planning under uncertainty. It may be underutilized right now on real underwater robotic hardware, and if we can get it to work well on real underwater robots, I think it could be very impactful in these near-surface, nearshore environments, where you’re always in close proximity to other obstacles, moving vessels, structures, and other robots, just because localization is so challenging for these underwater robots. If you’re stuck below the surface, you’re GPS-denied, and you have to have some way to keep track of your state. You might be using SLAM. As I mentioned earlier, something we’re really interested in in my lab is developing more reliable sonar-based SLAM, and also SLAM that could be distributed across a multi-robot system.

If we can get that working reliably, then using it to inform our planning and decision making will help keep these robots safer. It will also inform our decisions when we really want to grasp or manipulate something underwater: steering into the right position, and making sure we have enough confidence to be very close to obstacles in this disturbance-filled environment.

I think it has the potential to be really impactful there.
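The core trade-off in planning under uncertainty can be shown with a toy example: a shorter path with nothing to localize against may be worse than a longer one that passes useful features. The sketch below tracks a single scalar position variance with Kalman-style predict/update steps and scores each path as length plus a weighted penalty on its worst uncertainty. The noise values `q` and `r`, the `weight`, and the "fix available" flags are hypothetical; real belief space planners reason over full pose covariances from SLAM, and this is not presented as the lab’s planner.

```python
from typing import List, Sequence


def propagate_variance(fix_available: Sequence[bool], q: float, r: float,
                       var0: float = 0.0) -> List[float]:
    """Scalar Kalman-style position variance along a path: it grows by q each
    step (dead-reckoning drift) and shrinks when a fix with variance r is fused."""
    var = var0
    history = []
    for has_fix in fix_available:
        var += q                          # predict: drift accumulates
        if has_fix:
            var = var * r / (var + r)     # update: fuse a measurement of variance r
        history.append(var)
    return history


def path_cost(fix_available: Sequence[bool], q: float, r: float, weight: float) -> float:
    """Path length (in steps) plus a penalty on the worst uncertainty along the way."""
    return len(fix_available) + weight * max(propagate_variance(fix_available, q, r))


def choose_path(candidates: List[Sequence[bool]], q: float = 0.1, r: float = 0.05,
                weight: float = 10.0) -> int:
    """Index of the candidate with the lowest combined length/uncertainty cost."""
    return min(range(len(candidates)), key=lambda i: path_cost(candidates[i], q, r, weight))


short_blind = [False] * 6             # 6 steps, nothing to localize against
longer_featured = [False, True] * 4   # 8 steps, a usable observation every other step
print(choose_path([short_blind, longer_featured]))  # -> 1
```

Here the blind path costs about 6 + 10 × 0.6 = 12, while the longer path’s variance never exceeds about 0.14, so its cost is about 9.4 and it wins.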

Lilly: Can you talk a little bit more about sonar-based SLAM?

Brendan Englot: Sure. One of the things that’s maybe more unique in that setting is that, for us at least, everything is happening slowly. The robot moves relatively slowly most of the time, maybe a quarter meter per second. Half a meter per second is probably the fastest you would move if you were really in an environment where you’re in close proximity to obstacles.

Because of that, we generate the keyframes that we need for SLAM at a much lower rate. It’s also a very feature-poor, feature-sparse kind of environment, so the perceptual observations that are helpful for SLAM will always be a bit less frequent.

So one unique thing about sonar-based underwater SLAM is that we need to be very selective about which observations we accept, and which potential correspondences between sonar images we accept and introduce into our solution, because in such a feature-sparse setting one bad correspondence could throw off the whole solution.

So things go slowly, we generate keyframes for SLAM at a pretty slow rate, and we’re very, very conservative about accepting correspondences between images as place recognition or loop closure constraints. But because of all that, we can do lots of optimization and down-selection until we’re really, really confident that something is a good match.

So I guess those are the things that uniquely define that problem setting for us and make it an interesting problem to work on.
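Being "very, very conservative" about loop closures can be illustrated with a two-stage gate: a candidate match between sonar keyframes must first register well, and then the relative motion it implies must agree with what dead reckoning predicts. This is a minimal sketch of a common heuristic, not the lab’s pipeline; the `LoopCandidate` fields and all thresholds (`min_inliers`, `max_rmse_m`, `gate_sigmas`) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class LoopCandidate:
    i: int                            # earlier keyframe index
    j: int                            # current keyframe index
    inlier_ratio: float               # fraction of sonar returns that align after registration
    rmse_m: float                     # registration residual, meters
    rel_xy: Tuple[float, float]       # relative translation proposed by registration
    odom_rel_xy: Tuple[float, float]  # same relative translation predicted by dead reckoning
    odom_sigma_m: float               # 1-sigma uncertainty of that prediction


def accept(c: LoopCandidate, min_inliers: float = 0.8, max_rmse_m: float = 0.2,
           gate_sigmas: float = 3.0) -> bool:
    """Accept only candidates that register well AND agree with odometry."""
    if c.inlier_ratio < min_inliers or c.rmse_m > max_rmse_m:
        return False
    dx = c.rel_xy[0] - c.odom_rel_xy[0]
    dy = c.rel_xy[1] - c.odom_rel_xy[1]
    # Reject closures whose implied motion is too far from the odometry prediction.
    return math.hypot(dx, dy) <= gate_sigmas * c.odom_sigma_m


candidates = [
    LoopCandidate(3, 40, 0.90, 0.10, (1.0, 0.0), (1.2, 0.1), 0.5),  # good fit, consistent
    LoopCandidate(5, 41, 0.60, 0.10, (1.0, 0.0), (1.0, 0.0), 0.5),  # too few inliers
    LoopCandidate(7, 42, 0.95, 0.05, (4.0, 0.0), (0.0, 0.0), 0.5),  # fits well, contradicts odometry
]
print([(c.i, c.j) for c in candidates if accept(c)])  # -> [(3, 40)]
```

The third candidate shows why the consistency check matters: a tight registration on a repetitive, feature-sparse scene can still be the wrong place.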

Lilly: And regarding the pace of the missions that you’re considering: I imagine that in between being able to do these optimizations and loop closures, you’re accumulating error, but the robots are probably moving fairly slowly. So what’s the time scale that you’re thinking about in terms of a full mission?

Brendan Englot: Hmm. So first, the limiting factor, even if we were able to move faster, is that we get our sonar imagery at a rate of [00:20:00] about 10 Hz. But the keyframes that we identify and introduce into our SLAM solution, we generally generate at a rate of, oh, I don’t know, anywhere from 2 Hz to half a hertz, depending, because we’re usually moving pretty slowly.

Some of this is informed by the fact that we’re often doing inspection missions. Although we are aiming and working toward underwater manipulation and intervention eventually, these days it’s really more like mapping, surveying, patrolling, and inspection. Those are the real applications that we can achieve with the systems that we have. Because the focus is on building the most accurate, high-resolution maps possible from the sonar data that we have, that’s one reason why we’re moving at a relatively slow pace: it’s really the quality of the map that we care about.
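The rates quoted above imply a concrete keyframe spacing: at 0.25 to 0.5 m/s with keyframes at 0.5 to 2 Hz, consecutive keyframes are roughly 0.125 m (0.25 / 2) to 1 m (0.5 / 0.5) apart, drawn from a 10 Hz sonar stream. One common way to get that behavior is to promote a frame to a keyframe only after the robot has moved or turned enough since the last one. The sketch below assumes that motion-gated scheme and hypothetical thresholds (`min_dist_m`, `min_yaw_rad`); the interview does not say exactly how the lab selects keyframes.

```python
import math
from typing import List, Tuple

Pose2D = Tuple[float, float, float]  # (x [m], y [m], yaw [rad])


def select_keyframes(poses: List[Pose2D], min_dist_m: float = 0.5,
                     min_yaw_rad: float = math.radians(10)) -> List[int]:
    """Indices of frames kept as SLAM keyframes: the first frame, then any frame
    that moved or turned enough since the last keyframe."""
    if not poses:
        return []
    keep = [0]
    for k in range(1, len(poses)):
        x0, y0, yaw0 = poses[keep[-1]]
        x, y, yaw = poses[k]
        dyaw = math.atan2(math.sin(yaw - yaw0), math.cos(yaw - yaw0))  # wrap to [-pi, pi]
        if math.hypot(x - x0, y - y0) >= min_dist_m or abs(dyaw) >= min_yaw_rad:
            keep.append(k)
    return keep


# 10 s of 10 Hz sonar frames while driving straight at 0.25 m/s (0.025 m per frame).
straight = [(k / 40, 0.0, 0.0) for k in range(101)]
print(select_keyframes(straight))  # -> [0, 20, 40, 60, 80, 100]
```

With a 0.5 m spacing at 0.25 m/s, this keeps one frame in twenty, i.e. keyframes at 0.5 Hz, the low end of the range Englot mentions.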

And we’re now beginning to think about how we can produce dense three-dimensional maps with the sonar systems on our robot. One fairly unique thing we’re doing now is that we actually have two imaging sonars oriented orthogonal to one another, working as a stereo pair, to try to produce dense 3D point clouds from the sonar imagery so that we can build higher-definition 3D maps.

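Why an orthogonal pair helps: a 2D imaging sonar reports range and horizontal bearing but cannot tell where within its vertical aperture a return came from. A second sonar rotated 90° reports bearing in the vertical plane instead. The sketch below assumes both sonars share the same origin, so a target has the same range in both images; if the horizontal bearing is θ = atan2(y, x) and the vertical bearing is ψ = atan2(z, x), then y = x·tan θ, z = x·tan ψ, and r² = x²(1 + tan²θ + tan²ψ). The nearest-range pairing in `match_by_range` is a hypothetical, deliberately naive stand-in for real feature association, which is ambiguous in cluttered scenes.

```python
import math
from typing import List, Sequence, Tuple


def triangulate(r: float, theta: float, psi: float) -> Tuple[float, float, float]:
    """3D point from a range shared by both sonars, the horizontal sonar's bearing
    theta = atan2(y, x), and the vertical sonar's bearing psi = atan2(z, x)."""
    t1, t2 = math.tan(theta), math.tan(psi)
    x = r / math.sqrt(1.0 + t1 * t1 + t2 * t2)  # forward-looking sonars: x > 0
    return (x, x * t1, x * t2)


def match_by_range(horiz: Sequence[Tuple[float, float]],
                   vert: Sequence[Tuple[float, float]],
                   tol_m: float = 0.05) -> List[Tuple[float, float, float]]:
    """Pair (range, bearing) returns from the two sonars whose ranges agree within tol_m."""
    points = []
    for r_h, theta in horiz:
        best = min(vert, key=lambda rv: abs(rv[0] - r_h), default=None)
        if best is not None and abs(best[0] - r_h) <= tol_m:
            r = 0.5 * (r_h + best[0])  # average the two range estimates
            points.append(triangulate(r, theta, best[1]))
    return points


# A target at (3.0, 1.0, 0.5) m seen by both sonars.
r = math.sqrt(3.0**2 + 1.0**2 + 0.5**2)
theta, psi = math.atan2(1.0, 3.0), math.atan2(0.5, 3.0)
print([round(v, 6) for v in triangulate(r, theta, psi)])  # -> [3.0, 1.0, 0.5]
```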

Lilly: Cool. Interesting. Actually, one of the questions I was going to ask is about the platform you mentioned you’ve been using, which is fairly disruptive in underwater robotics. Is there anything you feel it’s missing, that you wish you had, or that you wish was being developed?

Brendan Englot: Well, you can always make these systems better by improving their ability to do dead reckoning when you don’t have helpful perceptual information. If we really want autonomous systems to be reliable in a whole variety of environments, they need to be able to operate for long periods of time without useful imagery and without achieving a loop closure. So it helps if you can fit good inertial navigation sensors onto these systems; it’s a matter of size, weight, and cost.

We’re actually pretty excited: we very recently integrated a fiber optic gyro onto a BlueROV, the limitation being the diameter of the electronics enclosures that you can use on that system. We tried to fit the very best-performing gyro that we could, and that has been such a difference maker in terms of how long we can operate, and in the rate of drift and error that accumulates when we’re trying to navigate in the absence of SLAM and helpful perceptual loop closures.

Prior to that, we did all of our dead reckoning using just an acoustic navigation sensor called a Doppler velocity log, or DVL, which does seafloor-relative odometry, plus a MEMS gyro. The upgrade from a MEMS gyro to a fiber optic gyro was a real difference maker.

And of course you can go further up from there. Folks who do really deep-water, long-duration missions in very feature-poor environments, where you could never use SLAM, have no choice but to rely on high-performing INS systems, where you can get almost any level of performance for a certain cost.

So the question is where in that trade-off space we want to be, to be able to deploy large quantities of these systems at relatively low cost. At least now we’re at a point where, with a low-cost, customizable system like the BlueROV, you can add something like a fiber optic gyro.
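Why a better gyro matters so much for DVL-based dead reckoning: a constant gyro bias b makes the heading error grow linearly in time, so the cross-track position error grows roughly as ½·v·b·t². The sketch below integrates a DVL forward speed with a biased gyro heading in 2D and compares two biases, an illustrative 10 deg/h and 0.1 deg/h chosen only to show the scaling, not quoted specs for any particular MEMS gyro or FOG. It ignores DVL velocity errors and every other error source. For a one-hour straight run at 0.5 m/s, the small-angle formula gives about 157 m of drift for the larger bias and about 1.6 m for the smaller one.

```python
import math


def dead_reckon(v_body: float, yaw_rate_true: float, gyro_bias: float,
                duration_s: float, dt: float = 0.1):
    """Integrate a DVL forward speed with a gyro-derived heading in 2D.
    Returns (estimated_xy, true_xy) so the drift can be compared."""
    steps = int(round(duration_s / dt))
    yaw_est = yaw_true = 0.0
    est = [0.0, 0.0]
    true = [0.0, 0.0]
    for _ in range(steps):
        yaw_true += yaw_rate_true * dt
        yaw_est += (yaw_rate_true + gyro_bias) * dt  # gyro reads true rate plus a constant bias
        est[0] += v_body * math.cos(yaw_est) * dt
        est[1] += v_body * math.sin(yaw_est) * dt
        true[0] += v_body * math.cos(yaw_true) * dt
        true[1] += v_body * math.sin(yaw_true) * dt
    return est, true


def drift_m(bias_deg_per_hr: float, duration_s: float = 3600.0, speed: float = 0.5) -> float:
    """Position error after a straight run with the given (illustrative) gyro bias."""
    bias = math.radians(bias_deg_per_hr) / 3600.0  # rad/s
    est, true = dead_reckon(speed, 0.0, bias, duration_s)
    return math.hypot(est[0] - true[0], est[1] - true[1])


for b in (10.0, 0.1):
    print(f"bias {b} deg/h -> {drift_m(b):.1f} m after 1 h at 0.5 m/s")
```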

Lilly: Yeah. Cool. And when you talk about deploying lots of these systems, what size of team are you thinking about for the ideal case? Single digits? Hundreds?

Brendan Englot: I guess one benchmark that I’ve always kept in mind comes from my time as a PhD student. I was very lucky to work on a relatively applied project where we had the opportunity to talk to the Navy divers who were really doing the underwater inspections. Their performance was being compared against our robotic substitute, which of course was much slower and not capable of exceeding the performance of a Navy diver. But we heard from them that you need a team of 16 divers to inspect an aircraft carrier, which is an enormous ship.

And it makes sense that you would need a team of that size to do it in a reasonable amount of time. That’s the quantity I’m thinking of now as a benchmark for how many robots you would need to inspect a very large piece of [00:25:00] infrastructure, or a whole port or harbor region of a city.

You’d probably need somewhere in the teens of robots. So that’s the quantity I’m thinking of as an upper bound in the short term.

Lilly: Okay. Cool. Good to know. We’ve talked a lot about underwater robotics, but you mentioned earlier that this could be applied to any sort of GPS-denied environment in many ways. Does your group tend to constrain itself to underwater robotics, just because that’s sort of the core of what you work on?

And do you anticipate scaling out to other types of environments as well? Which of those are you excited about?

Brendan Englot: Yeah. We are active in our work with ground platforms as well. Because I did my PhD studies in underwater robotics, that felt closest to home, and that’s where I started from when I started my own lab about eight years ago. Originally we started working with LIDAR-equipped ground platforms really just as a proxy platform: a range-sensing robot whose LIDAR data was comparable to our sonar data.

But it has really evolved and become its own area of research in our lab. We work a lot with the Clearpath Jackal platform and the Velodyne Puck, and find that that’s a really nice, versatile combination: all the capabilities of a self-driving car contained in a small package.

In our case, our campus is in a very dynamic urban setting, and safety is a concern. We want to be able to take our platforms out into the city, drive them around, and not have them pose a safety hazard to anyone. So we now have three LIDAR-equipped Jackal robots in our lab that we use in our ground robotics research.

There are problems unique to that setting that we’ve been looking at. There, multi-robot SLAM is challenging because of the embarrassment of riches that you have: dense volumes of LIDAR data streaming in, where you would love to be able to share all that information across the team.

But even with Wi-Fi, you can’t do it; you need to be selective. So, in both settings actually, ground and underwater, we’ve been thinking about ways you could use compact descriptors that are easier to exchange and could let you decide whether you want to see all of the information another robot has and try to establish inter-robot measurement constraints for SLAM.

Another thing that’s challenging about ground robotics is just understanding the safety and navigability of the terrain that you’re situated on. Even if it might seem simpler, with maybe fewer degrees of freedom, understanding the traversability of the terrain is an ongoing challenge and can be a dynamic situation.

So having reliable mapping and classification algorithms for that is important. We’re also really interested in decision making in that setting, where we begin to approach what we’re seeing with autonomous cars, but off-road, and in settings where you’re going inside and outside of buildings or into underground facilities. We’ve been relying increasingly on simulators to help train reinforcement learning systems to make decisions in that setting.

That’s because those settings on the ground are highly dynamic environments, full of other vehicles and people, with scenes that are way more dynamic than what you’d find underwater. We find that those are really exciting stochastic environments where you really may need something like reinforcement learning, because the environment will be very complex and you may need to learn from experience.

So, even departing from our Jackal platforms, we’ve been using simulators like CARLA to try to create synthetic, cluttered driving scenarios that we can explore and use for training reinforcement learning algorithms. There’s been a little bit of a departure from being fully embedded in the toughest parts of the field to doing a little more work with simulators for reinforcement learning.
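The compact-descriptor idea Englot describes for multi-robot SLAM (exchange a small summary first, request the full scan only if it looks like a match) can be sketched with a deliberately crude descriptor: a normalized histogram of point ranges, which ignores bearing and is therefore unaffected by the robot’s heading. Everything here is a hypothetical stand-in, including `range_histogram`, the 16 bins, the 20 m cutoff, and the 0.9 similarity threshold; it is not the descriptor the lab uses, and real place-recognition descriptors are far more discriminative.

```python
import math
from typing import List, Sequence, Tuple


def range_histogram(points: Sequence[Tuple[float, float]], max_range: float = 20.0,
                    bins: int = 16) -> List[float]:
    """Compact, rotation-invariant scan summary: normalized histogram of point ranges."""
    hist = [0.0] * bins
    for x, y in points:
        r = math.hypot(x, y)
        if r < max_range:
            hist[int(r / max_range * bins)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def should_request_full_scan(mine: Sequence[float], theirs: Sequence[float],
                             threshold: float = 0.9) -> bool:
    """Only ask a teammate for its full point cloud when the summaries look alike."""
    return cosine(mine, theirs) >= threshold


def ring(radius: float, offset: float = 0.0, n: int = 36) -> List[Tuple[float, float]]:
    """Synthetic test scan: n points on a circle around the sensor."""
    return [(radius * math.cos(offset + 2 * math.pi * k / n),
             radius * math.sin(offset + 2 * math.pi * k / n)) for k in range(n)]


a = range_histogram(ring(5.5))
b = range_histogram(ring(5.5, offset=0.3))  # same place, robot rotated
c = range_histogram(ring(15.5))             # different place
print(should_request_full_scan(a, b), should_request_full_scan(a, c))  # -> True False
```

Only the 16-number summaries cross the network until a likely match is found; the full point cloud is requested afterwards.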

Lilly: I’m not familiar with CARLA. What is it?

Brendan Englot: It’s an urban driving simulator. You could basically use it in place of Gazebo, let’s say, as a simulator that’s very specifically tailored toward road vehicles. We’ve tried to customize it, and we have actually ported our Jackal robots into CARLA. It was not the easiest thing to do, but if you’re interested in road vehicles and situations where you’re paying attention to and obeying the rules of the road, it’s a fantastic high-fidelity simulator for capturing all kinds of interesting urban driving scenarios [00:30:00] involving other vehicles, traffic, pedestrians, and different weather conditions. And it’s free and open source, so it’s definitely worth taking a look at if you’re interested in driving scenarios.

Lilly: Speaking of urban driving and pedestrians, since your lab group does so much with uncertainty, do you think at all about modeling people and what they will do? Or do you leave that to others? How does that work in a simulator? Are we close to being able to model people?

Brendan Englot: Yeah, I have not gotten to that yet. There definitely are a lot of researchers in the robotics community who are thinking about those problems of detecting, tracking, and also predicting pedestrian behavior. I think the prediction element is maybe one of the most exciting parts, so that vehicles can plan safely and reliably, far enough ahead, to make decisions in those really cluttered urban settings.

I can’t claim to be contributing anything new in that area, but I’m paying close attention to it out of interest, because it certainly will be an important component of a fully autonomous system.

Lilly: Fascinating. Getting back to reinforcement learning and working in simulators: you were saying earlier that there’s an embarrassment of riches when working with sensor data specifically, but when working with simulators, do you find that you have enough different types of environments to test in, and enough different training settings, that you think your learned decision-making methods are going to be reliable when you move them into the field?

Brendan Englot: That’s a great question, and I think that’s an active area of inquiry in the robotics community, and in our lab as well. Ideally, we would love to capture the minimal amount of training, ideally simulated training, that a system might need to be fully equipped to go out into the real world.

And we have done some work in that area, trying to understand: can we train a system to do planning and decision making under uncertainty in CARLA or in Gazebo, then transfer that to hardware and have the hardware go out and make decisions using a policy that it learned completely in the simulator?

Sometimes the answer is yes, and we’re very excited about that, but it’s important to note that many, many times the answer is no. So we’re trying to better define the boundaries there and get a better understanding of when additional training is needed, and of how to design these systems so that the whole process can be streamlined.

That’s an exciting area of inquiry that I think a lot of folks in robotics are paying attention to right now.

Lilly: Well, I just have one last question: did you always want to do robotics? Was this a straight path in your career, or how did you get excited about it?

Brendan Englot: It wasn’t something I always wanted to do, mainly because it wasn’t something I always knew about. I guess FIRST Robotics competitions were not as prevalent when I was in high school or middle school; it’s great that they’re so prevalent now. It was really when I was an undergraduate that I got my first exposure to robotics, and I was just lucky that, early enough in my studies, I took an intro to robotics class. I did my undergraduate studies in mechanical engineering at MIT, and I was very lucky to have two world-famous roboticists teaching my intro to robotics class, John Leonard and Harry Asada. I had a chance to do some undergraduate research with Professor Asada after that.

So that was my first introduction to robotics, at maybe the junior level of my undergraduate studies. But after that I was hooked, and I wanted to keep working in that area in graduate studies and from there.

Lilly: And the rest is history.

Brendan Englot: Yeah.

Lilly: Okay, great. Well, thank you so much for speaking with me. This is very interesting.

Brendan Englot: Yeah, my pleasure. Great speaking with you.

Lilly: Okay.



Lilly Clark

