Researchers Developed SmoothNets For Optimizing Convolutional Neural Network (CNN) Architecture Design For Differentially Private Deep Learning

Differential privacy (DP) is used in machine learning to protect the confidentiality of the individual records that make up a training dataset. The most widely used algorithm for training deep neural networks with DP is Differentially Private Stochastic Gradient Descent (DP-SGD), which clips each per-sample gradient and adds noise to the result. As a consequence, model utility decreases compared to non-private training.
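To make the clipping and noising step concrete, here is a minimal, dependency-free sketch of a single DP-SGD update. It is an illustration under stated assumptions, not the authors' implementation: the function names, the plain-list representation of gradients, and the parameters `max_norm` and `noise_multiplier` are all hypothetical, and no privacy accounting is performed.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale one per-sample gradient so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_sample_grads, lr, max_norm, noise_multiplier, rng=None):
    """One illustrative DP-SGD update (hypothetical helper, no privacy accounting).

    1. Clip every per-sample gradient to L2 norm <= max_norm.
    2. Sum the clipped gradients.
    3. Add Gaussian noise with standard deviation noise_multiplier * max_norm.
    4. Average over the batch and take a gradient step.
    """
    rng = rng or random.Random()
    batch_size = len(per_sample_grads)
    clipped = [clip_gradient(g, max_norm) for g in per_sample_grads]
    summed = [sum(column) for column in zip(*clipped)]
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.3, 0.4]]  # two samples, two parameters
    print(dp_sgd_step([0.0, 0.0], grads, lr=1.0, max_norm=1.0,
                      noise_multiplier=1.0, rng=random.Random(0)))
```

Clipping bounds how much any single sample can move the model, and the noise scale is tied to that bound. Both steps distort the gradient, which is where the utility loss comes from.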

There are two main approaches to dealing with the performance loss caused by DP-SGD: architectural modifications and training methods. The first aims to make the network more robust to the challenges of DP-SGD by modifying the model’s architecture. The second focuses on finding a training strategy that minimizes the negative effect of DP-SGD on accuracy. Only a few studies have examined concrete model design choices that offer robustness against utility reductions under DP-SGD training. In this context, a German research team has recently proposed SmoothNet, a new deep architecture designed to reduce this performance loss.

The authors evaluated individual components of widely used deep learning architectures with respect to their influence on DP-SGD training performance. Based on this study, they selected the best-performing components and assembled a new model architecture called SmoothNet, which produces state-of-the-art results among differentially privately trained models on the CIFAR-10 and ImageNette reference datasets. The component evaluation showed that the width-to-depth ratio correlates highly with model performance, and that the optimal width-to-depth ratio is higher for private training than for non-private training. In addition, residual and dense connections are beneficial for models trained with DP-SGD. The authors also concluded that the SELU activation function performs better than ReLU. Finally, max pooling showed superior results compared to other pooling functions.
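For readers unfamiliar with the two activation functions compared above, the following short sketch shows their standard definitions in plain Python. The constants are the usual SELU values; the function names are chosen here for illustration only.

```python
import math

# Standard SELU constants (self-normalizing networks).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def relu(x):
    """ReLU: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def selu(x):
    """SELU: scaled identity for positive inputs, scaled exponential for the rest."""
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


if __name__ == "__main__":
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  selu={selu(x):.4f}")
```

Unlike ReLU, SELU produces non-zero outputs for negative inputs, saturating at roughly -1.76 for very negative values.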

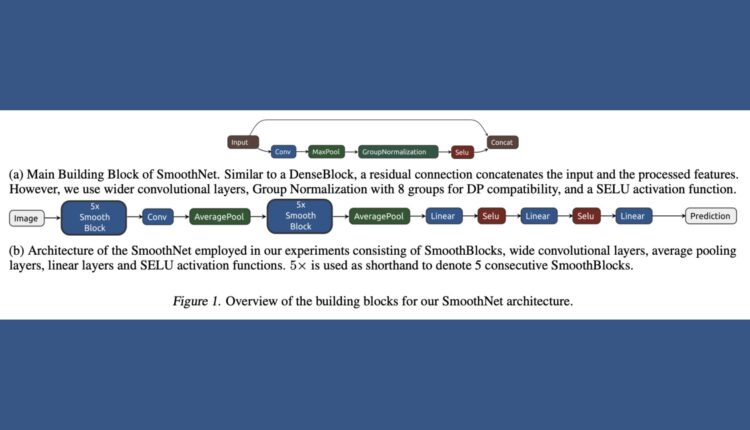

Based on these results, the authors proposed a new architecture named SmoothNet. Its core building blocks are called SmoothBlocks. They are inspired by DenseBlocks, with three modifications: the width of the 3×3 convolutional layers increases rapidly, Group Normalisation layers with eight groups replace Batch Normalisation, and SELU layers are used as activation functions. The depth of the network is limited to 10 SmoothBlocks. As in DenseNets, average pooling is applied between SmoothBlocks. The extracted features are compressed to 2048 features and fed to a classifier block made of three linear layers separated by SELU activation functions.
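As a rough illustration of the Group Normalisation choice, the sketch below normalises one sample's feature map over eight channel groups in plain Python. It is a simplified stand-in under stated assumptions, not the SmoothNet code: the function name and the list-of-lists layout are hypothetical, and the learnable scale and shift used by real layers are omitted. The statistics are computed within a single sample, so no information is shared across the batch, which fits the per-sample gradients that DP-SGD relies on.

```python
import math


def group_norm(channels, num_groups=8, eps=1e-5):
    """Group-normalise one sample (hypothetical helper, no learnable affine).

    `channels` is a list with one entry per channel; each entry is a flat list
    of that channel's spatial values. Mean and variance are computed jointly
    over all values of the channels in a group.
    """
    if len(channels) % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    per_group = len(channels) // num_groups
    out = []
    for start in range(0, len(channels), per_group):
        group = channels[start:start + per_group]
        values = [v for channel in group for v in channel]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        inv_std = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * inv_std for v in channel] for channel in group])
    return out


if __name__ == "__main__":
    # 16 channels with 4 spatial values each -> 8 groups of 2 channels.
    sample = [[float(c * 4 + i) for i in range(4)] for c in range(16)]
    normalised = group_norm(sample, num_groups=8)
    print(normalised[0])
```

With eight groups, the channel count of every normalised layer has to be a multiple of eight, which the sketch enforces with an explicit check.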

To validate the novel architecture, the authors performed an experimental study on CIFAR-10 and ImageNette, a subset of ImageNet. SmoothNet's performance is compared to that of several standard architectures, such as ResNet-18, ResNet-34, EfficientNet-B0, and DenseNet-121. The results show that SmoothNet achieves the highest validation accuracy when trained with DP-SGD.

This article summarized an investigation into architectural choices that enable high-utility training of neural networks with DP guarantees. SmoothNet, a novel network, was proposed to counter the performance decrease caused by using DP-SGD during training. The reported results indicate that the proposed network outperforms previous works that follow the strategy of architectural modifications.

This article is written as a research summary by Marktechpost Staff based on the research paper 'SmoothNets: Optimizing CNN architecture design for differentially private deep learning'. All credit for this research goes to the researchers on this project.

Mahmoud is a PhD researcher in machine learning. He also holds a bachelor’s degree in physical science and a master’s degree in telecommunications and networking systems. His current areas of research concern computer vision, stock market prediction and deep learning. He produced several scientific articles about person re-identification and the study of the robustness and stability of deep networks.
