Researchers from Johns Hopkins and UC Santa Cruz Unveil D-iGPT: A Groundbreaking Advance in Image-Based AI Learning

Natural language processing (NLP) has entered a transformational period with the introduction of Large Language Models (LLMs) such as the GPT series, which have set new performance standards across a wide range of linguistic tasks. One of the main drivers of this success is autoregressive pretraining, which teaches a model to predict the most likely next token in a sequence. Through this technique, models absorb the complex interplay between syntax and semantics, which contributes to their strong, human-like grasp of language. Beyond NLP, autoregressive pretraining has also contributed substantially to computer vision.
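To make the idea of next-token prediction concrete, here is a minimal sketch in plain Python. It is illustrative only: `next_token_pairs`, `average_nll`, and the toy `uniform_model` are hypothetical names invented for this example, and a real LLM would replace the toy model with a trained neural network.

```python
import math

def next_token_pairs(tokens):
    """Return (context, target) pairs: each token is predicted from its prefix."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def average_nll(tokens, predict_probs):
    """Average negative log-likelihood of each token given its prefix.

    predict_probs(context) is a hypothetical stand-in for a trained model:
    it returns a dict mapping candidate next tokens to probabilities.
    """
    pairs = next_token_pairs(tokens)
    total = 0.0
    for context, target in pairs:
        probs = predict_probs(context)
        total += -math.log(probs[target])
    return total / len(pairs)

def uniform_model(context, vocab=("the", "cat", "sat", "down")):
    # Toy model that ignores the context and spreads probability evenly.
    return {tok: 1.0 / len(vocab) for tok in vocab}

sentence = ["the", "cat", "sat", "down"]
pairs = next_token_pairs(sentence)
loss = average_nll(sentence, uniform_model)
```

Pretraining amounts to adjusting the model so that this average loss goes down over a large corpus; the uniform toy model is simply the worst informed baseline over its four-word vocabulary.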

In computer vision, autoregressive pretraining saw early success, but later work shifted sharply toward BERT-style pretraining. The shift is notable given the early results from iGPT, which showed autoregressive and BERT-style pretraining performing similarly across various tasks. Subsequent research has nonetheless come to prefer BERT-style pretraining because it is more effective for visual representation learning. For instance, MAE shows that a scalable approach to visual representation learning can be as simple as predicting the values of randomly masked pixels.
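The masked-prediction idea can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not MAE's actual implementation: the helper names `random_mask` and `masked_mse`, the 8-patch "image", the 0.75 mask ratio, and the constant-0.5 "model" are all chosen here for demonstration.

```python
import random

def random_mask(num_patches, mask_ratio, rng):
    """Choose which patch indices to hide; returns a sorted list of indices."""
    num_masked = int(num_patches * mask_ratio)
    return sorted(rng.sample(range(num_patches), num_masked))

def masked_mse(target_patches, predicted_patches, masked_indices):
    """Mean squared error computed only over the masked patches."""
    total, count = 0.0, 0
    for i in masked_indices:
        for t, p in zip(target_patches[i], predicted_patches[i]):
            total += (t - p) ** 2
            count += 1
    return total / count

rng = random.Random(0)
# Toy "image": 8 patches of 4 pixel values each (real patches are far larger).
patches = [[rng.random() for _ in range(4)] for _ in range(8)]
masked = random_mask(len(patches), mask_ratio=0.75, rng=rng)
# Stand-in for a model: predict mid-gray (0.5) for every pixel.
predictions = [[0.5] * 4 for _ in patches]
loss = masked_mse(patches, predictions, masked)
```

The key design point is that the loss only looks at the hidden patches, so the model is rewarded for inferring missing content from what remains visible rather than for copying its input.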

In this work, the research team from Johns Hopkins University and UC Santa Cruz revisits iGPT and asks whether autoregressive pretraining can produce highly proficient vision learners, particularly when applied at scale. Their approach incorporates two important changes. First, because images are naturally noisy and redundant, the research team uses BEiT to “tokenize” images into semantic tokens. This modification shifts the focus of the autoregressive prediction from pixels to semantic tokens, allowing for a more sophisticated understanding of the interactions between different image regions. Second, the research team supplements the generative decoder, which autoregressively predicts the next semantic token, with a discriminative decoder.
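The two changes can be pictured with a small sketch. It is not the authors' code: it assumes each image patch has a target feature vector (a "semantic token") from a frozen tokenizer, that the generative decoder at position i is scored against token i + 1, and that the discriminative decoder at position i is scored against the token of the visible patch i, as the article notes below. The cosine-distance loss, the equal weighting of the two terms, and the name `two_decoder_loss` are assumptions made for illustration.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def two_decoder_loss(semantic_tokens, gen_preds, disc_preds):
    """Sum of a next-token (generative) term and a visible-token (discriminative) term.

    semantic_tokens[i]: target feature for patch i (assumed frozen tokenizer output).
    gen_preds[i]: generative decoder output at position i, a guess at token i + 1.
    disc_preds[i]: discriminative decoder output at position i, a guess at token i.
    """
    n = len(semantic_tokens)
    # Generative: position i is scored against the NEXT token,
    # so the final position has no target and is skipped.
    gen = sum(
        cosine_distance(gen_preds[i], semantic_tokens[i + 1]) for i in range(n - 1)
    ) / (n - 1)
    # Discriminative: position i is scored against the token of the patch already seen.
    disc = sum(
        cosine_distance(disc_preds[i], semantic_tokens[i]) for i in range(n)
    ) / n
    return gen + disc  # equal weighting is an assumption of this sketch

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
perfect_gen = [tokens[1], tokens[2], [1.0, 0.0]]  # last output is never scored
loss_perfect = two_decoder_loss(tokens, perfect_gen, tokens)
# Feeding the unshifted tokens to the generative term is penalized,
# because each position is compared with its successor.
loss_unshifted = two_decoder_loss(tokens, tokens, tokens)
```

In this toy setup, `loss_perfect` is essentially zero while `loss_unshifted` is not, which shows that the generative term really does ask for the next token rather than the current one.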

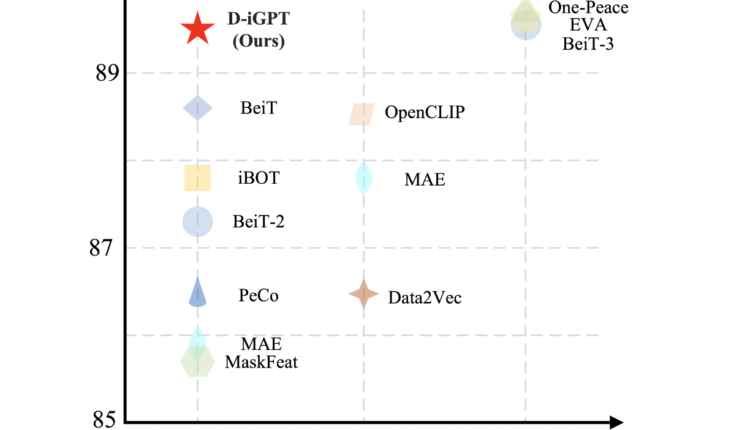

This extra component, the discriminative decoder, is responsible for predicting the semantic tokens of the visible pixels. Interestingly, models trained discriminatively, like CLIP, provide the semantic visual tokens best suited to this pretraining pathway. The research team refers to this improved method as D-iGPT. Extensive experiments across various datasets and tasks confirm the effectiveness of D-iGPT. Using ImageNet-1K as the only data source, their base-size model achieves 86.2% top-1 classification accuracy, outperforming the prior state of the art by 0.6%.

Additionally, their large-scale model achieves 89.5% top-1 classification accuracy when trained on 36 million publicly available images. D-iGPT thus performs comparably to earlier state-of-the-art models trained on public datasets while using far less training data and a smaller model. Using the same pretraining and fine-tuning dataset, the research team also evaluated D-iGPT on semantic segmentation, finding that it outperforms its MAE counterparts.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.


Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.
