UC Berkeley And Intel Labs AI Team Introduces GAN-Tuned, Physics-Based Noise Model That Boosts Video Quality In The Dark

This Article Is Based On The Research Paper 'Dancing under the stars: video denoising in starlight'. All Credit For This Research Goes To The Researchers Of This Paper.

Humans have relatively poor night vision compared with many mammals and birds that must hunt in the dark to survive. Seeing in the darkest conditions (clear, moonless nights) is extremely difficult because so little light is available. In such conditions, photographers can use long exposure times (seconds or longer) to collect enough light from the scene. This works well for still photos, but it has low temporal resolution and cannot capture moving subjects.

Many photographers instead use cameras that can boost the gain, effectively increasing each pixel's sensitivity to light. This permits shorter exposures, but the noise in each frame increases substantially. Denoising techniques can then be used to improve the quality of these noisy images.
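Why do shorter exposures produce noisier frames? A standard back-of-the-envelope model, assumed here rather than taken from the paper, treats a pixel that collects on average $N$ photoelectrons as having Poisson shot noise with variance $N$ plus Gaussian read noise with standard deviation $\sigma_r$ (both in electrons). The values of $N$ and $\sigma_r$ below are purely illustrative:

$$
\begin{aligned}
\mathrm{SNR} &= \frac{N}{\sqrt{N + \sigma_r^{2}}} \\
N = 400,\ \sigma_r = 2:\quad \mathrm{SNR} &= \frac{400}{\sqrt{404}} \approx 19.9 \\
N = 4,\ \sigma_r = 2:\quad \mathrm{SNR} &= \frac{4}{\sqrt{8}} \approx 1.41
\end{aligned}
$$

In this toy model, cutting the exposure by 100× drops the SNR from about 20 to about 1.4, which is why short-exposure frames of very dim scenes are dominated by noise.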

Many deep denoising algorithms have been developed over the years. While effective for some denoising applications, most of these methods rely on a simple noise model (Gaussian or Poisson-Gaussian noise), which breaks down in extremely low-light conditions. When a high sensor gain is used in low light, the noise is often non-Gaussian, nonlinear, sensor-specific, and difficult to predict or characterize. Denoising algorithms that do not capture this noise structure may fail, mistaking the structured noise for signal.
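For reference, the simple Poisson-Gaussian model that most existing methods assume can be sampled in a few lines. This is a minimal sketch of that baseline, not the paper's noise model; the function names and parameters (`photons_per_unit`, `read_sigma`) are hypothetical, and it sticks to the Python standard library, so Knuth's method stands in for a library Poisson sampler.

```python
import math
import random


def sample_poisson(lam, rng):
    """Knuth's Poisson sampler; adequate for the small means typical of low light."""
    threshold = math.exp(-max(lam, 0.0))
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def poisson_gaussian_noise(clean, photons_per_unit, read_sigma, seed=None):
    """Simulate a noisy frame from a clean one under a Poisson-Gaussian model.

    clean: 2-D list of intensities in [0, 1].
    photons_per_unit: expected photoelectrons at intensity 1.0 (smaller = darker).
    read_sigma: standard deviation of Gaussian read noise, in photoelectrons.
    Returns a 2-D list of noisy values rescaled back to intensity units.
    """
    rng = random.Random(seed)
    noisy = []
    for row in clean:
        out_row = []
        for x in row:
            electrons = sample_poisson(x * photons_per_unit, rng)  # shot noise
            electrons += rng.gauss(0.0, read_sigma)                 # read noise
            out_row.append(electrons / photons_per_unit)
        noisy.append(out_row)
    return noisy


if __name__ == "__main__":
    clean = [[0.5] * 4 for _ in range(4)]  # flat gray 4x4 patch
    noisy = poisson_gaussian_noise(clean, photons_per_unit=8, read_sigma=2.0, seed=0)
    print([round(v, 2) for v in noisy[0]])
```

With only a few photoelectrons per pixel the output is very noisy, yet its statistics remain simple and predictable. The paragraph's point is that real high-gain sensor noise is structured in ways this two-term model cannot represent.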

A recent paper, “Dancing Under the Stars: Video Denoising in Starlight,” by a team from UC Berkeley and Intel Labs, uses a GAN-tuned, physics-based model of camera noise in low light to train a video denoiser that, for the first time, achieves photorealistic video denoising in starlight.

A bright day provides roughly 100 kilolux of illumination, whereas moonlight provides only about 1 lux. The researchers aimed several orders of magnitude lower still, targeting video denoising at sub-millilux (starlight-only) light levels.
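Taking the article's figures at face value and treating 1 millilux ($10^{-3}$ lux) as the upper bound of the target range, the gap in illumination works out to:

$$
\frac{10^{5}\ \text{lux (daylight)}}{10^{-3}\ \text{lux}} = 10^{8},
\qquad
\frac{1\ \text{lux (moonlight)}}{10^{-3}\ \text{lux}} = 10^{3}
$$

So sub-millilux scenes are more than eight orders of magnitude dimmer than daylight and more than three orders of magnitude dimmer than moonlight.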

They achieve this in three steps:

1. Setting a high-quality CMOS camera designed for low-light imaging to its maximum gain.

2. Learning the camera's noise model using a physics-inspired noise generator and easy-to-obtain noisy still images from the camera.

3. Training their video denoiser on synthetic clean/noisy video pairs produced by the noise model.
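To make steps 2 and 3 concrete, here is a hand-parameterized sketch of a physics-inspired generator that turns a clean clip into a noisy one. The specific components (signal-dependent shot noise, read noise, per-frame row noise, and fixed-pattern noise) are common in the sensor-noise literature and are an assumption here; the article does not list the paper's exact terms, and in the paper the noise parameters are learned (GAN-tuned) rather than set by hand. `NoiseParams` and `synthesize_noisy_clip` are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass
class NoiseParams:
    """Hypothetical noise parameters; a learned model would fit these, not hand-set them."""
    shot: float = 0.01    # shot-noise variance per unit intensity (signal-dependent)
    read: float = 0.02    # std. dev. of per-pixel Gaussian read noise
    row: float = 0.01     # std. dev. of a per-row offset, redrawn every frame
    fixed: float = 0.005  # std. dev. of fixed-pattern offset, constant across frames


def synthesize_noisy_clip(clean_clip, params, seed=None):
    """Turn a clean clip (list of 2-D frames) into a noisy clip of the same shape."""
    rng = random.Random(seed)
    height, width = len(clean_clip[0]), len(clean_clip[0][0])
    # Fixed-pattern noise is drawn once, so it is identical in every frame.
    fpn = [[rng.gauss(0.0, params.fixed) for _ in range(width)] for _ in range(height)]
    noisy_clip = []
    for frame in clean_clip:
        noisy_frame = []
        for y, row in enumerate(frame):
            row_offset = rng.gauss(0.0, params.row)  # banding that changes per frame
            noisy_row = []
            for x, value in enumerate(row):
                # Gaussian approximation of Poisson shot noise: variance grows with signal.
                shot_std = (params.shot * max(value, 0.0)) ** 0.5
                noise = rng.gauss(0.0, shot_std) + rng.gauss(0.0, params.read)
                noisy_row.append(value + noise + row_offset + fpn[y][x])
            noisy_frame.append(noisy_row)
        noisy_clip.append(noisy_frame)
    return noisy_clip


if __name__ == "__main__":
    clean_clip = [[[0.1] * 8 for _ in range(6)] for _ in range(3)]  # 3 dim frames
    training_pair = (clean_clip, synthesize_noisy_clip(clean_clip, NoiseParams(), seed=1))
```

The design point worth noticing is the split between noise that is redrawn every frame and noise that persists across frames. A video denoiser trained on pairs like `training_pair` sees both kinds, temporal structure that an independent per-frame Gaussian model would not provide.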

Current deep-learning-based approaches require a large number of image pairs to denoise well in low-light scenarios. In contrast, the proposed noise generator is trained on a small dataset of clean/noisy image bursts, so it does not require any experimentally captured, motion-aligned clean/noisy video clips. This substantially reduces the cost of preparing training data while still delivering competitive results. The team uses a GAN-based adversarial setup: the noise generator produces new noise samples at each forward pass, and a discriminator assesses the synthesized noisy images for realism, pushing the generator toward realistic noise.
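The article does not say which adversarial loss the paper uses. As an assumption, the sketch below uses the standard non-saturating GAN losses: a discriminator D outputs the probability that a noisy image is real, and is trained to score real camera noise high and synthetic noise low, while the generator is trained to make D score its samples high. The function names are hypothetical, and the D outputs are plain numbers standing in for a neural network.

```python
import math


def _bce(prob, target, eps=1e-7):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real, d_fake):
    """D should output 1 for real noisy frames and 0 for synthesized ones."""
    real = sum(_bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(_bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake):
    """Non-saturating generator loss: push D's score on synthetic noise toward 1."""
    return sum(_bce(p, 1.0) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    d_real = [0.9, 0.8]  # D's scores on real noisy camera frames
    d_fake = [0.2, 0.3]  # D's scores on freshly synthesized noisy frames
    print(discriminator_loss(d_real, d_fake), generator_loss(d_fake))
```

In a full training loop, the generator would draw fresh noise on every forward pass, D would score the new batch, and the two losses would be minimized in alternation with respect to D's and the generator's parameters.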

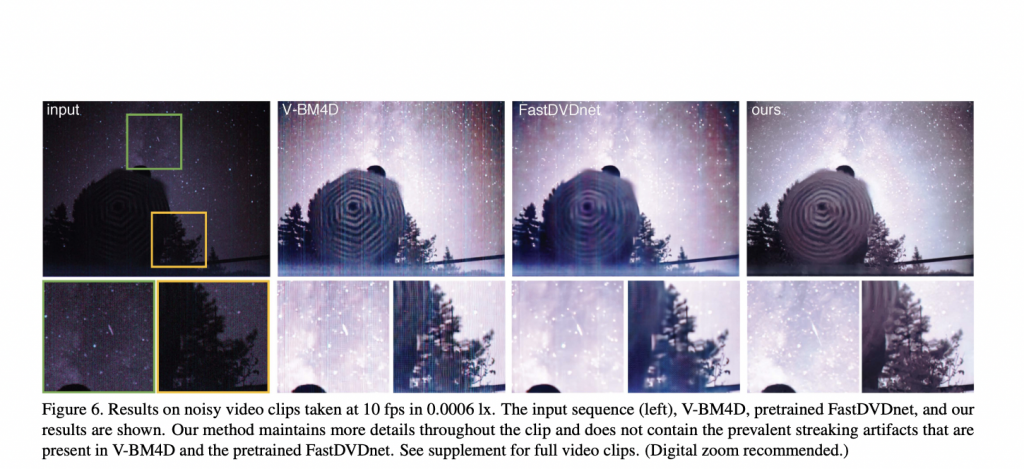

The researchers compared their noise generator and video denoiser pipeline against numerous existing denoising methods and noise-model baselines for low-light imaging. The proposed approach outperforms all of the baselines and delivers photorealistic video denoising in starlight.

This work demonstrates the potential of deep-learning-based denoising in extremely low light. The team expects it to enable further scientific advances in this area of computer vision research and to improve robot vision in extremely dark environments.

Paper: https://arxiv.org/pdf/2204.04210.pdf
