Human laughter as we know it likely developed between 10 and 16 million years ago.

For context, the stone tools our distant human ancestors made in the Early Stone Age date back around 2.6 million years.

These are vast time spans, but it was perhaps good that our Palaeolithic ancestors had a sense of humour ready to deal with tech fails such as a blunt hammerstone.

Why does this matter? Well, let’s fast forward to today and our contemporary issues with technology, such as how to deal with the things we’ve made when they fail us. Anger is a common response – but tech companies would much rather harness the soothing power of laughter.

Social animals that we are, humans have built important societal functions around laughter in a thousand different ways.

Laughter can repair a conversation gone awry. It can signal that we support someone in a group or think we belong to a community. It can be a flirtation device or simply suggest benevolence when engaging with others. Some people use laughter to manufacture instant feelings of trust. Others laugh at funerals.

The short-term effects of laughter are medically proven. It can send endorphins to the brain and reduce depression and anxiety symptoms. Laughter can even raise one’s pain threshold by as much as 10%.

However, one of the social functions of laughter that interests tech giants and online app developers is its ability to soothe and to smooth. In an era in which we are increasingly reliant on digital devices and a rapidly growing online service industry, humour can be a potent form of stress relief.

Clearly, big industry players would prefer we hold on to our devices rather than angrily quitting or hitting them whenever an error 404 message appears or an update seems stuck at 10% completion. Laughter helps us to deal with these frustrating experiences.

If our virtual assistants, cybernetic robots, and digital avatars can emote a sense of humour that pleases us, the logic is that this will help us tolerate the irksome aspects of technology.

Datafied humour

Trying to reproduce laughter digitally comes with its own set of challenges. Tech companies start by understanding what we find funny – through analysing what we produce and interact with online. Think of the last thing that made you laugh. Chances are it was a pun. However, chances are also it wasn’t even a joke based on words.

This is where data and our reaction to it come into play. One study found there is an 85% chance we’ll use the laughing-crying face emoji to react to something we find even remotely funny online. We deploy this versatile “face with tears of joy” to signal appreciation, share laughter, and reward our friends’ wit in chat groups. LOL anyone?

Yet each time we post a digital smiley, it creates a machine-readable tag. Think of it as a process of adding invisible writing to whatever it is we’re adding the emoji to – this is metadata or “data about data”.

We produce billions of those tell-tale tags each day. They allow algorithms to develop their own sense of human humour and perfect their funny-content-and-user matchmaking. The algorithms learn from our “likes” (basically the business model of Meta, the company formerly known as Facebook).

It’s all about figuring out that personal taste profile – something that used to happen explicitly via surveys, but now can transpire invisibly without us even being asked.
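To make the idea of a taste profile built from emoji tags concrete, here is a minimal sketch. It is not any company's actual algorithm: the function name `taste_profile`, the content categories and the input format are all assumptions made for illustration.

```python
from collections import Counter

# "Face with tears of joy", the laughing-crying emoji discussed above.
LAUGH_EMOJI = "\U0001F602"


def taste_profile(reactions):
    """Turn (category, emoji) reaction tags into a humour taste profile.

    `reactions` is a hypothetical list of pairs such as ("puns", LAUGH_EMOJI).
    The result maps each category to its share of the user's laugh reactions.
    """
    laughs = Counter(category for category, emoji in reactions
                     if emoji == LAUGH_EMOJI)
    total = sum(laughs.values())
    if total == 0:
        return {}  # no laugh reactions yet, so nothing to infer
    return {category: count / total for category, count in laughs.items()}


# Example: three laugh reactions and one thumbs-up, which is ignored.
example = [
    ("puns", LAUGH_EMOJI),
    ("cat videos", LAUGH_EMOJI),
    ("cat videos", LAUGH_EMOJI),
    ("news", "\U0001F44D"),
]
profile = taste_profile(example)
```

In this toy example, two thirds of the laugh reactions fall on cat videos, so a recommender built on such a profile would lean towards showing more of them.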

There are many of these algorithms, working in many different ways, but we have only limited information about them. As with Netflix’s famed recommendation engine, exactly how an algorithm functions, more precisely its source code, is often a well-kept trade secret of the company that employs it to detect, analyse and recommend humorous content.

Here’s what we do know though.

Witscript, TikTok and Instagram

The purpose of these algorithms is to match us to something we personally find funny and keep us “glued” to our devices. But the kinds of datafied humour producing a virtual laugh adhesive can vary widely.

The current most commercially viable example of applying a humour AI to digital applications is the chatbot. Chatbots draw on vast amounts of language data sets, which are processed through machine learning and used to formulate text based on a user-given prompt or dialogue.

Encoding verbal humour this way into a chatbot’s algorithmic DNA has produced Witscript, a self-proclaimed “joke generator powered by artificial intelligence […] and the wit of a four-time Emmy-winning comedy writer”, Joe Toplyn.

Language-based joke generators like Witscript turn on the same generative AI principles as ChatGPT. Witscript’s originator claims:

human evaluators judged Witscript’s responses to input sentences to be jokes more than 40% of the time. This is evidence that Witscript represents an important next step toward giving a chatbot a human-like sense of humor.

Meanwhile, TikTok is equipped with one of the best recommending engines in the business. The app’s average user typically spends a whopping 1.5 hours per day on the platform, which draws them in through an assemblage of algorithms creating TikTok’s For You page experience. It is mostly filled with viral videos, memes and other trending short-form comedy content.

By tracking not only our active, but also our passive behaviour when we consume digital content (for example how many times we loop a video, how quickly we scroll past certain content and whether we are drawn to a particular category of effects and sounds), the app infers how funny we find something. This then triggers a process of sending this content to other user profiles similar to ours. Their reactions set off another wave of digital shares – the basics of viral humour.
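How passive signals might be folded into a single "how funny was this?" estimate can be sketched as follows. TikTok's real system is a trade secret, so everything here is an assumption: the signal names, the weights and the sharing threshold are invented purely to illustrate the principle.

```python
from dataclasses import dataclass


@dataclass
class ViewingSignals:
    """Hypothetical signals for one user watching one video."""
    loops: int                 # how many times the video was replayed
    seconds_watched: float     # time spent before scrolling on
    video_length: float        # length of the video in seconds
    reacted_with_laugh: bool   # whether a laughing reaction was posted


def funniness_score(s: ViewingSignals) -> float:
    """Combine the signals into a score between 0 and 1 (made-up weights)."""
    if s.video_length <= 0:
        watch_ratio = 0.0
    else:
        watch_ratio = min(s.seconds_watched / s.video_length, 1.0)
    loop_bonus = min(s.loops, 3) / 3           # cap the effect of replays
    laugh_bonus = 1.0 if s.reacted_with_laugh else 0.0
    return 0.4 * watch_ratio + 0.3 * loop_bonus + 0.3 * laugh_bonus


def should_share_with_similar_users(s: ViewingSignals,
                                    threshold: float = 0.6) -> bool:
    """Decide whether to push the video to similar profiles (assumed rule)."""
    return funniness_score(s) >= threshold


# A video watched in full, looped three times and rewarded with a laugh.
hit = ViewingSignals(loops=3, seconds_watched=10, video_length=10,
                     reacted_with_laugh=True)
# A video scrolled past after one second.
miss = ViewingSignals(loops=0, seconds_watched=1, video_length=10,
                      reacted_with_laugh=False)
```

Under these invented weights the first video would be passed on to similar profiles and the second would not, which is the viral loop in miniature.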

That TikTok’s automated humour pipeline just feels right to its mostly Gen Z users is underlined by the fact that 54% of US teens said last year it would be hard to give up their connection to social media.

Instagram is another app that wants you to feel good about what it lets you do with its application features. Its react messages give us an animated flurry of smiles when our finger taps the phone screen to release a laugh cascade.

Live videos enable users to unleash a swirling mass of Quick Stream Reactions while watching, one option being big-toothed smiles.

This way of making tech feel less techy is eerily reminiscent of the canned laughs that floated out of the TV set and into our living rooms with every laugh-tracked sitcom made in the 1980s.

There is no end to the ingenuity with which we try to make each other, and ourselves, comfortingly laugh in real life. Why should our online world and our datafied selves that inhabit it not work that way too? And why stop at artificial apps, if we can have artificial people?

The avatars: ERICA, Jess, and Wendy

Laughter is one of the most ubiquitous and pleasurable things humans do. Just ask the international team of roboticists who built a synthetic humanoid named ERICA.

ERICA was designed to detect when you’re laughing. She would then decide whether to laugh in return and choose to reciprocate with either a chuckle or a giggle.
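The three steps described here (detect a laugh, decide whether to laugh back, pick the kind of laugh) can be pictured as a simple decision chain. The sketch below is not the ERICA team's code. It assumes three probabilities have already been produced by some upstream models, and the thresholds and the mapping of "giggle" to the more mirthful laugh are arbitrary choices made for illustration.

```python
from typing import Optional


def respond_to_user(laugh_probability: float,
                    share_probability: float,
                    mirth_probability: float,
                    detect_threshold: float = 0.5,
                    share_threshold: float = 0.5) -> Optional[str]:
    """Return "giggle", "chuckle", or None if the robot should stay quiet.

    All three inputs are hypothetical model outputs between 0 and 1.
    """
    # Step 1: did the user actually laugh?
    if laugh_probability < detect_threshold:
        return None
    # Step 2: is laughing back appropriate in this moment?
    if share_probability < share_threshold:
        return None
    # Step 3: choose the kind of laugh (assumed mapping).
    return "giggle" if mirth_probability >= 0.5 else "chuckle"
```

Keeping the steps separate mirrors the description above: the robot can recognise laughter and still decide that staying silent is the better response.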

(If this sounds familiar, the sci-fi series Westworld depicts lifelike android “hosts” who populate a theme park and interact convincingly with humans.)

When we talked to Divesh Lala, one of ERICA’s creators, he told us the goal for this project (completed in 2022) was to add more humanness to robots. Or at least the semblance.

But laughter is a very complex human emotion to replicate – 16 million years, remember? So, the challenge to emulate a nonverbal human process in real-world situations was formidable.

ERICA may be 10-20 years away from laughing spontaneously and realistically at her humans, says Koji Inoue, assistant professor at Kyoto University’s Graduate School of Informatics and lead author on a paper describing the ERICA project.

But let’s look at the data that her AI framework was trained on. In this case, the Japanese research team used, or datafied, 80 speed-dating dialogues from a matchmaking session with Kyoto University students.

The double-edged sword here is, of course, that not all future users who interact with ERICA will laugh as if they were on a date. Yet understanding this difference in setting, tone, intention, context and social purpose is what they would expect of a machine designed to look and sound like a laughing human.

This “fooling act” is the intention of the Japanese government’s Moonshot Research and Development program which aims to “tackle important social issues, including Japan’s shrinking and ageing societies, global climate change, and extreme natural disasters”. It provided funding to the ERICA team with the aim of making this emotional service android laugh convincingly in thousands of different, unique situations.

But an AI sense of humour is tricky to get right – as other avatar examples prove.

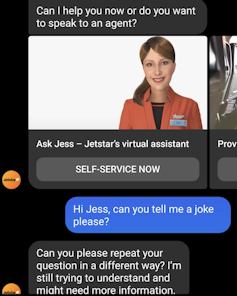

The inability of Jetstar Jess – the airline’s virtual interactive interface – to crack jokes in the self-help chat desk was all too obvious when she launched in 2013. Some chatters were more intent on trying to get a cheeky smile out of the avatar. She can now be found in Facebook Messenger.

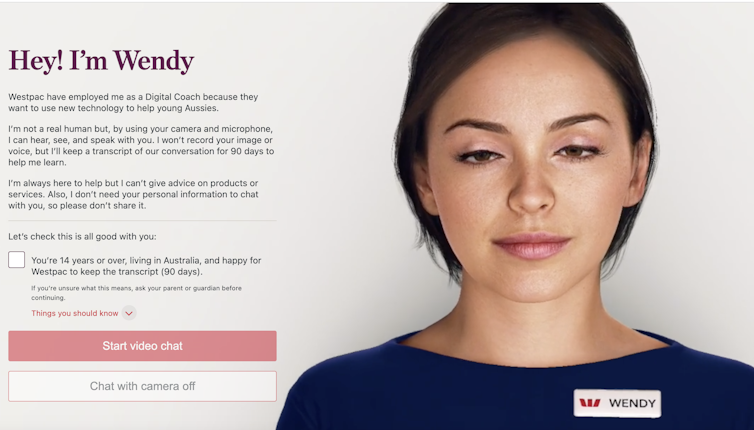

Meanwhile, the 3D-live-action-rendered Westpac Wendy, who says on the bank’s website that “Westpac have employed me as a Digital Coach because they want to use new technology to help young Aussies”, made her online debut a decade after Jess. She seems slightly better, with an improved ability to emote a more believable sense of humour.

Westpac’s AI technology allows the realistic rendering of Wendy’s face to smile in perfect unison with a computer-generated voice that tells PG-rated jokes when so asked. For instance, “I read a book on anti-gravity, I couldn’t put it down.”

While Wendy delivers her wit, her avatar face expresses a digitised version of a true Duchenne smile. This complex, concerted mobilisation of facial muscles around our mouth and our eyes reads as a genuine smile, compared to the social smile we give to others as common courtesy (which infants develop between six and eight weeks of age).

The race for replication is certainly on, with new Wendy avatars and many other humour-enabled androids appearing each year at tech expos. The AI scientists’ vision is of a future with artificial people who smile reassuringly back at us.

Here again, our use of online laughter is the key. These avatars are designed to feel as normal as programmers and web designers can possibly make them – but will they ever be as natural as the mirth of a four-year-old, who laughs on average 300 times a day?

This article is republished from The Conversation under a Creative Commons license. Read the original article.
